LINSTOR®, the software behind LINBIT®’s software-defined storage solutions, can be integrated into many different platforms and environments. Most of LINSTOR’s platform integrations require a configured LINSTOR cluster before you can install the respective driver and dynamically create replicated DRBD® volumes from that platform.

While we at LINBIT are very familiar with how to quickly stand up a LINSTOR cluster and which knobs to turn when, that might not be the case when you’re first starting out with LINSTOR. So, a long time ago in an office space not that far away, I put an Ansible playbook that I used for testing LINSTOR in various environments out on GitHub, where it lived happily for anyone (with a LINBIT evaluation or customer account) to quickly stand up test clusters of their own.

Since I originally shared my playbook, some things have changed that necessitated an update: CentOS is no longer Red Hat Enterprise Linux compatible, and RHEL is no longer open source (or something like that), so RHEL-compatible variants might become incompatible soon… I’m not sure about that, but updates were needed regardless, and so, here we are.

The new updates add support for Ubuntu 22.04, extend the RHEL support to include versions 8 and 9, and add the ability to control which LINSTOR satellites contribute unused block storage to the LINSTOR storage pool.

Using Ansible to Configure a LINSTOR Cluster

How do you use it? Well, first, you’ll need a local copy of the linstor-ansible playbook. Use git to clone the playbook’s GitHub repository.

$ git clone git@github.com:LINBIT/linstor-ansible.git

Next, you’ll need a couple of VMs or physical hosts to deploy onto. As mentioned in the playbook’s README, the following prerequisites must be met on the target nodes:

- All target systems must have passwordless SSH access.

- All hostnames used in the inventory file must be DNS resolvable (or use IP addresses instead).

- Target systems are RHEL 7/8/9 or Ubuntu 22.04 (or compatible variants).
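The first two prerequisites can be checked before you run anything from the playbook. The following is a minimal sketch and not part of the linstor-ansible repository: `check_node` and `preflight` are hypothetical helper names, and the sketch assumes an OpenSSH client on the machine you run Ansible from. Because `BatchMode=yes` stops ssh from prompting for a password, a successful login implies both that the name resolves and that key-based access works.

```shell
#!/bin/sh
# Pre-flight sketch (hypothetical helpers, not part of linstor-ansible).

# Try a non-interactive SSH login to one node.
check_node() {
    local host="$1"
    # BatchMode=yes makes ssh fail instead of asking for a password, so
    # success means passwordless login works (and the name resolved).
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
        echo "OK   $host"
    else
        echo "FAIL $host: passwordless SSH login did not succeed"
        return 1
    fi
}

# Check every node given as an argument; return non-zero if any failed.
preflight() {
    local failed=0 node
    for node in "$@"; do
        check_node "$node" || failed=1
    done
    return "$failed"
}

# Nodes are passed on the command line, for example:
#   ./preflight.sh 192.168.222.250 192.168.222.10
preflight "$@"
```

If Ansible will connect as a different user than the one you are logged in as, pass the nodes as `user@host` so that the check exercises the same login.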

Add the IP addresses or DNS resolvable hostnames to the playbook’s inventory file, which is the hosts.ini file in your linstor-ansible checkout. An example inventory file might look like this:

[controller]

192.168.222.250

[satellite]

192.168.222.250

192.168.222.10

192.168.222.11

192.168.222.12

[linstor_cluster:children]

controller

satellite

[linstor_storage_pool]

192.168.222.10

192.168.222.11

The example inventory above would result in the 192.168.222.250 node being configured as the LINSTOR controller for the cluster. Because it is also listed as a LINSTOR satellite, it can be assigned volumes as well. Only nodes 192.168.222.{10,11} will contribute block storage to the thin LVM LINSTOR storage pool created by the playbook, so they will hold the physical replicas that the remaining satellite nodes attach to “disklessly” using DRBD’s diskless attachment. All satellite nodes, including the “combined” LINSTOR controller and satellite, will have a file-backed storage pool created on them.
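Since the storage pool group is described as a selection of satellites, one easy mistake is listing a node under linstor_storage_pool without also listing it under satellite. The sketch below checks for that with awk. It is an illustration only: `hosts_in_group` and `storage_hosts_not_satellites` are hypothetical helper names, and the rule that storage pool nodes must also be satellites is my reading of the inventory layout rather than something the playbook is confirmed to enforce.

```shell
#!/bin/sh
# Inventory sanity-check sketch (hypothetical helpers, not part of linstor-ansible).

# Print the hosts listed under one group of an INI-style inventory.
# $1 = inventory file, $2 = group name
hosts_in_group() {
    awk -v group="[$2]" '
        /^\[/ { in_group = ($0 == group); next }          # section header line
        in_group && NF && $1 !~ /^[#;]/ { print $1 }      # first field is the host
    ' "$1"
}

# Print every host in [linstor_storage_pool] that is missing from [satellite].
# $1 = inventory file
storage_hosts_not_satellites() {
    local inventory="$1" host
    for host in $(hosts_in_group "$inventory" linstor_storage_pool); do
        hosts_in_group "$inventory" satellite | grep -qxF "$host" || echo "$host"
    done
}
```

Running `storage_hosts_not_satellites hosts.ini` from the checkout prints nothing when every storage pool node is also a satellite. Only the first field of each line is compared, so per-host variables appended to an entry do not affect the result.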

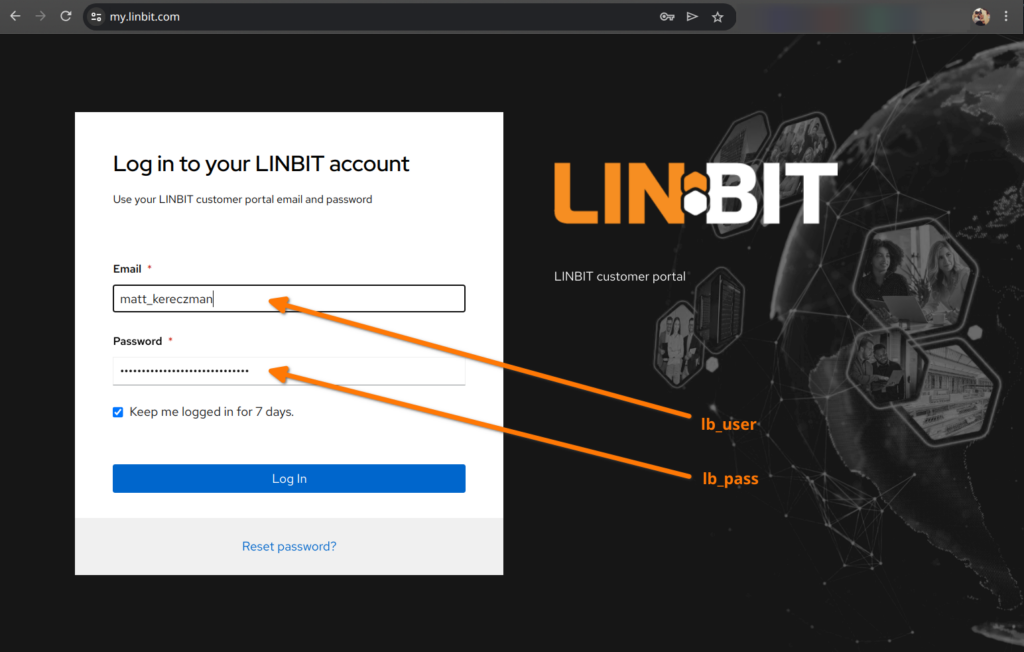

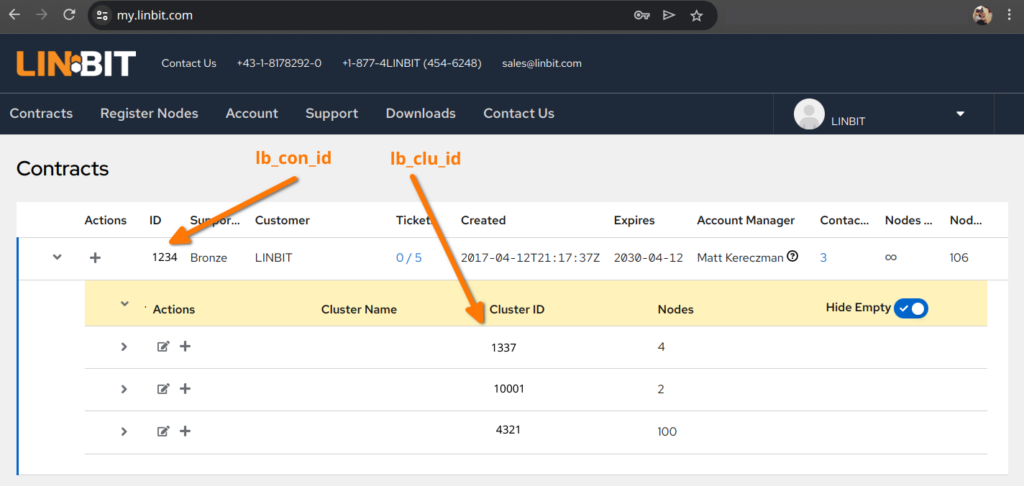

The last thing you’ll need to do before running the playbook is configure its options. These are found in the group_vars/all.yaml file of your linstor-ansible checkout. This is where you configure which user Ansible will use to configure the target systems, which network LINSTOR and DRBD will use to communicate and replicate over, and the LINBIT customer or evaluation account credentials you use to log in to https://my.linbit.com. The cluster and contract IDs can be found on the contracts page of the LINBIT customer portal, as pictured.

Any node that you want to participate in LINSTOR’s thin LVM storage pool (that is, every node listed under the linstor_storage_pool node group) needs an unused block device attached to it. Set the drbd_backing_disk variable to the path of that device.

TIP: The drbd_backing_disk variable can be appended to a host’s entry in the inventory file to override the value set in group_vars/all.yaml. For example, if one of your nodes has an unused /dev/sdc while all other nodes have an unused /dev/sdb, you can list that node in the inventory as: 192.168.222.11 drbd_backing_disk="/dev/sdc"

Now you’re ready to run the playbook.

$ ansible-playbook site.yaml

What Now?

Assuming all went well, you should have a basic LINSTOR cluster set up for you to test with! Log in to any of the nodes and you should be able to use the LINSTOR client to take a look around:

# linstor node list; linstor storage-pool list

Create a resource group backed by the lvm-thin storage pool (or the file-thin pool, if you did not add any nodes to the linstor_storage_pool node group), add a volume group to it, and spawn a resource from it:

# linstor resource-group create my_rg --storage-pool lvm-thin --place-count 2

# linstor volume-group create my_rg

# linstor resource-group spawn-resources my_rg my_res 4G
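To confirm that the spawn did what you expected, you can list the objects it created. The sketch below only wraps three standard LINSTOR client list commands in a function; `show_linstor_state` is a hypothetical name, and the sketch assumes you run it on a node where the LINSTOR client is installed and can reach the controller.

```shell
#!/bin/sh
# Verification sketch (hypothetical helper, not part of linstor-ansible).

# Print the resource groups, resources, and volumes known to the controller.
show_linstor_state() {
    linstor resource-group list   # my_rg should appear with its place count
    linstor resource list         # one row per node that holds my_res
    linstor volume list           # the 4G volume and its backing storage pool
}

# Only run the listing where the LINSTOR client is actually available.
if command -v linstor >/dev/null 2>&1; then
    show_linstor_state
fi
```

With a place count of 2, you would expect the resource to be listed on two nodes.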

You could also integrate this storage cluster into one of the platforms listed below using the respective integration’s drivers and documentation:

- LINSTOR Proxmox plug-in

- LINSTOR Docker volume plug-in

- LINSTOR CSI driver for Kubernetes

- LINSTOR Gateway and DRBD Reactor

If you have any questions or trouble using the playbook mentioned in this blog post, or with getting any of the aforementioned integrations working, don’t hesitate to reach out to me in the LINBIT community. You can also use the contact us form if you need to start an evaluation or contact sales to set up a meeting.