Attaching an NFS share to your Proxmox cluster is a convenient way to add reliable storage for backups, ISO images, container templates, and more. If LINSTOR® is already an integral part of your Proxmox cluster, LINSTOR Gateway makes it easy to create highly available NFS exports for Proxmox.

In a recent video, we covered 10 Things You Can Do with LINSTOR and Proxmox. The 5th topic highlights deploying NFS with one command. In this blog post, we’ll cover the prerequisites and configuration required to create an NFS export for use with a Proxmox cluster.

Assumptions

- You have a working Proxmox Virtual Environment (PVE) 8.X cluster with three or more nodes.

- LINSTOR is installed alongside Proxmox. Each Proxmox node is also a member of a LINSTOR cluster. If you need to install and configure LINSTOR for Proxmox, you can follow our blog post as an installation guide.

- The LINSTOR controller is currently configured as a single instance and is not highly available.

- The configuration steps below need to be performed on all nodes in the cluster unless specified otherwise.

- All commands are run under the root user account or prefixed with sudo.

Required Packages

    apt -y install drbd-reactor iptables nfs-common nfs-kernel-server psmisc resource-agents rpcbind

📝 NOTE: Packages such as iptables and psmisc should already be installed with a default Proxmox installation, but might not be present with a base Debian installation.

Installing LINSTOR Gateway

If you are a LINBIT customer, you can simply install LINSTOR Gateway by using an apt command:

    apt -y install linstor-gateway

If you’re using the LINBIT Public Repositories for Proxmox, you can install LINSTOR Gateway by fetching the latest binaries from GitHub:

- Fetch the latest release from GitHub:

    wget -O /usr/sbin/linstor-gateway \
        https://github.com/LINBIT/linstor-gateway/releases/latest/download/linstor-gateway-linux-amd64

- Set execute permissions:

    chmod +x /usr/sbin/linstor-gateway

Portblock Resource Agent Workaround

On Debian systems, a workaround is currently needed due to an interaction between iptables version 1.8.9 and the portblock resource agent. However, the latest upstream resource agents are patched to handle this interaction and can be used as a workaround. After iptables package version 1.8.10-4 is released for Debian stable, this workaround will no longer be necessary.

- Check for available versions of iptables:

    apt update && apt-cache madison iptables

- If iptables 1.8.10-4 or newer is unavailable, copy the portblock resource agent from upstream to each node:

    wget -O /usr/lib/ocf/resource.d/heartbeat/portblock \
        https://raw.githubusercontent.com/ClusterLabs/resource-agents/main/heartbeat/portblock
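The version comparison in the steps above can also be scripted. The following is a minimal sketch, not part of the original procedure. It compares a version string against 1.8.10-4 by using `sort -V`, which approximates Debian version ordering for simple versions like these (on Debian, `dpkg --compare-versions` is the authoritative comparison). The function names `version_ge` and `needs_portblock_workaround` are hypothetical helpers introduced only for illustration.

```shell
# version_ge A B: succeeds when version A is greater than or equal to B.
# Relies on `sort -V` (version sort), so the smaller version sorts first.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# needs_portblock_workaround VERSION: succeeds when VERSION is older than
# 1.8.10-4, the iptables package version named in this article.
needs_portblock_workaround() {
    ! version_ge "$1" "1.8.10-4"
}

# Example usage on a node (assumes apt-cache is available, as on Debian):
#   candidate=$(apt-cache policy iptables | awk '/Candidate:/ {print $2}')
#   if needs_portblock_workaround "$candidate"; then
#       echo "iptables $candidate: copy the upstream portblock agent"
#   fi
```

Version strings with epochs or tilde suffixes might not sort as Debian would order them, so treat the result as a hint rather than a guarantee.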

Configuring LINSTOR Satellite Nodes

LINSTOR Gateway requires some minor configuration changes to various components on each LINSTOR satellite node.

- Create and edit the file /etc/linstor/linstor_satellite.toml. Paste the following LINSTOR satellite configuration options into this file:

    [files]
    allowExtFiles = [
      "/etc/systemd/system",
      "/etc/systemd/system/linstor-satellite.service.d",
      "/etc/drbd-reactor.d"
    ]

- Restart the LINSTOR satellite service for the configuration changes to take effect:

    systemctl restart linstor-satellite

- Configure DRBD Reactor to automatically reload itself after configuration changes by creating, enabling, and starting a systemd service to do this:

    cp /usr/share/doc/drbd-reactor/examples/drbd-reactor-reload.{path,service} /etc/systemd/system/
    systemctl enable --now drbd-reactor-reload.path

- Disable NFS. After installing the NFS server components, the NFS server service automatically starts and enables itself. Going forward, you need to stop and disable the NFS server service on each node, allowing DRBD Reactor to exclusively control the NFS server service:

    systemctl disable --now nfs-server.service
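Because the satellite configuration has to be repeated on every node, a quick check can help catch a node where an entry was missed. The sketch below is an illustration only: it does a plain text search for the three quoted paths from the configuration above and does not parse TOML. The function name `missing_ext_paths` is a hypothetical helper.

```shell
# missing_ext_paths FILE: print each required allowExtFiles path that does
# not appear (as a double-quoted string) in FILE.
# Prints nothing when all three paths are present.
missing_ext_paths() {
    for required in \
        /etc/systemd/system \
        /etc/systemd/system/linstor-satellite.service.d \
        /etc/drbd-reactor.d
    do
        # -F treats the pattern as a fixed string; the surrounding quotes
        # keep "/etc/systemd/system" from matching the longer path.
        grep -q -F "\"$required\"" "$1" 2>/dev/null || echo "$required"
    done
}

# Example usage on a satellite node:
#   missing_ext_paths /etc/linstor/linstor_satellite.toml
```

If the file does not exist, all three paths are reported as missing.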

Configuring LINSTOR Gateway

LINSTOR Gateway has a server component that will run side-by-side and interface with the LINSTOR controller service.

- On the LINSTOR controller node only, create the unit file /etc/systemd/system/linstor-gateway.service with the following contents:

    [Unit]
    Description=LINSTOR Gateway
    After=network.target

    [Service]
    ExecStart=/usr/sbin/linstor-gateway server --addr ":8337"

    [Install]
    WantedBy=multi-user.target

- Reload systemd unit files so that systemd has knowledge about the linstor-gateway service that you just created. Then enable and start the newly defined linstor-gateway service:

    systemctl daemon-reload
    systemctl enable --now linstor-gateway

💡 TIP: You can skip these steps if you installed LINSTOR Gateway from a package repository. The linstor-gateway package ships with this service file. You only need to verify that the service is enabled and started.

For each LINSTOR satellite node, LINSTOR Gateway can perform a check for various requirements and dependencies by running linstor-gateway check-health on each node:

    Checking agent requirements.
    [✓] System Utilities
    [✓] LINSTOR
    [✓] drbd-reactor
    [✓] Resource Agents
    [✓] iSCSI
    [!] NVMe-oF
        ✗ The nvmetcli tool is not available
          exec: "nvmetcli": executable file not found in $PATH
          Please install the nvmetcli package
          Hint: nvmetcli is not (yet) packaged on all distributions. See https://git.infradead.org/users/hch/nvmetcli.git for instructions on how to manually install it.
    [✓] NFS

    FATA[0000] Health check failed: found 1 issues

Because the setup in this article does not use NVMe-oF (or iSCSI for that matter), you can safely ignore the warnings above. All requirements are satisfied for creating NFS exports.
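Because the health check exits with a failure even when only an unused component such as NVMe-oF is missing, it can be handy to filter its output. The following sketch assumes the output looks like the sample above (one `[✓]` or `[!]` marker per component, without color codes), which might differ between LINSTOR Gateway versions. The function name `unmet_requirements` is a hypothetical helper. It prints the components that are not marked `[✓]`, skipping iSCSI and NVMe-oF because this article does not use them.

```shell
# unmet_requirements: read `linstor-gateway check-health` output on stdin
# and print every component whose marker is not [✓], except for the
# iSCSI and NVMe-oF components, which this article does not use.
unmet_requirements() {
    grep '^[[:space:]]*\[' \
        | grep -v '^[[:space:]]*\[✓]' \
        | sed 's/^[[:space:]]*\[[^]]*][[:space:]]*//' \
        | grep -v -x -e 'iSCSI' -e 'NVMe-oF' \
        || true   # an empty result is not an error
}

# Example usage on a node:
#   linstor-gateway check-health 2>&1 | unmet_requirements
```

An empty result suggests that everything needed for NFS exports is in place on that node.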

Deploying NFS With One Command

At this point, you are ready to deploy NFS with a single command. Run the following command on the LINSTOR controller node to create a 100GiB NFS share with a virtual IP address of 192.168.222.140:

    linstor-gateway nfs create iso_storage 192.168.222.140/24 100G

Because of the virtual IP address, the highly available NFS export will always be available at 192.168.222.140:/srv/gateway-exports/iso_storage, regardless of which node is actively serving NFS.

💡 TIP: The --resource-group <rg_name> parameter is often provided with the above command to target a specific LINSTOR resource group for NFS exports. In a production environment, the NFS share might use different backing storage (such as slower, spinning storage) represented by a specific resource group.
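As an illustration of the tip above, the following sketch wraps the create command in a small function that adds `--resource-group` only when a resource group name is given. The function name `create_nfs_export` and the resource group name `nfs_rg` are hypothetical. Only the `linstor-gateway nfs create` command and the `--resource-group` parameter come from this article.

```shell
# create_nfs_export NAME SERVICE_IP SIZE [RESOURCE_GROUP]
# Creates an NFS export with LINSTOR Gateway. When RESOURCE_GROUP is
# given, it is passed through with --resource-group.
create_nfs_export() {
    name=$1
    service_ip=$2
    size=$3
    resource_group=${4:-}
    if [ -n "$resource_group" ]; then
        linstor-gateway nfs create "$name" "$service_ip" "$size" \
            --resource-group "$resource_group"
    else
        linstor-gateway nfs create "$name" "$service_ip" "$size"
    fi
}

# Example usage on the LINSTOR controller node
# ("nfs_rg" is a hypothetical resource group name):
#   create_nfs_export iso_storage 192.168.222.140/24 100G nfs_rg
```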

Checking NFS Status

To view the NFS exports created by LINSTOR Gateway, simply run linstor-gateway nfs list:

    +-------------+--------------------+---------------------+----------------------------------+---------------+
    | Resource    | Service IP         | Service state       | NFS export                       | LINSTOR state |
    +-------------+--------------------+---------------------+----------------------------------+---------------+
    | iso_storage | 192.168.222.140/24 | Started (proxmox-0) | /srv/gateway-exports/iso_storage | OK            |
    +-------------+--------------------+---------------------+----------------------------------+---------------+

You can view DRBD Reactor’s status on each node by running drbd-reactorctl status:

    /etc/drbd-reactor.d/linstor-gateway-nfs-iso_storage.toml:
    Promoter: Currently active on this node
    ● drbd-services@iso_storage.target
    ● ├─ drbd-promote@iso_storage.service
    ● ├─ ocf.rs@portblock_iso_storage.service
    ● ├─ ocf.rs@fs_cluster_private_iso_storage.service
    ● ├─ ocf.rs@fs_1_iso_storage.service
    ● ├─ ocf.rs@service_ip_iso_storage.service
    ● ├─ ocf.rs@nfsserver_iso_storage.service
    ● ├─ ocf.rs@export_1_0_iso_storage.service
    ● └─ ocf.rs@portunblock_iso_storage.service

Adding Highly Available NFS to Proxmox

Open the Proxmox web interface (https://<node_address>:8006) on any node and then make the following configuration changes.

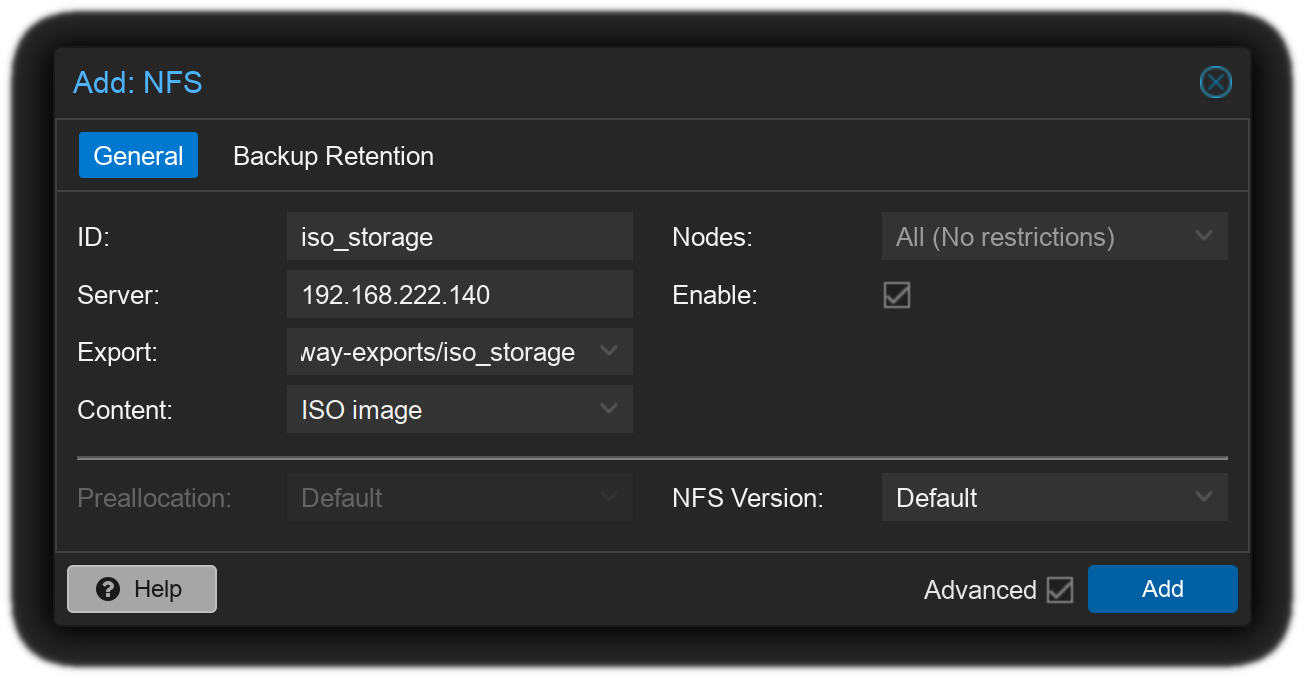

- Navigate to Datacenter -> Storage -> Add -> NFS.

- Enter NFS share settings:

| Key | Value |
|---|---|
| ID | iso_storage |
| Server | 192.168.222.140 |
| Export | /srv/gateway-exports/iso_storage |
| Content | ISO image |

In the example above you are creating an NFS share exclusively for ISO images. Of course, you can add a more generalized NFS share with additional content types such as backups, container templates, and more.
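If you prefer the command line to the web interface, the same storage entry can likely be added with the Proxmox `pvesm` storage manager. The sketch below is not part of the original walkthrough. It assumes the standard `pvesm add nfs` syntax, with `iso` as the content type identifier for ISO images. The function name `add_iso_nfs_storage` is a hypothetical wrapper.

```shell
# add_iso_nfs_storage ID SERVER EXPORT
# Adds an NFS storage entry for ISO images to the Proxmox storage
# configuration by using the pvesm command-line tool.
add_iso_nfs_storage() {
    storage_id=$1
    server=$2
    export_path=$3
    pvesm add nfs "$storage_id" \
        --server "$server" \
        --export "$export_path" \
        --content iso
}

# Example usage on one Proxmox node, with the values from this article:
#   add_iso_nfs_storage iso_storage 192.168.222.140 /srv/gateway-exports/iso_storage
```

Because the Proxmox storage configuration is shared across the cluster, running this on a single node should be enough.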

📝 NOTE: Selecting Disk image or Container content types is not recommended unless LINSTOR storage is only available to Proxmox via NFS. Instead, use the LINSTOR Proxmox plugin and configure each Proxmox node as a LINSTOR satellite. This allows disk images and persistent container data to be backed by individual DRBD® resources, which provides better performance than NFS.

💡 TIP: With hyperconverged storage, it might be necessary to delay the start of VMs and containers after booting Proxmox nodes. A virtual machine (VM) that uses disk images or ISO images from an unavailable NFS share will fail to boot. When powering up a combined Proxmox and LINSTOR cluster after a power loss event, NFS needs to be available before booting a VM that relies on it. To delay booting VMs by 30 seconds throughout the cluster, run the following command on all nodes, as documented in Proxmox node management:

    pvenode config set --startall-onboot-delay 30

Conclusion

Although configuring LINSTOR Gateway on Proxmox (Debian) nodes requires a few extra steps, the benefits of adding highly available NFS shares to a Proxmox Virtual Environment are worth the effort.

The extra components used in addition to a standard LINSTOR cluster include:

- NFS server components

- Open Cluster Framework (OCF) resource agents adapted from Pacemaker for managing virtual IP addresses and portblock resources to smooth over failover between nodes

- DRBD Reactor for use as a simplified cluster resource manager (CRM) to facilitate highly available NFS exports backed by DRBD

- LINSTOR Gateway to manage highly available iSCSI targets, NFS exports, and NVMe-oF targets by using LINSTOR and DRBD Reactor

LINSTOR Gateway gives your LINSTOR cluster an additional array of storage options. For example, we recently worked with a customer to deploy LINSTOR with Proxmox, but with a twist. Not only was LINSTOR providing highly available storage for their Proxmox VMs, LINSTOR Gateway allowed them to simultaneously provide storage to their VMware cluster via iSCSI.

If you have questions about how LINSTOR Gateway can make an impact in your environment, or if you want to let us know about how you are using it, reach out to us.

Changelog

2026-02-16:

- The LINSTOR Gateway server REST API port changed from 8080 to 8337 with release v2.0.0. See the LINSTOR Gateway GitHub project for details.

2024-06-20:

- Originally published post.