Hybrid cloud strategies are critical for businesses seeking flexibility, cost efficiency, and robust disaster recovery (DR) solutions. By leveraging both on-premise data centers and cloud platforms such as AWS, organizations can design systems to meet specific workload demands, while remaining nimble should demand, or disaster, require a change of action. This article will discuss some of the aspects of a hybrid cloud architecture that uses AWS and how the LINBIT SDS tool set can help you build a robust hybrid cloud solution with off-site disaster recovery and on-premise high availability (HA), for data critical to your applications and services.

Disaster recovery in hybrid clouds

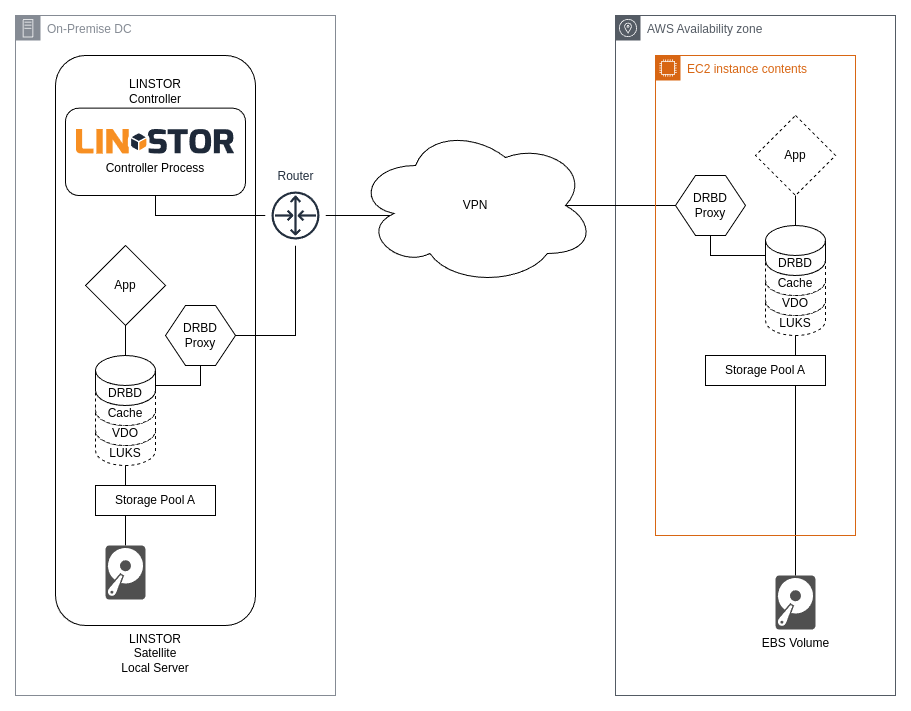

Disaster recovery plays a pivotal role in business continuity, and hybrid clouds ensure that data and operations are protected in case of unexpected disruptions to “either end” of a hybrid infrastructure. LINBIT SDS is an open source software-defined storage solution from LINBIT® that manages various block device layers in Linux systems. LINBIT SDS includes a software-defined storage (SDS) component and a block replication component. The SDS component, LINSTOR®, with its ability to abstract block storage across hybrid environments, allows businesses to create generic, unified, and effective DR plans for any application. The replication component, DRBD®, replicates critical data synchronously or asynchronously in real-time.

DRBD’s native asynchronous replication (protocol A) may be “good enough” for some users’ long-distance replication needs. However, another LINBIT product, DRBD Proxy, which is used in this article’s hybrid cloud architecture, extends the asynchronous data replication capabilities of DRBD even further. DRBD Proxy uses reserved system memory as a replication buffer, so that the Linux kernel’s relatively small TCP send buffers do not fill up and become a bottleneck to your application’s write performance.
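To get a feel for why a larger replication buffer matters, the following minimal sketch estimates how long a buffer can absorb a write burst that outpaces the replication link. The function name and all of the numbers are hypothetical and chosen only for illustration. They are not DRBD Proxy defaults or measurements.

```python
def seconds_until_buffer_full(buffer_bytes, write_rate, link_rate):
    """Return how long a replication buffer can absorb a write burst.

    write_rate and link_rate are in bytes per second. If the link keeps up
    with the writes, the buffer never fills and the result is infinity.
    """
    if buffer_bytes < 0 or write_rate < 0 or link_rate < 0:
        raise ValueError("sizes and rates must be non-negative")
    backlog_rate = write_rate - link_rate  # bytes per second piling up in the buffer
    if backlog_rate <= 0:
        return float("inf")
    return buffer_bytes / backlog_rate


MIB = 1024 * 1024

# Hypothetical numbers: a 1 GiB buffer, a 100 MiB/s write burst, a 20 MiB/s WAN link.
burst_seconds = seconds_until_buffer_full(1024 * MIB, 100 * MIB, 20 * MIB)
print(f"buffer absorbs the burst for {burst_seconds:.1f} s")
```

With these made-up numbers, the backlog grows at 80 MiB/s, so a buffer measured in kilobytes or a few megabytes would fill almost immediately, while a memory buffer measured in gigabytes buys several seconds of headroom.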

Controlling costs in hybrid clouds

Hybrid cloud environments can also enable organizations to better optimize and control costs. Hybrid clouds let organizations allocate workloads between on-premise infrastructure and cloud services, reducing cloud expenditures where possible while managing the total cost of ownership for on-premise workloads. By balancing the expenses of maintaining on-premise systems with the flexible scaling of cloud services, businesses can optimize costs and ensure efficiency.

Managing different storage platforms between on-premise and cloud environments presents significant challenges, including compatibility issues, inconsistent performance, and the complexity of coordinating data replication and recovery processes across diverse infrastructures. LINBIT SDS abstracts the underlying differences in storage and environment types and provides a unified platform for managing replicated block storage, enabling both HA and DR, between disparate environments with unique storage systems.

Understanding block storage in hybrid clouds

Unlike file storage, which organizes data in a hierarchical structure, or object storage, which packages data with metadata, block storage provides low-level access to data, making it ideal for applications that require high performance, such as databases and virtual machines. The key advantages of block storage over other types of storage include superior performance due to direct I/O operations, flexibility both in logical volume management and in layering virtual block device drivers to tailor feature sets, and broad compatibility with almost any application or operating system.

Comparing block storage to other storage types

When compared to file storage systems such as NFS or AWS EFS, which are well-suited for sharing files across multiple clients or instances but introduce latency due to file system locking, block storage such as AWS EBS is much faster and more efficient for high-demand workloads. Object storage solutions such as MinIO or AWS S3 are designed for scalability and distributed environments, but lack the performance and low-latency access needed for transactional applications. While object and file storage offer ease of use and scalability for specific cases, block storage remains the go-to for scenarios where speed, flexibility, and compatibility are critical.

EBS satisfies most users’ needs when it comes to block storage in AWS. However, in a hybrid cloud architecture, users still have to figure out how to manage their local storage. Managing local storage and AWS EBS separately leaves system engineers and architects to figure out how to make data portable between either end of their hybrid cloud. Backup and restore processes are time-consuming and introduce consistency concerns. By using LINSTOR to manage both on-premise and cloud block storage, specifically by layering DRBD over the storage, not only do those volumes become portable, but the data stored on them also gains an additional level of resilience.

Abstracting block storage by using LINSTOR

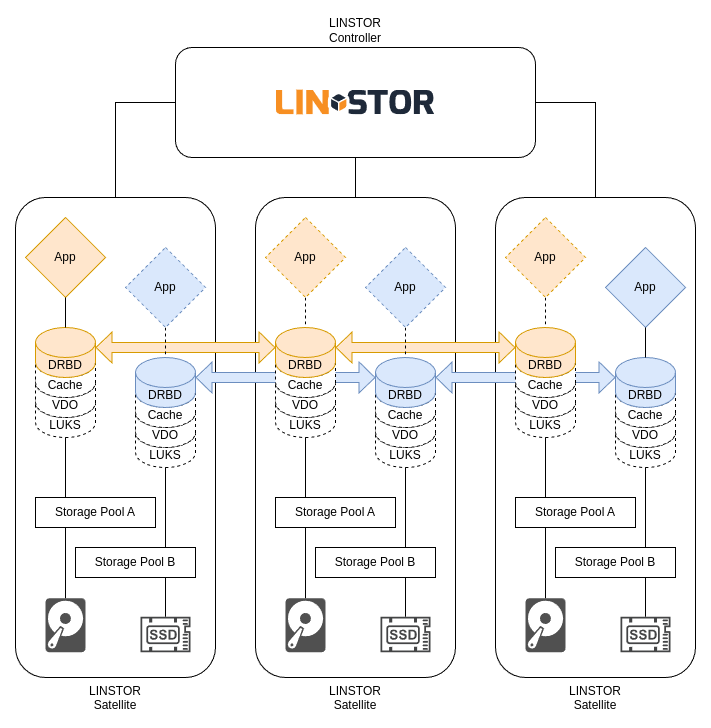

LINBIT SDS manages various open source block storage technologies commonly found in modern Linux distributions to apply enterprise grade features such as encryption at rest, caching, and deduplication to storage volumes across storage clusters. The LINBIT SDS software consists of a single LINSTOR controller service, and usually many LINSTOR satellite service instances running within a cluster of nodes. The node running the LINSTOR controller service is responsible for managing the LINSTOR cluster configurations and orchestrating operations to be carried out on the nodes running a LINSTOR satellite service instance. LINSTOR satellite nodes in the cluster either provide or access LINSTOR managed storage directly. Satellites are also responsible for provisioning logical volumes and layering block storage technologies, as instructed by the user through configurations made against the LINSTOR controller. Most LINBIT SDS users are interested in using LINSTOR to manage DRBD devices, enabling synchronous and asynchronous replication, in their block storage.

Once you’ve added physical devices to a LINBIT SDS cluster node, whether that node is a physical or virtual server in your local data center or a virtual server in the AWS cloud, management of the device and any logical volumes created from it is handled from a single unified management plane: the LINSTOR controller node. The same is true for monitoring the block storage. LINBIT SDS exposes metrics about the cluster and the storage resources it manages. Prometheus can scrape these metrics, which lets you monitor storage across a hybrid cloud environment in a uniform manner. The LINBIT GUI for LINSTOR also offers users a single pane of glass for observing and managing storage resources in the cluster.
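As a rough illustration of what consuming such metrics could look like outside of Prometheus, the following sketch parses a few lines of Prometheus text-format output. The sample text is made up for this example, and the metric and label names in it are assumptions rather than a copy of real LINSTOR controller output. The parser only handles simple samples without timestamps, or commas inside label values.

```python
from collections import defaultdict

# Illustrative sample only: metric and label names are assumptions,
# not copied from a real LINSTOR controller.
SAMPLE_METRICS = """\
# HELP linstor_storage_pool_capacity_free_bytes Free capacity of the storage pool
# TYPE linstor_storage_pool_capacity_free_bytes gauge
linstor_storage_pool_capacity_free_bytes{node="linbit-dr",storage_pool="lvm-pool"} 1.1811160064e+10
linstor_storage_pool_capacity_free_bytes{node="linbit-local",storage_pool="lvm-pool"} 1.1811160064e+10
"""


def parse_samples(text):
    """Parse simple Prometheus text-format samples into {metric: [(labels, value)]}."""
    samples = defaultdict(list)
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        name_and_labels, _, value = line.rpartition(" ")
        name, _, label_text = name_and_labels.partition("{")
        labels = {}
        for pair in label_text.rstrip("}").split(","):
            if pair:
                key, _, raw = pair.partition("=")
                labels[key] = raw.strip('"')
        samples[name].append((labels, float(value)))
    return dict(samples)


free = parse_samples(SAMPLE_METRICS)["linstor_storage_pool_capacity_free_bytes"]
for labels, value in free:
    print(f"{labels['node']}: {value / 2**30:.1f} GiB free")
```

Because both the on-premise and AWS-hosted nodes report through the same controller, a single loop like this covers storage on both sides of the hybrid cloud.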

Implementing LINSTOR in AWS for hybrid clouds

Setting up LINSTOR for an on-premise and AWS hybrid cloud could be approached in different ways depending on your organizational goals. To maximize portability and flexibility, I will take an approach in this article that stretches the LINSTOR cluster and its DRBD devices between the on-premise and AWS sides of an example hybrid cloud. This is often called a “stretched cluster” architecture. LINSTOR will configure DRBD Proxy to enable long distance asynchronous replication between the on-premise and AWS hosted LINSTOR satellites. The described architecture will look like this:

LINBIT SDS needs an unused block device, network connectivity between cluster nodes, and the LINBIT SDS software stack installed to enable block replication in a hybrid cloud environment. CPU and memory requirements are relatively low when compared to other popular software-defined storage solutions. In this example, a WireGuard VPN is used between cluster nodes for DRBD connectivity. However, AWS Site-to-Site VPN connections are recommended when possible.

AWS cloud instance details

The EC2 instances selected were t3.small instances, with an additional EBS volume attached for the LINSTOR storage pool. The t3.small instance has two vCPUs and 2 GiB of memory, and the additional EBS volume is 16 GiB. The official Ubuntu 24.04 Amazon Machine Image (AMI) was used as the base for the instance.

Assuming a LINSTOR controller node is in the on-premise data center, the AWS security group will have the following rules applied, where x.x.x.x/32 is the IP address of your on-premise Internet gateway:

| Purpose | Rule Type | Protocol | From Port | To Port | Permitted CIDR Blocks |
|---|---|---|---|---|---|
| SSH | Ingress | TCP | 22 | 22 | x.x.x.x/32 |
| WireGuard VPN | Ingress | UDP | 51820 | 51820 | x.x.x.x/32 |
| DRBD Port Range | Ingress | TCP | 7000 | 7999 | x.x.x.x/32 |
| LINSTOR Satellite | Ingress | TCP | 3366 | 3367 | x.x.x.x/32 |
| Web App | Ingress | TCP | 8080 | 8080 | x.x.x.x/32 |
| Everything Out | Egress | All (-1) | 0 | 0 | 0.0.0.0/0 |

TIP: Because the example in this blog uses VPN addresses for LINSTOR and DRBD connectivity, the DRBD port range and LINSTOR satellite ports are not technically required.
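To sanity-check a rule set like this before applying it, you could model the ingress rules in a few lines of Python. The following is a minimal sketch, not an AWS API call. The class and function names are hypothetical, and 203.0.113.10 is a documentation address standing in for the x.x.x.x placeholder. Because WireGuard transports its traffic over UDP, the sketch models the VPN rule as UDP.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    purpose: str
    protocol: str
    from_port: int
    to_port: int
    cidr: str

    def permits(self, protocol, port, source_ip):
        """Return True if this rule allows the given protocol, port, and source."""
        return (
            protocol.lower() == self.protocol
            and self.from_port <= port <= self.to_port
            and ipaddress.ip_address(source_ip) in ipaddress.ip_network(self.cidr)
        )


# 203.0.113.10 is a documentation address standing in for x.x.x.x.
GATEWAY = "203.0.113.10/32"
RULES = [
    IngressRule("SSH", "tcp", 22, 22, GATEWAY),
    IngressRule("WireGuard VPN", "udp", 51820, 51820, GATEWAY),  # WireGuard runs over UDP
    IngressRule("DRBD Port Range", "tcp", 7000, 7999, GATEWAY),
    IngressRule("LINSTOR Satellite", "tcp", 3366, 3367, GATEWAY),
    IngressRule("Web App", "tcp", 8080, 8080, GATEWAY),
]


def is_permitted(protocol, port, source_ip):
    """Return True if any ingress rule allows the connection."""
    return any(rule.permits(protocol, port, source_ip) for rule in RULES)


print(is_permitted("tcp", 7000, "203.0.113.10"))   # DRBD from the on-premise gateway
print(is_permitted("tcp", 7000, "198.51.100.7"))   # DRBD from an unrelated address
```

A check like this makes it easy to confirm that only your on-premise gateway can reach the DRBD and LINSTOR ports.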

The following Terraform module, which describes the infrastructure as code, was used to create the AWS cloud hosted LINSTOR satellite:

    provider "aws" {
      region = "us-west-2"
    }

    # EC2 instance that hosts the AWS-side LINSTOR satellite
    resource "aws_instance" "drbd_dr" {
      ami                    = "ami-05134c8ef96964280" # AMI for Ubuntu 24.04 in us-west-2
      instance_type          = "t3.small"
      key_name               = "aws-keypair"
      vpc_security_group_ids = [aws_security_group.drbd_dr.id]

      root_block_device {
        volume_size = 40
        encrypted   = true
      }

      # Additional volume for the LINSTOR storage pool
      ebs_block_device {
        device_name = "/dev/nvme1n1"
        volume_type = "standard"
        volume_size = 16
        encrypted   = true
      }

      tags = {
        Name = "terraform-drbd-dr"
      }
    }

    resource "aws_security_group" "drbd_dr" {
      name = "terraform-drbd-dr"

      # Web app
      ingress {
        from_port   = 8080
        to_port     = 8080
        protocol    = "tcp"
        cidr_blocks = ["x.x.x.x/32"]
      }

      # SSH
      ingress {
        from_port   = 22
        to_port     = 22
        protocol    = "tcp"
        cidr_blocks = ["x.x.x.x/32"]
      }

      # WireGuard VPN (WireGuard uses UDP)
      ingress {
        from_port   = 51820
        to_port     = 51820
        protocol    = "udp"
        cidr_blocks = ["x.x.x.x/32"]
      }

      # DRBD port range
      ingress {
        from_port   = 7000
        to_port     = 7999
        protocol    = "tcp"
        cidr_blocks = ["x.x.x.x/32"]
      }

      # LINSTOR satellite
      ingress {
        from_port   = 3366
        to_port     = 3367
        protocol    = "tcp"
        cidr_blocks = ["x.x.x.x/32"]
      }

      # Allow all outbound traffic
      egress {
        from_port   = 0
        to_port     = 0
        protocol    = "-1"
        cidr_blocks = ["0.0.0.0/0"]
      }
    }

    output "public_ip" {
      value       = aws_instance.drbd_dr.public_ip
      description = "The public IP address of the AWS hosted instance"
    }

On the AWS-hosted EC2 instance, the linbit-manage-node.py node registration and repository configuration Python script was used to configure the LINBIT customer repositories. You must have an active customer or evaluation account with LINBIT to access LINBIT repositories. If you’re not a current customer, or do not have an active evaluation account, you can reach out to LINBIT directly to establish access. After configuring the repositories, the LINBIT SDS stack packages were installed:

    # curl -LO my.linbit.com/linbit-manage-node.py
    # python3 linbit-manage-node.py
    # apt update
    # apt install drbd-dkms drbd-utils linstor-satellite drbd-proxy

Create an LVM volume group to be used as the storage provider for the LINSTOR storage pool:

    # vgcreate drbdpool /dev/nvme1n1

LINSTOR will create logical volumes to back the DRBD devices it creates and configures within this volume group.

On-premise instance details

The on-premise instance was created using Vagrant, to roughly match the configuration and size of the EC2 instance. An Ubuntu 24.04 image was used to create a 2-CPU VM with 2 GiB of memory, and an additional 16 GiB volume for the LINSTOR storage pool.

The following Vagrantfile, which describes the infrastructure as code, was used to create the on-premise LINSTOR controller node:

    Vagrant.require_version ">= 2.2.8"
    ENV['VAGRANT_EXPERIMENTAL'] = "disks"

    BOX = 'generic/ubuntu2404'

    Vagrant.configure("2") do |config|
      config.vm.provider "libvirt"

      VM_CPUS = 2
      VM_MEMORY = 2048
      DISK_SIZE = 16 # in GB
      NETWORK = "192.168.221.170"

      config.vm.define "linbit-local" do |linbit|
        config.vm.box = "#{BOX}"
        config.ssh.insert_key = false
        linbit.vm.network "private_network", ip: "#{NETWORK}", network_name: "management", created: false

        # Add extra disk using new "experimental" disks feature (vagrant >= 2.2.8)
        linbit.vm.disk :disk, size: "#{DISK_SIZE}GB", name: "sat_disk"

        linbit.vm.provider "libvirt" do |l|
          l.cpus=VM_CPUS
          l.memory=VM_MEMORY
          l.disk_driver :cache => 'unsafe'
          l.storage :file, :cache => 'unsafe', :size => "#{DISK_SIZE}G"
        end
      end
    end

On the on-premise instance, the linbit-manage-node.py node registration and repository configuration Python script was used to configure LINBIT customer repositories. After this, the LINBIT SDS stack packages were installed by using the following commands:

    # curl -LO my.linbit.com/linbit-manage-node.py
    # python3 linbit-manage-node.py
    # apt update
    # apt install drbd-dkms drbd-utils linstor-satellite linstor-controller linstor-client drbd-proxy

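Similar to the configuration done on the AWS side, create an LVM volume group to be used as the storage provider for the LINSTOR storage pool. The name of the additional disk might differ on your VM (the lsblk command lists the available block devices), so adjust the device path if needed:

    # vgcreate drbdpool /dev/nvme1n1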

LINSTOR will create logical volumes to back the DRBD devices it creates and configures within this volume group.

Configuring the LINSTOR cluster

You can now configure the LINSTOR cluster to support creating long-distance replicated block devices for use in a hybrid cloud environment.

To begin configuring the LINSTOR cluster, you need to add the nodes to the cluster. You can do this by entering LINSTOR client commands from the LINSTOR controller node:

    # linstor node create linbit-dr 10.0.0.2
    # linstor node create --node-type combined linbit-local 10.0.0.1
    # linstor node list
    ╭───────────────────────────────────────────────────────────╮
    ┊ Node         ┊ NodeType  ┊ Addresses             ┊ State  ┊
    ╞═══════════════════════════════════════════════════════════╡
    ┊ linbit-dr    ┊ SATELLITE ┊ 10.0.0.2:3366 (PLAIN) ┊ Online ┊
    ┊ linbit-local ┊ COMBINED  ┊ 10.0.0.1:3366 (PLAIN) ┊ Online ┊
    ╰───────────────────────────────────────────────────────────╯

NOTE: The linbit-local node is added as a combined type node because it serves as both a LINSTOR controller and satellite node.

After creating the LINSTOR nodes, you can create the LINSTOR storage pool. This example uses an LVM volume group named drbdpool to create a LINSTOR storage pool named lvm-pool on both nodes:

    # linstor storage-pool create lvm linbit-dr lvm-pool drbdpool
    # linstor storage-pool create lvm linbit-local lvm-pool drbdpool
    # linstor storage-pool list --storage-pools lvm-pool
    ╭──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╮
    ┊ StoragePool ┊ Node         ┊ Driver ┊ PoolName ┊ FreeCapacity ┊ TotalCapacity ┊ CanSnapshots ┊ State ┊ SharedName            ┊
    ╞══════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════╡
    ┊ lvm-pool    ┊ linbit-dr    ┊ LVM    ┊ drbdpool ┊    16.00 GiB ┊     16.00 GiB ┊ False        ┊ Ok    ┊ linbit-dr;lvm-pool    ┊
    ┊ lvm-pool    ┊ linbit-local ┊ LVM    ┊ drbdpool ┊    16.00 GiB ┊     16.00 GiB ┊ False        ┊ Ok    ┊ linbit-local;lvm-pool ┊
    ╰──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

Next, set the Site properties on the LINSTOR nodes and the DrbdProxy/AutoEnable property on the LINSTOR controller to ensure that DRBD devices created by LINSTOR are configured to use DRBD Proxy when replicas are assigned to nodes in different sites.

    # linstor node set-property linbit-dr Site aws
    # linstor node set-property linbit-local Site local
    # linstor controller set-property DrbdProxy/AutoEnable true

Finally, use a combination of auxiliary node properties and resource group options to ensure that when resources are created from the dr-group resource group, LINSTOR creates exactly two replicas of the volume, with one replica in each data center.

    # linstor node set-property --aux linbit-dr dc=aws
    # linstor node set-property --aux linbit-local dc=local
    # linstor resource-group create --storage-pool=lvm-pool --place-count=2 --replicas-on-different=dc dr-group
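If you expect to repeat this setup for more nodes or sites, it can help to generate the commands from a small inventory rather than typing them by hand. The following sketch only builds and prints the same LINSTOR client commands shown in this section, and it does not run them. The inventory layout and the function name are hypothetical.

```python
import shlex

# Inventory mirroring the example cluster in this article (layout is hypothetical).
NODES = [
    {"name": "linbit-dr", "ip": "10.0.0.2", "site": "aws", "node_type": None},
    {"name": "linbit-local", "ip": "10.0.0.1", "site": "local", "node_type": "combined"},
]


def bootstrap_commands(nodes, pool="lvm-pool", volume_group="drbdpool", group="dr-group"):
    """Return the LINSTOR client commands from this section as argument lists."""
    cmds = []
    for node in nodes:
        create = ["linstor", "node", "create"]
        if node["node_type"]:
            create += ["--node-type", node["node_type"]]
        cmds.append(create + [node["name"], node["ip"]])
    for node in nodes:
        cmds.append(["linstor", "storage-pool", "create", "lvm", node["name"], pool, volume_group])
    for node in nodes:
        cmds.append(["linstor", "node", "set-property", node["name"], "Site", node["site"]])
    cmds.append(["linstor", "controller", "set-property", "DrbdProxy/AutoEnable", "true"])
    for node in nodes:
        cmds.append(["linstor", "node", "set-property", "--aux", node["name"], f"dc={node['site']}"])
    cmds.append([
        "linstor", "resource-group", "create",
        f"--storage-pool={pool}", "--place-count=2", "--replicas-on-different=dc", group,
    ])
    return cmds


for cmd in bootstrap_commands(NODES):
    print(shlex.join(cmd))
```

Keeping the commands as argument lists means you could later pass them to `subprocess.run` on the controller node, after reviewing the printed output.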

With this LINSTOR configuration, hybrid cloud volumes asynchronously replicated between the on-premise and AWS-hosted nodes are easily created using a single command:

    # linstor resource-group spawn dr-group dr-res-0 5G

You can show the resources in your LINSTOR cluster by entering the following command:

    # linstor resource list

Output will show the dr-res-0 resource on your on-premise and AWS nodes:

    ╭───────────────────────────────────────────────────────────────────────────────────────────────╮
    ┊ ResourceName ┊ Node         ┊ Port ┊ Usage  ┊ Conns ┊             State ┊ CreatedOn           ┊
    ╞═══════════════════════════════════════════════════════════════════════════════════════════════╡
    ┊ dr-res-0     ┊ linbit-dr    ┊ 7000 ┊ Unused ┊ Ok    ┊          UpToDate ┊ 2024-09-12 19:49:54 ┊
    ┊ dr-res-0     ┊ linbit-local ┊ 7000 ┊ Unused ┊ Ok    ┊ SyncTarget(0.78%) ┊ 2024-09-12 19:49:53 ┊
    ╰───────────────────────────────────────────────────────────────────────────────────────────────╯

NOTE: Once the resource becomes fully synchronized between nodes, the State will show as UpToDate on both nodes. At that point the DRBD device is a bit-for-bit exact replica.

DRBD version 9 has an auto-promote feature enabled by default. This allows users to access the DRBD device without explicitly promoting the device to the Primary role first. Provided that no other peers are holding the DRBD device open, the DRBD device can be automatically promoted upon access request. For example, when creating a file system, you do not have to explicitly promote the DRBD device to Primary. After the file system is created, you can simply mount the DRBD device. Mounting automatically promotes the device and holds it open, which prevents it from being promoted elsewhere in the cluster.

    # drbdadm status
    dr-res-0 role:Secondary
      disk:UpToDate
      linbit-local role:Secondary
        peer-disk:UpToDate

    # mkfs.ext4 /dev/drbd1000
    # mkdir -p /mnt/data
    # mount /dev/drbd1000 /mnt/data
    # drbdadm status
    dr-res-0 role:Primary
      disk:UpToDate
      linbit-local role:Secondary
        peer-disk:UpToDate

Unmounting the DRBD device releases the device, which results in its demotion to the Secondary role, allowing it to be promoted to Primary elsewhere in the cluster where the file system could then be accessed.
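Before mounting the device on another node, you might want a quick check that no node currently holds the Primary role and that all replicas are up to date. The following sketch does that by scanning text shaped like the `drbdadm status` output shown earlier. The function name is hypothetical, the sketch assumes output for a single resource, and it is not an official DRBD tool.

```python
import re

# Sample text copied from the status output format shown in this article.
SAMPLE_STATUS = """\
dr-res-0 role:Secondary
  disk:UpToDate
  linbit-local role:Secondary
    peer-disk:UpToDate
"""


def safe_to_mount(status_text):
    """Return True when every replica is UpToDate and no node is Primary."""
    roles = re.findall(r"\brole:(\w+)", status_text)
    disks = re.findall(r"\b(?:peer-)?disk:(\w+)", status_text)
    return (
        bool(roles)
        and bool(disks)
        and all(role == "Secondary" for role in roles)
        and all(disk == "UpToDate" for disk in disks)
    )


print(safe_to_mount(SAMPLE_STATUS))
```

In practice, you could feed the function the captured output of `drbdadm status` for the resource that you are about to move.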

Hybrid cloud application migration process

Using LINBIT SDS as an abstraction layer for block storage in a hybrid cloud environment allows for quick migrations of applications between the on-premise and AWS sides of your cloud. Because data is replicated in real time, you can keep both the recovery point objective (RPO) and the recovery time objective (RTO) low.

Using the example LINSTOR resource, dr-res-0, created in the previous section, and a fictitious Python-based web app, you can move the application between sites without losing any persistent data. The following example pattern demonstrates such a migration.

The web application will start on the local node and then you will migrate it to the AWS-hosted node. First, create some dummy data and use Python to serve that as a webpage to clients:

    # mkdir -p /mnt/data
    # mount /dev/drbd1000 /mnt/data
    # cd /mnt/data
    # echo "hello world" > index.html
    # echo "linbit-local log sha1sum: $(sha1sum /var/log/syslog)" >> index.html
    # python3 -m http.server 8080
    Serving HTTP on 0.0.0.0 port 8080 (http://0.0.0.0:8080/) ...

From a separate client system, you can request the webpage being served from the local node’s application:

    $ curl linbit-local:8080
    hello world
    linbit-local log sha1sum: 83363daf5331f386740f572fd3bcbbf4c9057ffb /var/log/syslog

To begin moving this “web app” to the DR node hosted in AWS, stop the web server (Ctrl+c) and unmount the DRBD device on the local node:

    # python3 -m http.server 8080
    Serving HTTP on 0.0.0.0 port 8080 (http://0.0.0.0:8080/) ...
    192.168.111.105 - - [08/Oct/2024 06:02:02] "GET / HTTP/1.1" 200 -
    ^C
    Keyboard interrupt received, exiting.
    # cd ~/
    # umount /mnt/data

To start the application on the AWS-hosted node, thanks to the DRBD auto-promote feature, you only need to mount the device, and start the web app. The data that was written to the device while the application was running on the local node is still there, and any new data written while the application is running on the AWS hosted node is replicated back to the local node:

    # mount /dev/drbd1000 /mnt/data
    # cd /mnt/data
    # echo "linbit-dr log sha1sum: $(sha1sum /var/log/syslog)" >> index.html
    # python3 -m http.server 8080
    Serving HTTP on 0.0.0.0 port 8080 (http://0.0.0.0:8080/) ...

Again, from a separate client system, you can request the webpage which is now being served from the AWS-hosted node:

    $ curl 44.243.30.35:8080
    hello world
    linbit-local log sha1sum: 83363daf5331f386740f572fd3bcbbf4c9057ffb /var/log/syslog
    linbit-dr log sha1sum: 65f19f17bc14d272058ab739bce3b2d95eada746 /var/log/syslog

Here, output will show the original data from when the application was running locally, and the new data added from the AWS-hosted node. This example is simple, but it should demonstrate the portability of applications by using LINBIT SDS as an abstraction layer over their on-premise and AWS EBS storage. For real-life applications, you might use an AWS Elastic Load Balancer (ELB) or Route53 to route client traffic to your application regardless of where it is active.
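The manual steps in this section follow a fixed order: stop the application, release the device on the source node, then mount the device and start the application on the target node. As a minimal sketch, that ordering could be captured as data that a runbook or automation tool consumes. The function name and the plan format are hypothetical, and nothing here executes commands on any host.

```python
def migration_plan(source, target, device="/dev/drbd1000", mountpoint="/mnt/data", port=8080):
    """Return the ordered (host, action) steps for moving the demo web app."""
    if source == target:
        raise ValueError("source and target must be different nodes")
    return [
        (source, "stop the web server (Ctrl+c)"),
        (source, f"umount {mountpoint}"),  # releases the device, demoting it to Secondary
        (target, f"mount {device} {mountpoint}"),  # auto-promote makes this node Primary
        (target, f"cd {mountpoint} && python3 -m http.server {port}"),
    ]


for host, action in migration_plan("linbit-local", "linbit-dr"):
    print(f"[{host}] {action}")
```

The important design point is the ordering: the source node must unmount the device before the target node mounts it, because only one node should hold the device open at a time.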

Conclusion

I hope this blog post has demonstrated how LINBIT SDS can simplify disaster recovery (DR) by abstracting block storage across on-premise and AWS environments. This flexibility allows seamless migration and application portability, ensuring that data is consistently available and synchronized between locations. By leveraging LINBIT SDS, organizations can reduce the complexity of DR planning and enhance their resilience in hybrid cloud environments.

If you’re looking to optimize your DR strategy with a reliable and efficient hybrid cloud solution, consider exploring LINBIT SDS for your own infrastructure. For more information, check out LINBIT documentation and web resources to learn how LINBIT SDS can support your cloud storage and disaster recovery needs.

Additional Resources

- Links to LINBIT SDS Documentation and How-to Guides
  - How-to Guide and Reference Architecture for Using LINBIT SDS in EKS.
  - Automating Failovers in Hybrid Clouds Using DRBD, Pacemaker, and Booth.
  - Other AWS and LINBIT Guides and Blogs
- Case Studies and Success Stories
  - Netflix Billing Migration to AWS by using DRBD.
  - Payliance HA Payment Processing in AWS by using DRBD.
- Contact Information for Further Assistance
  - LINBIT offers direct support and consultation services where you can reach out for personalized advice and technical support.
  - Inquire through their contact page.