How LINSTOR® is typically deployed into Kubernetes clusters has changed a lot over the years. The LINSTOR Operator for Kubernetes is now at a v2 release, but the CSI plugin is still the bridge between Kubernetes and LINSTOR’s replicated block storage. While LINBIT® encourages its customers and users to use the LINSTOR Operator to deploy containerized LINSTOR clusters into Kubernetes, it’s possible to run LINSTOR outside of Kubernetes with only the LINSTOR CSI plugin components running in Kubernetes.

There is plenty of documentation on how the LINSTOR Operator can help you deploy LINSTOR in Kubernetes. However, “CSI only” instructions aren’t discussed much outside of the CSI plugin’s GitHub repository. This blog post sheds light on this alternative architecture for when an administrator wants to deploy LINSTOR outside Kubernetes and integrate it with Kubernetes through the CSI plugin.

Prerequisites and Assumptions

To keep this post as concise as possible, I’ll assume you have already done the following:

- Created and initialized a LINSTOR cluster

- Created a storage pool within LINSTOR

- Created and initialized a Kubernetes cluster

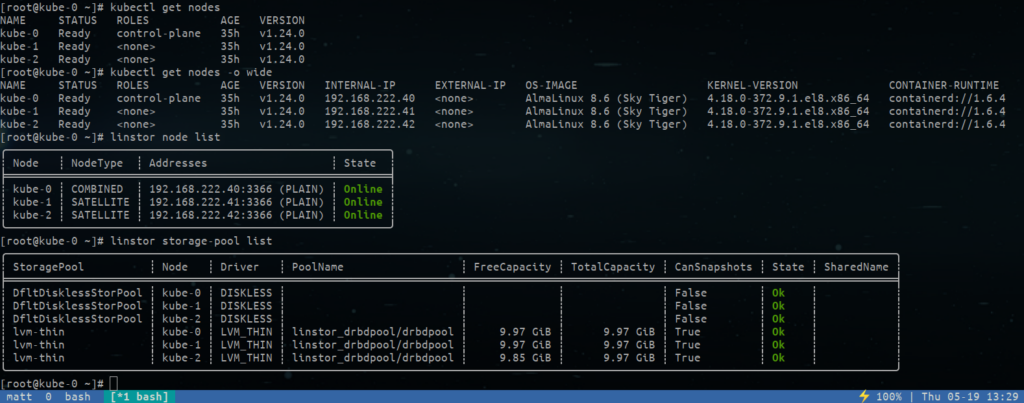

There are many ways to satisfy the prerequisites above. I’ll assume that you created a three-node LINSTOR cluster on the same nodes where you created and initialized the Kubernetes cluster. I’ll also assume that the LINSTOR storage pool you created is an LVM_THIN type named lvm-thin, although it is also possible to use a storage pool backed by ZFS.
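Before moving on, it can help to confirm that the prerequisites are actually in place. The following is a minimal sketch, assuming the `linstor` client and `kubectl` are both installed and configured on the machine you run it from. The `check_prerequisites` function name is made up for this post.

```shell
# Hypothetical helper: lists LINSTOR nodes, LINSTOR storage pools, and
# Kubernetes nodes, stopping at the first command that fails.
check_prerequisites() {
    linstor node list || return 1
    linstor storage-pool list || return 1
    kubectl get nodes || return 1
}
```

With the setup assumed here, calling `check_prerequisites` should list three nodes in each cluster and show the lvm-thin storage pool.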

Preparing and Installing the LINSTOR CSI Plugin

Once you’ve initialized your Kubernetes and LINSTOR clusters, you can deploy the LINSTOR CSI plugin configuration into Kubernetes.

The commands below download an example configuration from the LINSTOR CSI plugin’s GitHub repository, replace the placeholder LINSTOR_CONTROLLER_URL string in the example, and apply the configuration to your Kubernetes cluster. Set the LINSTOR_CONTROLLER_URL variable to the URL, including the DNS name or IP address and TCP port number, that points to your LINSTOR controller node’s REST API. By default, the LINSTOR controller node’s endpoint can be accessed using HTTP on port 3370. For a LINSTOR controller node with a DNS name of linstor-controller.example.com, the REST API endpoint would be http://linstor-controller.example.com:3370. With that value, you could use the following commands to update the placeholder in the example configuration and deploy the LINSTOR CSI driver into Kubernetes.

$ LINSTOR_CONTROLLER_URL=http://linstor-controller.example.com:3370
$ kubectl kustomize http://github.com/piraeusdatastore/linstor-csi/examples/k8s/deploy \
  | sed "s#LINSTOR_CONTROLLER_URL#$LINSTOR_CONTROLLER_URL#" \
  | kubectl apply --server-side -f -
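To see what the `sed` step does, you can run the same substitution against a single sample line. This is only a sketch: the `value:` line below is a made-up stand-in for manifest content, not a line copied from the example configuration. The expression uses `#` as its delimiter because the URL itself contains `/` characters.

```shell
LINSTOR_CONTROLLER_URL=http://linstor-controller.example.com:3370

# Hypothetical manifest line containing the placeholder string.
sample_line='value: "LINSTOR_CONTROLLER_URL"'

# Same sed expression as in the deployment command above.
rendered_line=$(printf '%s\n' "$sample_line" \
    | sed "s#LINSTOR_CONTROLLER_URL#$LINSTOR_CONTROLLER_URL#")

printf '%s\n' "$rendered_line"
# prints: value: "http://linstor-controller.example.com:3370"
```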

💡 TIP: If you’ve configured your LINSTOR controller service for High Availability using DRBD Reactor, you should use the virtual IP address for your LINSTOR endpoint.

Shortly after, you should see your LINSTOR CSI plugin pods running in the piraeus-datastore namespace.

$ kubectl get pods --all-namespaces
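If you would rather not scan every namespace, you can narrow the check. The following is a minimal sketch, assuming the plugin pods land in the piraeus-datastore namespace as described above. The `wait_for_csi_pods` name and the 120-second timeout are arbitrary choices for this example.

```shell
# Hypothetical helper: block until every pod in the namespace reports Ready,
# then list the pods. The namespace defaults to piraeus-datastore.
wait_for_csi_pods() {
    namespace="${1:-piraeus-datastore}"
    kubectl wait --for=condition=Ready pods --all \
        --namespace "$namespace" --timeout=120s || return 1
    kubectl get pods --namespace "$namespace"
}
```

Calling `wait_for_csi_pods` with no argument checks the default namespace. Pass a different namespace name as the first argument if your deployment differs.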

Defining Kubernetes Storage Classes for LINSTOR

With your LINSTOR cluster integrated into Kubernetes, you can now define how you’d like LINSTOR’s persistent volumes to be provisioned by Kubernetes users. This is done by using Kubernetes storage classes and the LINSTOR parameters specified on each.

LINSTOR storage classes, in their simplest form, contain the LINSTOR storage pool’s name and the number of replicas that LINSTOR should create for each persistent volume. The following example storage class definitions create three separate storage classes that define 1, 2, and 3 replicas from the lvm-thin LINSTOR storage pool.

$ cat << EOF > linstor-sc.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: "linstor-csi-lvm-thin-r1"
provisioner: linstor.csi.linbit.com
parameters:
  linstor.csi.linbit.com/autoPlace: "1"
  linstor.csi.linbit.com/storagePool: "lvm-thin"
reclaimPolicy: Delete
allowVolumeExpansion: true
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: "linstor-csi-lvm-thin-r2"
provisioner: linstor.csi.linbit.com
parameters:
  linstor.csi.linbit.com/autoPlace: "2"
  linstor.csi.linbit.com/storagePool: "lvm-thin"
reclaimPolicy: Delete
allowVolumeExpansion: true
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: "linstor-csi-lvm-thin-r3"
provisioner: linstor.csi.linbit.com
parameters:
  linstor.csi.linbit.com/autoPlace: "3"
  linstor.csi.linbit.com/storagePool: "lvm-thin"
reclaimPolicy: Delete
allowVolumeExpansion: true
EOF

Finally, create the LINSTOR storage classes using kubectl.

$ kubectl apply -f linstor-sc.yaml

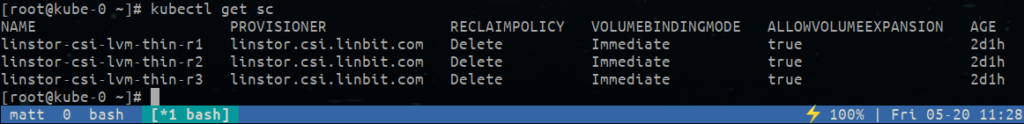

After applying this configuration, you will have three new storage classes defined in Kubernetes.
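Because the three definitions differ only in their name and replica count, you could also generate them in a loop. The following is a sketch rather than the method used in this post. `render_storage_class` and the `linstor-sc-generated.yaml` file name are made up for illustration, and the manifest fields are the same ones shown above.

```shell
# Hypothetical helper: print one StorageClass manifest for the given
# replica count ($1) and LINSTOR storage pool name ($2).
render_storage_class() {
    replicas="$1"
    pool="$2"
    cat << EOF
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: "linstor-csi-${pool}-r${replicas}"
provisioner: linstor.csi.linbit.com
parameters:
  linstor.csi.linbit.com/autoPlace: "${replicas}"
  linstor.csi.linbit.com/storagePool: "${pool}"
reclaimPolicy: Delete
allowVolumeExpansion: true
EOF
}

# Write the same three classes as the hand-written file.
for count in 1 2 3; do
    render_storage_class "$count" lvm-thin
done > linstor-sc-generated.yaml
```

You could then apply the generated file with `kubectl apply -f linstor-sc-generated.yaml`. If your storage pool has a different name, pass that name as the second argument instead.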

Create a PVC and Pod for Testing your Deployment

To test your deployment, define and create a PVC in Kubernetes that specifies one of the three storage classes created in the section above. The PVC manifest below uses the three-replica storage class:

$ cat << EOF > linstor-pvc.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: demo-vol-claim-0
spec:
  storageClassName: linstor-csi-lvm-thin-r3
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 4G
EOF

$ kubectl apply -f linstor-pvc.yaml

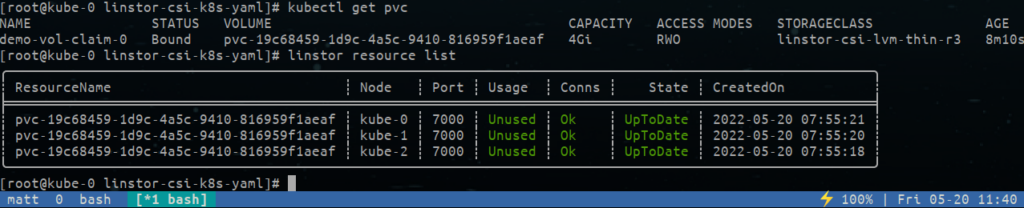

After applying the configuration, you will have a LINSTOR-provisioned PVC in Kubernetes and a new, unused resource in LINSTOR.
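One detail worth knowing about the `storage: 4G` request: Kubernetes treats `G` as a decimal suffix and `Gi` as a binary one, so the two are not the same size. The arithmetic below just spells out the difference. The variable names are illustrative only.

```shell
# 4G  = 4 * 1000^3 bytes (decimal suffix)
# 4Gi = 4 * 1024^3 bytes (binary suffix)
bytes_4g=$((4 * 1000 * 1000 * 1000))
bytes_4gi=$((4 * 1024 * 1024 * 1024))

echo "4G  = $bytes_4g bytes"
echo "4Gi = $bytes_4gi bytes"
echo "difference = $((bytes_4gi - bytes_4g)) bytes"
```

The request in this post asks for 4,000,000,000 bytes. A `4Gi` request would ask for 4,294,967,296 bytes instead.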

Next, define and deploy a pod that will use the LINSTOR-backed PVC.

$ cat << EOF > demo-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: demo-pod-0
  namespace: default
spec:
  containers:
    - name: demo-pod-0
      image: busybox
      command: ["/bin/sh"]
      args: ["-c", "while true; do sleep 1000s; done"]
      volumeMounts:
        - mountPath: "/data"
          name: demo-vol
  volumes:
    - name: demo-vol
      persistentVolumeClaim:
        claimName: demo-vol-claim-0
EOF

$ kubectl apply -f demo-pod.yaml

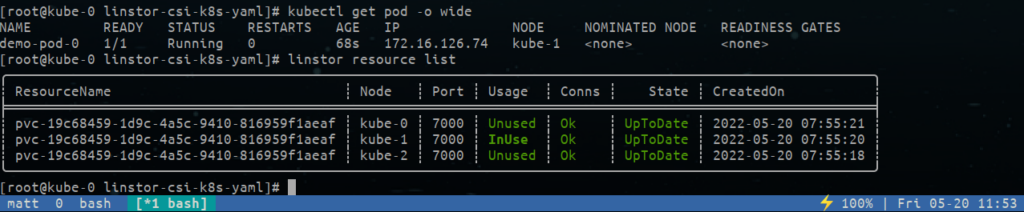

Shortly after deploying, you will have a running pod and your LINSTOR resource listed as InUse.
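To confirm that the volume is writable from inside the pod, you could write a file to the mount point and read it back. This is a sketch, assuming the pod name and mount path from the manifest above. `verify_demo_volume` and the `hello.txt` file name are made up for this example.

```shell
# Hypothetical helper: write a marker file into the mounted volume of
# demo-pod-0, then read it back.
verify_demo_volume() {
    kubectl exec demo-pod-0 -- sh -c \
        'echo "hello from LINSTOR" > /data/hello.txt && cat /data/hello.txt'
}
```

If the volume is mounted correctly, calling `verify_demo_volume` should echo the marker text back from the pod.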

Hopefully, you’ve found this information helpful in your quest to deploy LINSTOR outside of Kubernetes. Again, if you’re new to LINSTOR in Kubernetes, LINBIT recommends starting your testing with the LINSTOR Operator for Kubernetes. If you have any questions about either approach, LINBIT is always available to talk with its users and customers. All you have to do is reach out.

Changelog

2022-06-02:

- Originally published post.

2024-08-07:

- Tech review and update.