Introduction

As the developers of DRBD®, LINBIT® has led the way in high availability since 2001. DRBD has been included in the Linux kernel since version 2.6.33 (2009), has been deployed on all major Linux distributions, and is fully compatible with systems, applications, devices, and services. LINBIT specializes in block level replication, high availability, disaster recovery, and software defined storage.

Objective

In this blog post we’ll explore several options for providing storage across the network, along with benchmark results. We will test replicated, high-performance disk partitions that can be consumed by various virtualization platforms such as VMware, Hyper-V, Xen, etc. Access to the storage can be remote (drbd-diskless or iSCSI) or direct. While iSCSI has historically been the go-to for network attached storage, we will present data suggesting there is a better way: using DRBD in diskless mode.

Infrastructure & Methodology

a. Infrastructure

The infrastructure consists of three servers and one 10 Gigabit switch. We divide the servers into two roles: storage and consumer. Two servers act as disaggregated storage and one server acts as the consumer. CAT8 cables and the 10 Gigabit switch are used to keep the network from becoming a performance bottleneck between servers.

The storage servers are named Node1 and Node2, and the consumer is named USAP1.

All servers use RHEL 8.2 and are running kernel version: 4.18.0-193.19.1.el8_2.x86_64.

The 8 NVMe disks on the Node1 and Node2 servers were each divided into two partitions using mkpart. The first partitions were combined into a Volume Group with LVM, without using VDO (Virtual Data Optimizer). On the second partitions, the VDO layer was created first, and then a Volume Group was created on top of it using LVM, so VDO sits below the LVM layer. LINSTOR® is used to create, delete, or modify the DRBD volumes.

Layer structure:

First partition: Disk -> LVM -> DRBD Resource (striped across the disks)

Second partition: Disk -> VDO -> LVM -> DRBD Resource (striped across the disks)

LINSTOR and DRBD versions installed to all servers are as follows: (DRBD versions were replaced during tests)

· LINSTOR – 1.4.0

· DRBD – 9.0.24

· DRBD – 9.0.25

· DRBD – 9.1a4

· drbdadm – 9.0.15

· Pacemaker – 2.0.2

b. Testing Methodology

The test consists of several variables: the DRBD version, the method of accessing the disks, Single Thread or Multi Thread operation, VDO and encryption, and whether DRBD is tuned.

For each combination of these variables, the following FIO command was run for 5 minutes to send a large amount of I/O to the disks. We waited 5 minutes between tests so that load from one test would not affect the next. The parameters used in our FIO test are as follows:

RW: Random Write

Block Size: 4K

Threads: 1 for Single Thread (ST), 30 for Multi Thread (MT)

IO Depth: 128

Example FIO Command: fio --name=randwrite4k --rw=randwrite --bs=4k --numjobs=1 --iodepth=128 --ioengine=libaio --direct=1 --group_reporting --time_based --runtime=5M --status-interval=1M --filename=/dev/drbd1001 --output=/linstor/results/VDO_4k_RW_ST.txt
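The ST and MT runs differ only in the number of jobs, so the command can be generated rather than typed by hand. The following is a minimal Python sketch of that idea, not the script used for these tests. The function name `build_fio_args` and the MT output file name are hypothetical; every other value is taken from the example command above.

```python
import shlex


def build_fio_args(numjobs, device="/dev/drbd1001",
                   output="/linstor/results/VDO_4k_RW_ST.txt"):
    """Return the fio argument list for a 5-minute 4K random-write run.

    Warning: running the resulting command writes directly to `device`.
    """
    return [
        "fio",
        "--name=randwrite4k",
        "--rw=randwrite",
        "--bs=4k",
        f"--numjobs={numjobs}",      # 1 for ST, 30 for MT
        "--iodepth=128",
        "--ioengine=libaio",
        "--direct=1",
        "--group_reporting",
        "--time_based",
        "--runtime=5M",
        "--status-interval=1M",
        f"--filename={device}",
        f"--output={output}",
    ]


# Single-thread run, exactly as in the example command.
st_cmd = shlex.join(build_fio_args(1))

# Multi-thread run; the output file name is an assumption for illustration.
mt_cmd = shlex.join(
    build_fio_args(30, output="/linstor/results/VDO_4k_RW_MT.txt")
)

print(st_cmd)
print(mt_cmd)
```

To execute a run instead of printing it, the argument list can be passed to `subprocess.run(build_fio_args(1), check=True)`.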

DRBD Version: There are 3 versions of DRBD in the test suite – DRBD 9.0.24 (Old version), DRBD 9.0.25 (Stable Current Version), and DRBD 9.1a4 (Beta & Under Development)

Performance Tuning: In our tests, we aimed to increase performance by tuning DRBD configurations in the same way in every scenario. While it has been useful in some cases, it has proved disadvantageous in other cases. Our improvements in this regard are still progressing through additional development efforts. Below you can see the tuning parameters.

· al-extents 8000

· disk-flushes no

· md-flushes no

· max-buffers 10000
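To show where these four options would sit in a DRBD configuration, here is a small Python sketch that renders them as option sections. It assumes the grouping used by drbd.conf(5) in DRBD 9, where `al-extents` and the two flush options belong to the `disk` section and `max-buffers` belongs to the `net` section, and where the flush options are spelled `disk-flushes` and `md-flushes`. The helper `render_options` is hypothetical. In our setup the options were applied through LINSTOR, so treat this only as an illustration of the resulting settings.

```python
# Tuning values from the list above, grouped by the drbd.conf section
# each option is assumed to belong to (verify against drbd.conf(5)).
TUNING = {
    "disk": {
        "al-extents": "8000",
        "disk-flushes": "no",
        "md-flushes": "no",
    },
    "net": {
        "max-buffers": "10000",
    },
}


def render_options(tuning):
    """Render a dict of sections into drbd.conf-style option blocks."""
    lines = []
    for section, options in tuning.items():
        lines.append(f"{section} {{")
        for name, value in options.items():
            lines.append(f"    {name} {value};")
        lines.append("}")
    return "\n".join(lines)


print(render_options(TUNING))
```

Disabling disk and metadata flushes is generally only considered safe when the backing storage has a non-volatile or battery-backed write cache.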

Accessing the Disks: There are 3 ways to access the disks: local (testing the disks on Node1 directly from Node1), drbd-diskless (accessing the disks on Node1 or Node2 from USAP1 over the DRBD protocol), and iSCSI (accessing the disks on Node1 and Node2 from USAP1 over the iSCSI protocol).

Thread Count: All scenarios are tested in Single and Multi Thread.

Deduplication: VDO is used for the deduplication layer in RHEL. It performs best when it is below the LVM layer, and the testing suite follows this recommendation.

Encryption: We attempted to use encryption in the tests. However, despite our efforts with RHEL 8.2 and various kernels, we were unable to get it to work. While encryption was activated on the disks, processes hung in the kernel. We will open a bug report with Red Hat on this issue and follow up.

Learning & Recommendations

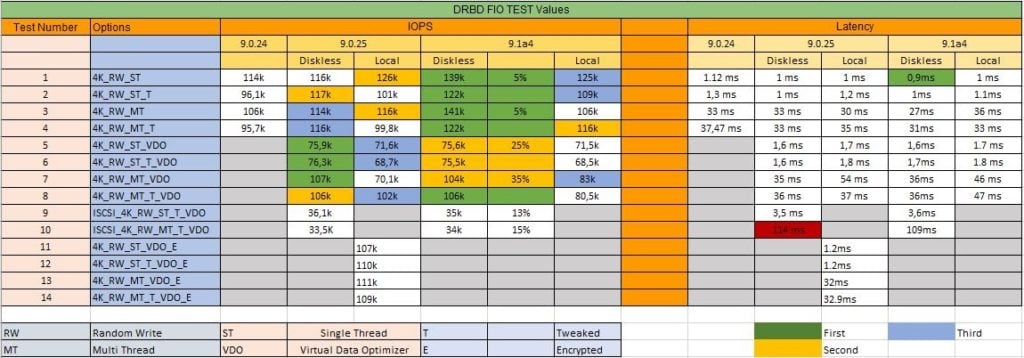

All obtained values and test results are represented in the table below.

What We Learned

· DRBD 9.1 is faster than the DRBD 9.0 versions in multiple scenarios. DRBD 9.0.24 is the slowest of all versions.

· The difference between the versions in “local disk tests” is approximately 10%.

· DRBD version 9.0.25 stands out as the most successful in local disk tests.

· When VDO is added to a Single Thread environment, performance losses reach 50%. However, when VDO is added to a Multi Thread environment, the loss is reduced to 25%.

· Due to the limits of the iSCSI protocol, single resource performance cannot exceed 35K IOPS.

· Due to iSCSI limits, performance cannot exceed a certain value no matter which DRBD version is used. DRBD performance goes far beyond what iSCSI can offer.

· DRBD-Diskless mode stands out as the most efficient option (including local disks) among all tests. 141K IOPS, the highest IOPS value obtained from a single resource, was achieved with DRBD 9.1 and DRBD-Diskless (accessing the resource from a different machine via the DRBD protocol).

· When using Multithreading, latency increases by approximately 1 ms per thread.
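The per-thread latency figure is roughly what Little's law predicts once throughput has reached a ceiling: mean latency equals the number of outstanding I/Os divided by IOPS. The sketch below works through that arithmetic with the fio queue depth of 128 and the 141K IOPS peak reported above. It assumes that IOPS stays at the same ceiling as jobs are added, which is a simplification and not something measured separately in these tests.

```python
def mean_latency_ms(outstanding_ios, iops):
    """Little's law: mean latency = outstanding I/Os / throughput."""
    return outstanding_ios / iops * 1000.0


IODEPTH = 128        # per-job queue depth from the fio parameters
PEAK_IOPS = 141_000  # highest single-resource value reported in this post

one_job = mean_latency_ms(1 * IODEPTH, PEAK_IOPS)

# Assumption: throughput stays at the same ceiling with 30 jobs,
# so the extra queued I/Os only add waiting time.
thirty_jobs = mean_latency_ms(30 * IODEPTH, PEAK_IOPS)

per_extra_job = (thirty_jobs - one_job) / 29

print(f"1 job:   {one_job:.2f} ms")
print(f"30 jobs: {thirty_jobs:.2f} ms")
print(f"per additional job: {per_extra_job:.2f} ms")
```

Under these assumptions each additional job adds about 0.9 ms of mean latency, in line with the roughly 1 ms per thread observed.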

Resource Consumption

While running the replication scenarios in our tests, we examined the resource consumption on the storage nodes. In all tests performed, there was no noticeable effect on memory usage.

Regarding CPU Usage:

In Test 1 and Test 3 in diskless mode, without VDO, DRBD produces 141K IOPS while consuming only 5% CPU. When we run the same tests on local disks, we see 7% CPU consumption. Because the difference in IOPS between the two modes is only approximately 2%, diskless mode is more advantageous than local disks in terms of CPU cost.

When VDO is activated under the same conditions, CPU consumption is 25% with Single Thread and around 35% with Multi Thread. The difference is clearly attributable to VDO. iSCSI, on the other hand, consumes between 13% and 15% CPU in both Single Thread and Multi Thread modes, yet it never exceeds the 35K IOPS level.

As a result, the CPU load of DRBD on the servers does not exceed 5% even while delivering the best results, and the load increases only because of the additional computation when VDO is activated. Conversely, because of its limitations, iSCSI comes nowhere near the 141K IOPS at 5% CPU of DRBD diskless mode, even though it produces only 35K IOPS.
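One way to put these numbers side by side is IOPS delivered per percentage point of CPU. The short Python calculation below uses only the figures quoted in this section. The function name is hypothetical, and because 35K is the upper limit we saw for iSCSI, the iSCSI values are a best case.

```python
def iops_per_cpu_percent(iops, cpu_percent):
    """IOPS delivered for each percentage point of CPU consumed."""
    return iops / cpu_percent


# DRBD diskless without VDO: 141K IOPS at 5% CPU.
drbd_diskless = iops_per_cpu_percent(141_000, 5)

# iSCSI: at most 35K IOPS at 13% to 15% CPU.
iscsi_best = iops_per_cpu_percent(35_000, 13)
iscsi_worst = iops_per_cpu_percent(35_000, 15)

print(f"DRBD diskless: {drbd_diskless:.0f} IOPS per CPU %")
print(f"iSCSI:         {iscsi_worst:.0f} to {iscsi_best:.0f} IOPS per CPU %")
print(f"Ratio:         {drbd_diskless / iscsi_best:.1f}x to "
      f"{drbd_diskless / iscsi_worst:.1f}x")
```

By this measure DRBD diskless mode delivers roughly ten times more IOPS per unit of CPU than iSCSI in these tests.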

Recommendations & Result

The use case is about consuming replicated disks from remote compute nodes. The result we need to focus on is therefore the test in which DRBD performs best over a remotely accessible protocol. Under these circumstances, it is most reasonable to access the disks using DRBD 9.1 and drbd-diskless. As the table clearly shows, drbd-diskless performs better even in comparison with local disks. Every layer added to the disk slows down performance. While the VDO layer causes a loss of approximately 20%, it also creates a load on the CPU. Encryption, when activated in the same way, is expected to cause some further loss of performance, but we expect drbd-diskless with DRBD 9.1 to meet the desired values even with VDO and encryption. In our tests, the iSCSI protocol has clearly shown that it cannot be used here.

The maximum performance that can be obtained from each NVMe drive is quite high. However, once DRBD replication is involved, the value drops by a ratio of roughly 20 to 1. The reason for this is to keep DRBD's CPU utilization under control. Therefore, in the scenario to be established, instead of using a single DRBD resource between the storage nodes, activating a separate DRBD resource for each VM will enable you to make the best use of the entire infrastructure. Recent DRBD development in particular has brought serious improvements for running multiple DRBD resources. In one known use case, more than 6,000 resources are used in a single cluster. This type of setup will lead you to use your infrastructure more efficiently and effectively in terms of performance.
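As an illustration of the one-resource-per-VM layout, the following Python sketch prints the LINSTOR client commands that could create a replicated resource for each VM and attach it disklessly on the consumer. The VM names and the 20G size are hypothetical. The subcommands and the `--auto-place` and `--drbd-diskless` flags are written as we understand the LINSTOR client, and should be checked against `linstor --help` for the installed version before use.

```python
def per_vm_commands(vm_name, size, consumer="USAP1", replicas=2):
    """Return LINSTOR client commands for one VM's DRBD resource.

    The command syntax is an assumption; verify it against the
    installed LINSTOR client before running anything.
    """
    return [
        f"linstor resource-definition create {vm_name}",
        f"linstor volume-definition create {vm_name} {size}",
        # Place the replicas on the storage nodes.
        f"linstor resource create {vm_name} --auto-place {replicas}",
        # Attach the resource on the consumer without local storage.
        f"linstor resource create {consumer} {vm_name} --drbd-diskless",
    ]


# Hypothetical VM names and volume size, for illustration only.
for vm in ["vm01", "vm02", "vm03"]:
    for command in per_vm_commands(vm, "20G"):
        print(command)
```

Each VM then has its own DRBD resource, so I/O for different VMs is not funneled through a single resource.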