In this tutorial, I will show you how to use LINBIT SDS to orchestrate DRBD, bcache and ZFS in a stacked block storage layer.

We have three satellite nodes in this demo cluster, all running CentOS 8.4 (the latest release at the time of writing), and each node has one NVMe drive and three HDDs.

Satellite01 192.168.122.41

Satellite02 192.168.122.42

Satellite03 192.168.122.43

Install ZFS

Follow the official OpenZFS documentation to install ZFS first.

dnf install "https://zfsonlinux.org/epel/zfs-release.el8_4.noarch.rpm"

DKMS and kmod are both fine options. In this tutorial, we chose kmod to avoid installing development dependencies, and it is also a little faster to install because no kernel module has to be compiled.

yum-config-manager --disable zfs

yum-config-manager --enable zfs-kmod
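Before installing, you can confirm that only the kmod flavor of the ZFS repository is enabled. The following is a minimal sketch with a hypothetical helper, check_zfs_repos, which assumes the repository IDs zfs (DKMS) and zfs-kmod used above and reads the output of dnf repolist --enabled on stdin:

```sh
#!/bin/sh
# Hypothetical helper: verify that the kmod ZFS repo is enabled and the
# DKMS repo is not. Expects `dnf repolist --enabled` output on stdin and
# assumes the repo IDs "zfs" (DKMS) and "zfs-kmod" (kmod).
check_zfs_repos() {
  # Keep only the first column of each line, i.e. the repo ID.
  # The header line yields "repo", which matches neither ID.
  ids=$(awk '{ print $1 }')

  if ! printf '%s\n' "$ids" | grep -qx 'zfs-kmod'; then
    echo "zfs-kmod repository is not enabled" >&2
    return 1
  fi
  if printf '%s\n' "$ids" | grep -qx 'zfs'; then
    echo "DKMS repository 'zfs' is still enabled" >&2
    return 1
  fi
  echo "ZFS repositories OK: zfs-kmod enabled, zfs (DKMS) disabled"
}
```

On each satellite, run it as dnf repolist --enabled | check_zfs_repos. Matching whole lines (grep -x) keeps the ID zfs from matching zfs-kmod.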

dnf install kmod-zfs zfs

Install bcache

At the time of writing, the CentOS 8.x and EPEL repositories don’t ship bcache RPM packages, so you have to compile bcache yourself or, if you have valid LINBIT credentials, simply install it from the LINBIT repository.

dnf install kmod-bcache bcache-tools

Install DRBD

As with bcache, if you have valid LINBIT credentials, you can install DRBD from the LINBIT repository.

dnf install drbd kmod-drbd

Optional configuration

By default, the ZFS kernel module won’t be loaded automatically at boot until at least one zpool exists. Alternatively, you can load the ZFS, bcache and DRBD kernel modules on every boot by listing them in /etc/modules-load.d/.
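If you prefer a single step, the modules-load.d files in this section can also be written by one function. This is a minimal sketch; write_module_configs is a hypothetical name, and the target directory is a parameter (defaulting to /etc/modules-load.d) so you can try it against a scratch directory first:

```sh
#!/bin/sh
# Hypothetical helper: write one modules-load.d file per stack component.
# systemd-modules-load reads one module name per line from these files.
write_module_configs() {
  dir=${1:-/etc/modules-load.d}
  mkdir -p "$dir"
  printf '%s\n' zfs > "$dir/zfs.conf"
  printf '%s\n' bcache > "$dir/bcache.conf"
  # The DRBD file lists the core module and its TCP transport module,
  # matching the per-module commands in this section.
  printf '%s\n' drbd drbd_transport_tcp > "$dir/drbd.conf"
}
```

Because every file is overwritten with >, running the function again leaves the same content. The files are read at boot; modprobe loads a module immediately.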

ZFS

echo "zfs" > /etc/modules-load.d/zfs.conf

bcache

echo "bcache" > /etc/modules-load.d/bcache.conf

DRBD

echo "drbd" > /etc/modules-load.d/drbd.conf

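echo "drbd_transport_tcp" >> /etc/modules-load.d/drbd.conf

Double-check the modules

Files in /etc/modules-load.d/ are only read at boot, so reboot the nodes or load the modules right away with modprobe (for example, modprobe drbd) before checking.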

lsmod | grep -i bcache

lsmod | grep -i zfs

lsmod | grep -i drbd

If each of the three commands above prints output on all three satellite nodes, everything is working. Congratulations!
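To check all modules at once, you can read /proc/modules directly, which is the file lsmod formats. Below is a minimal sketch; missing_modules and the module list are assumptions based on this tutorial, and the file path is a parameter so the function can be tested against a sample file:

```sh
#!/bin/sh
# Modules this tutorial expects to be loaded (assumption: adjust as needed).
REQUIRED_MODULES="zfs bcache drbd drbd_transport_tcp"

# Hypothetical helper: print each required module that is not loaded.
# Returns 0 when all modules are present, 1 otherwise.
missing_modules() {
  modules_file=${1:-/proc/modules}
  rc=0
  for mod in $REQUIRED_MODULES; do
    # The first column of /proc/modules is the module name.
    if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file"; then
      echo "$mod"
      rc=1
    fi
  done
  return "$rc"
}
```

On each satellite, missing_modules && echo "all modules loaded" prints nothing but the success message when the stack is ready.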

Now we are going to set up ZFS; nothing needs to change in DRBD or bcache for now.

Create zpool(s) on all satellite nodes
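The commands below assume identical device names (/dev/sda, /dev/sdb, /dev/sdc and /dev/nvme0n1) on every satellite, so a quick pre-flight check on each node can catch a mismatch before anything is created. Also note that listing three disks without a vdev type such as mirror or raidz makes DataPool a plain stripe with no redundancy inside the node; in this setup, redundancy comes from DRBD keeping three replicas (--place-count 3). A minimal sketch with a hypothetical helper:

```sh
#!/bin/sh
# Hypothetical helper: confirm that every argument is an existing block
# device. Reports each offending path on stderr and returns 1 if any fail.
check_block_devices() {
  rc=0
  for dev in "$@"; do
    if [ ! -b "$dev" ]; then
      echo "not a block device: $dev" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

For example: check_block_devices /dev/sda /dev/sdb /dev/sdc /dev/nvme0n1 && zpool create DataPool /dev/sda /dev/sdb /dev/sdc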

zpool create DataPool /dev/sda /dev/sdb /dev/sdc

zpool create CachePool /dev/nvme0n1

Let’s look into “LINBIT SDS”

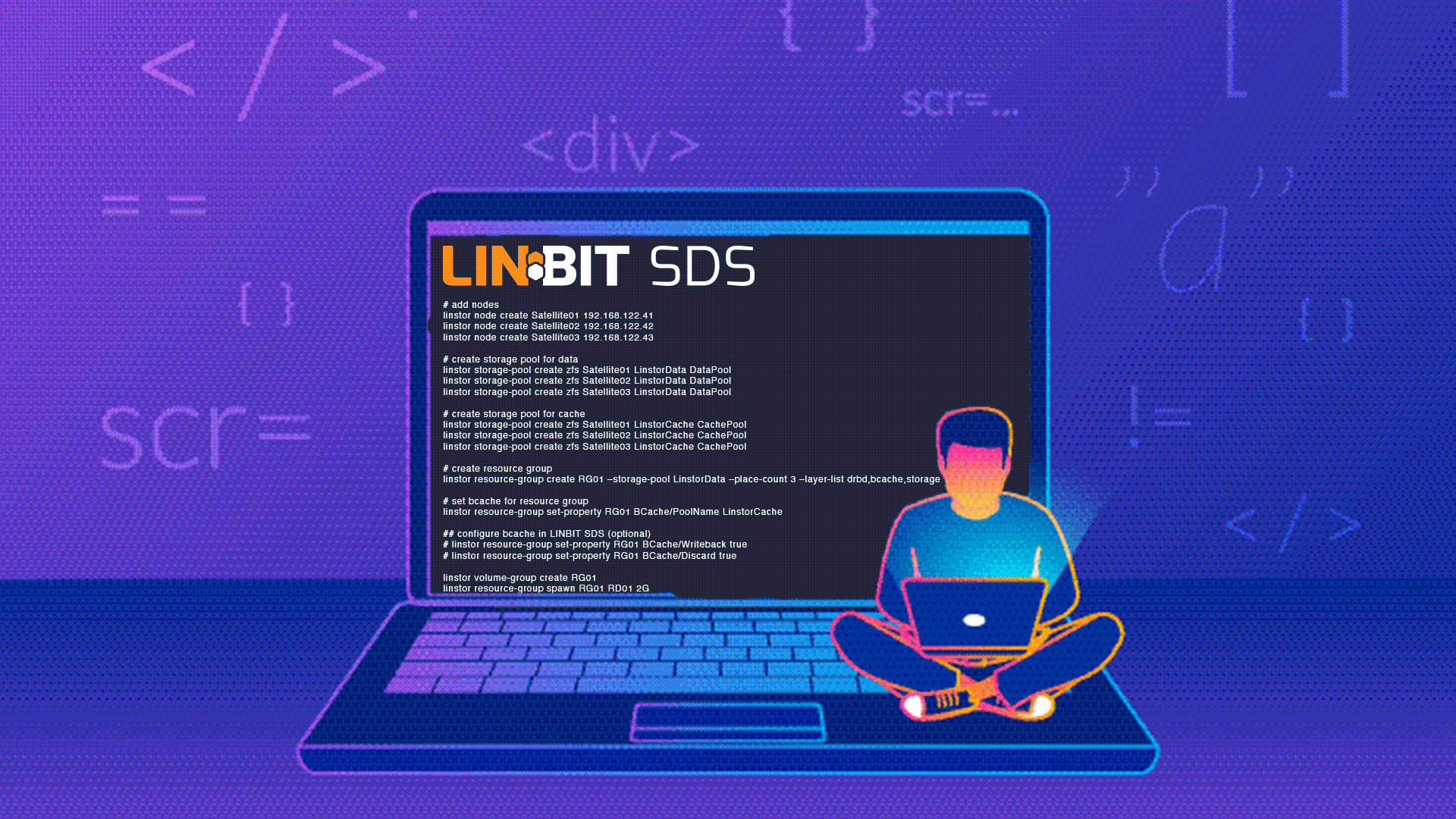

# add nodes

linstor node create Satellite01 192.168.122.41

linstor node create Satellite02 192.168.122.42

linstor node create Satellite03 192.168.122.43

# create storage pool for data

linstor storage-pool create zfs Satellite01 LinstorData DataPool

linstor storage-pool create zfs Satellite02 LinstorData DataPool

linstor storage-pool create zfs Satellite03 LinstorData DataPool

# create storage pool for cache

linstor storage-pool create zfs Satellite01 LinstorCache CachePool

linstor storage-pool create zfs Satellite02 LinstorCache CachePool

linstor storage-pool create zfs Satellite03 LinstorCache CachePool

# create resource group

linstor resource-group create RG01 --storage-pool LinstorData --place-count 3 --layer-list drbd,bcache,storage

# set bcache for resource group

linstor resource-group set-property RG01 BCache/PoolName LinstorCache

## configure bcache in LINBIT SDS (optional)

# linstor resource-group set-property RG01 BCache/Writeback true

# linstor resource-group set-property RG01 BCache/Discard true

linstor volume-group create RG01

linstor resource-group spawn RG01 RD01 2G

All done! RD01 is a stacked block device backed by DRBD, bcache and ZFS, from top to bottom.
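The node and storage-pool commands above repeat one pattern per satellite. If you add nodes later, it can help to generate them from a single node list. This is a minimal sketch; setup_satellites, run and DRY_RUN are hypothetical names, and with DRY_RUN=1 (the default here) the commands are only printed so you can review them first:

```sh
#!/bin/sh
# Dry-run by default: print commands instead of executing them.
DRY_RUN=${DRY_RUN:-1}

# name:IP pairs of the satellites in this demo cluster.
SATELLITES="Satellite01:192.168.122.41 Satellite02:192.168.122.42 Satellite03:192.168.122.43"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

setup_satellites() {
  # Register all nodes first, as in the tutorial, then create their pools.
  for entry in $SATELLITES; do
    run linstor node create "${entry%%:*}" "${entry#*:}"
  done
  for entry in $SATELLITES; do
    run linstor storage-pool create zfs "${entry%%:*}" LinstorData DataPool
  done
  for entry in $SATELLITES; do
    run linstor storage-pool create zfs "${entry%%:*}" LinstorCache CachePool
  done
}
```

Run setup_satellites to review the nine commands, then DRY_RUN=0 setup_satellites to execute them.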

What does “stacked block device” mean?

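When you write to RD01, DRBD replicates the data to the other nodes. On each node, the write then passes through bcache, which uses the NVMe-backed cache pool to speed up I/O (write acceleration requires bcache’s writeback mode), and the data is finally persisted on the HDD-backed ZFS pool.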
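To make the ordering concrete, here is a toy illustration (not LINSTOR code) that walks a layer list such as the drbd,bcache,storage value given to RG01 and prints what each layer does to a write on one node, top to bottom. describe_stack is a hypothetical name, and the descriptions summarize the paragraph above:

```sh
#!/bin/sh
# Toy illustration: print the per-node write path for a LINSTOR layer list.
describe_stack() {
  i=1
  # Turn the comma-separated list into words and walk it top to bottom.
  for layer in $(printf '%s' "$1" | tr ',' ' '); do
    case "$layer" in
      drbd)    echo "$i. DRBD: replicates the write to the peer nodes" ;;
      bcache)  echo "$i. bcache: passes I/O through the LinstorCache pool (NVMe)" ;;
      storage) echo "$i. storage: persists the data in the LinstorData pool (ZFS on HDDs)" ;;
      *)       echo "$i. $layer: not covered by this sketch" ;;
    esac
    i=$((i + 1))
  done
}
```

For example, describe_stack drbd,bcache,storage prints three numbered lines, starting with the DRBD layer.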

Reach out if you want to discuss anything in this blog post. We’re always online and happy to talk.