Introduction

GitLab is a robust DevOps platform used by many organizations and developers. You can easily install GitLab as a self-managed web application. This blog post will guide you through all of the required steps to install and configure a highly available GitLab environment with DRBD® and Pacemaker.

Installation overview

This blog post contains configuration steps tested against the latest Ubuntu LTS release. If you’re not using Ubuntu, the steps below function as a more generalized guide, and you will need to modify some steps accordingly.

To create a highly available GitLab environment, you will need to install and configure the following components on each node:

- GitLab – A web-based DevOps platform. This blog covers installing GitLab Community Edition.

- Pacemaker – A cluster resource management (CRM) framework to manage starting, stopping, monitoring, and ensuring services are available within a cluster.

- Corosync – The cluster messaging layer that Pacemaker uses for communication and membership.

- DRBD – Makes GitLab’s data highly available by combining each node’s local storage with real-time replication over the network. DRBD creates a virtual block device that is replicated across the cluster. The latest version of DRBD 9 is available through our LINBIT PPA.

Node Configuration Overview

| Hostname | LVM Device | Volume Group | Logical Volume | External Interface | External IP | Replication Interface | Replication IP |
|---|---|---|---|---|---|---|---|
| node-a | /dev/vdb | vg_drbd | lv_gitlab | ens7 | 192.168.222.20 | ens8 | 172.16.0.20 |
| node-b | /dev/vdb | vg_drbd | lv_gitlab | ens7 | 192.168.222.21 | ens8 | 172.16.0.21 |

📝 NOTE: A virtual IP address (192.168.222.200) is required for GitLab web services to bind to.

❗ IMPORTANT: Most of the installation and configuration steps in this blog post will need to be performed on both nodes. Assume each step needs to be performed on both nodes unless stated otherwise.

Firewall configuration

If Ubuntu’s Uncomplicated Firewall (UFW) is used, open the following ports on each node:

| Port | Protocol | Service |
|---|---|---|
| 5403 | TCP | Corosync |
| 5404 | UDP | Corosync |
| 5405 | UDP | Corosync |
| 7788 | TCP | DRBD |
| 80 | TCP | HTTP |
| 443 | TCP | HTTPS |

📝 NOTE: DRBD (by convention) uses TCP port 7788 for the first resource. Any additional resources use an incremented port number.
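As a rough illustration of that port convention, the following sketch derives the port for additional resources and prints (rather than runs) the matching firewall rule. The `drbd_port` helper is hypothetical and not part of DRBD. It assumes that you assign ports sequentially starting at 7788, which is only a convention.

```shell
#!/bin/sh
# Hypothetical helper (not part of DRBD): print the conventional TCP port for
# the Nth DRBD resource, counting from 0, assuming ports are assigned
# sequentially starting at 7788.
drbd_port() {
    echo $((7788 + $1))
}

# Print, without running, the ufw rule for the first three resources.
for index in 0 1 2; do
    echo "sudo ufw allow $(drbd_port "$index")/tcp"
done
```

Only the first rule is needed for the single resource used in this blog post.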

Run the following ufw commands to open the required ports:

sudo ufw allow 5403/tcp
sudo ufw allow 5404:5405/udp
sudo ufw allow 7788/tcp
sudo ufw allow 80/tcp
sudo ufw allow 443/tcp

Installing DRBD

First, the LINBIT PPA needs to be configured on each node:

sudo add-apt-repository -y ppa:linbit/linbit-drbd9-stack

📝 NOTE: The LINBIT PPA is a community repository and might contain release candidate versions of our software. It differs slightly from the repositories available to LINBIT customers. The PPA is intended for testing and evaluation purposes. While LINBIT does not officially support releases in the PPA, feedback and issue reporting are welcome.

Install kernel headers for building the drbd-dkms package:

sudo apt -y install linux-headers-generic

Install DRBD:

sudo apt -y install drbd-dkms drbd-utils

Verify the newly installed DRBD 9 kernel module is loaded by running:

sudo modprobe drbd && modinfo drbd

Installing Pacemaker

To install Pacemaker, Corosync, and other related packages, issue the following command:

sudo apt -y install pacemaker corosync crmsh resource-agents-base resource-agents-extra

📝 NOTE: With the release of Ubuntu 24.04, crmsh is no longer installed as a dependency of the pacemaker package. Both pcs and crmsh can be used interchangeably to manage Pacemaker clusters.

After installation, both the pacemaker.service and corosync.service systemd services are enabled and running. Verify this by running systemctl status pacemaker corosync.
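If you prefer a scripted check over reading the full status output, a minimal sketch such as the following could be used. The `all_active` function is a hypothetical helper. It relies on `systemctl is-active` printing one state per line when given several units.

```shell
#!/bin/sh
# Hypothetical helper: read unit states on stdin (one per line) and succeed
# only if at least one line was read and every line is exactly "active".
all_active() {
    count=0
    while IFS= read -r state; do
        [ "$state" = "active" ] || return 1
        count=$((count + 1))
    done
    [ "$count" -gt 0 ]
}

# Intended usage on a cluster node (not run here):
#   systemctl is-active pacemaker corosync | all_active && echo "cluster services are running"
```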

Installing GitLab

This section contains a streamlined version of steps to install GitLab on both nodes. If you are unfamiliar with GitLab’s installation process, it is strongly suggested that you review the installation documentation before continuing with the rest of this blog post.

💡 TIP: Beyond reviewing the general instructions for installing GitLab, also review GitLab’s documentation for installing GitLab Community Edition on Ubuntu. Some configuration steps are omitted and beyond the scope of this blog post.

⚠ WARNING: GitLab generated notifications rely on either Postfix or Sendmail. As this configuration step is optional and dependent on your environment, it will be omitted for the sake of simplicity.

First, install required system packages:

sudo apt-get install -y curl openssh-server ca-certificates tzdata perl

Next, add the GitLab package repository:

curl https://packages.gitlab.com/install/repositories/gitlab/gitlab-ce/script.deb.sh | sudo bash

Install GitLab Community Edition:

sudo apt -y install gitlab-ce

Prevent GitLab from automatically updating:

sudo apt-mark hold gitlab-ce

Initialize GitLab. This process is automated, requires no user input, and might take several minutes:

sudo gitlab-ctl reconfigure

Once the initialization is finished, two separate GitLab instances will be running and can be accessed from each node’s IP address, http://192.168.222.20/ and http://192.168.222.21/.

Take note of the generated GitLab root account passwords on each node:

sudo grep ^Password /etc/gitlab/initial_root_password

After initializing GitLab and verifying it’s running on both nodes, the GitLab service needs to be stopped and disabled before configuring Pacemaker:

sudo systemctl disable --now gitlab-runsvdir.service

Configuring DRBD

First, it is necessary to configure a DRBD resource to store GitLab’s underlying data. Logical volumes are often used as backing devices for DRBD resources. Adjust your configuration as necessary according to your environment.

The Logical Volume Manager (LVM) commands used here are shown for your reference. If you use these commands, adjust the particulars such as device name, size, and others, according to your environment:

# sudo pvcreate /dev/vdb
  Physical volume "/dev/vdb" successfully created.
# sudo vgcreate vg_drbd /dev/vdb
  Volume group "vg_drbd" successfully created
# sudo lvcreate -L 20G -n lv_gitlab vg_drbd
  Logical volume "lv_gitlab" created.

It is highly recommended that you put your resource configurations in separate resource files residing in /etc/drbd.d, for example, /etc/drbd.d/gitlab.res:

resource gitlab {
    protocol C;
    device /dev/drbd0;
    disk /dev/vg_drbd/lv_gitlab;
    meta-disk internal;
    on node-a {
        address 172.16.0.20:7788;
    }
    on node-b {
        address 172.16.0.21:7788;
    }
}

💡 TIP: For more detailed instructions regarding initial configuration, please see the “Configuring DRBD” section of the DRBD User’s Guide.

Save the above resource configuration on both nodes. After saving the resource files, you need to initialize DRBD.

First, create the metadata for the DRBD resources. This step must be performed on both nodes:

sudo drbdadm create-md gitlab

Next, bring the resource up on both nodes:

sudo drbdadm up gitlab

The GitLab DRBD resource should now be in the Connected state and Secondary on both nodes. An Inconsistent disk state is expected. Verify the current state of the DRBD resource by running drbdadm status on either node. The output will show the following status information:

gitlab role:Secondary
  disk:Inconsistent open:no
  node-b role:Secondary
    peer-disk:Inconsistent

Next, DRBD either needs to perform a full initial synchronization or, for a brand new resource with no existing data, you can skip the initial synchronization by using the --clear-bitmap option. Run the following command on one node only:

sudo drbdadm --clear-bitmap new-current-uuid gitlab/0

📝 NOTE: The **/0** at the end of the above command is to specify the volume number of the resource. Even though the above example uses a single volume, it is still required to specify a volume number, 0 being the default.

The GitLab DRBD resource should now show the disk as UpToDate on both nodes. Verify the state by entering drbdadm status again. Output from the command will now show:

gitlab role:Secondary
  disk:UpToDate open:no
  node-a role:Secondary
    peer-disk:UpToDate

Creating a file system

⚠ WARNING: Pay close attention to which node each command in this section must be run on.

Once a DRBD resource has been created and initialized, you can create a file system on top of the new DRBD block device (/dev/drbd0). Create an ext4 file system on node-a only:

sudo mkfs.ext4 /dev/drbd0

📝 NOTE: You can use other file systems such as xfs or btrfs rather than ext4.

Configuring the GitLab data directory

After the file system has been created, you can mount it temporarily to set up the directory structure under /mnt/gitlab.

Create the /mnt/gitlab mount point on both nodes:

sudo mkdir /mnt/gitlab

Temporarily mount the new file system on node-a only:

sudo mount /dev/drbd0 /mnt/gitlab

Move the original GitLab configuration directories on both nodes:

sudo mv /var/log/gitlab /var/log/gitlab.orig
sudo mv /var/opt/gitlab /var/opt/gitlab.orig
sudo mv /etc/gitlab /etc/gitlab.orig

Create new directories for GitLab backed by DRBD on node-a only:

sudo mkdir -p /mnt/gitlab/var/log/gitlab
sudo mkdir -p /mnt/gitlab/var/opt/gitlab
sudo mkdir -p /mnt/gitlab/etc/gitlab

Use symbolic links to map GitLab’s original /etc/gitlab, /var/opt/gitlab, and /var/log/gitlab directories to the new replicated file system on both nodes:

sudo ln -s /mnt/gitlab/var/log/gitlab /var/log/gitlab
sudo ln -s /mnt/gitlab/var/opt/gitlab /var/opt/gitlab
sudo ln -s /mnt/gitlab/etc/gitlab /etc/gitlab

Copy the original GitLab data and configuration to the new replicated file system on node-a only:

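sudo rsync -azpv /var/log/gitlab.orig/ /mnt/gitlab/var/log/gitlab/
sudo rsync -azpv /var/opt/gitlab.orig/ /mnt/gitlab/var/opt/gitlab/
sudo rsync -azpv /etc/gitlab.orig/ /mnt/gitlab/etc/gitlab/

The trailing slash on each source path makes rsync copy the contents of the directory, including any hidden files that a /* glob would skip.

Finally, unmount the DRBD backed file system on node-a: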

sudo umount /mnt/gitlab

GitLab’s data and configuration now reside on a file system backed by DRBD and are available on either node. Please note that this “shared” file system can only be actively mounted on one node at a time.
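Before moving on, it can be worth confirming on each node that the three symbolic links point where you expect. The following is a minimal sketch. Both function names are hypothetical helpers, and the paths are the ones used in this section.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if $1 is a symbolic link whose target is
# exactly $2. The target does not need to exist, so this also works on the
# node where the replicated file system is not mounted.
link_points_to() {
    [ -L "$1" ] && [ "$(readlink "$1")" = "$2" ]
}

# Report the state of the three GitLab links created in this section.
check_gitlab_links() {
    for dir in var/log/gitlab var/opt/gitlab etc/gitlab; do
        if link_points_to "/$dir" "/mnt/gitlab/$dir"; then
            echo "ok: /$dir"
        else
            echo "missing or wrong: /$dir"
        fi
    done
}
```

Running check_gitlab_links on both nodes should print three "ok" lines if the links were created as shown above.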

Configuring Corosync

An excellent corosync.conf example file can be found in the appendix of ClusterLabs’ Clusters from Scratch document. It is highly recommended to take advantage of Corosync’s redundant communication rings feature if more than one network is available in the cluster. To enable redundant ring support, add the rrp_mode option and define multiple addresses for each node.

Temporarily stop Pacemaker and Corosync to configure cluster membership:

sudo systemctl stop pacemaker corosync

Rename the corosync.conf file generated during the installation process to corosync.conf.bak:

sudo mv /etc/corosync/corosync.conf{,.bak}

Create and edit the /etc/corosync/corosync.conf file on both nodes. It should look similar to the following:

totem {
    version: 2
    secauth: off
    cluster_name: cluster
    transport: knet
    rrp_mode: passive
}

nodelist {
    node {
        ring0_addr: 172.16.0.20
        ring1_addr: 192.168.222.20
        nodeid: 1
        name: node-a
    }
    node {
        ring0_addr: 172.16.0.21
        ring1_addr: 192.168.222.21
        nodeid: 2
        name: node-b
    }
}

quorum {
    provider: corosync_votequorum
    two_node: 1
}

logging {
    to_syslog: yes
}

Now that Corosync has been configured, you can start Corosync and Pacemaker:

sudo systemctl start corosync pacemaker

Verify that both nodes are listed as Online by entering sudo crm status. Output will show something similar to the following:

Cluster Summary:
  * Stack: corosync (Pacemaker is running)
  * Current DC: node-a (version 2.1.6-6fdc9deea29) - partition with quorum
  * Last updated: Thu Nov 21 04:29:01 2024 on node-a
  * Last change: Thu Nov 21 04:25:55 2024 by hacluster via crmd on node-a
  * 2 nodes configured
  * 0 resource instances configured

Node List:
  * Online: [ node-a node-b ]

Full List of Resources:
  * No resources

📝 NOTE: It can take a few seconds for the nodes to enter an Online state while Pacemaker elects a designated coordinator (DC).
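Because the nodes might need a few seconds to come online, a small polling loop can save repeated manual checks. The sketch below assumes the "Online: [ ... ]" line format shown in the output above. The `nodes_online` function is a hypothetical helper, not a crmsh feature.

```shell
#!/bin/sh
# Hypothetical helper: read `crm status` output on stdin and succeed only if
# every node name passed as an argument appears in the "Online: [ ... ]" line.
nodes_online() {
    # Extract the text between the square brackets of the Online line.
    online=$(sed -n 's/.*Online: \[\(.*\)].*/\1/p')
    for node in "$@"; do
        case " $online " in
            *" $node "*) ;;      # node is listed, keep checking
            *) return 1 ;;       # node is missing from the Online list
        esac
    done
}

# Intended usage on a cluster node (not run here):
#   until sudo crm status | nodes_online node-a node-b; do sleep 2; done
```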

Configuring Pacemaker

This section assumes you are about to configure a highly available GitLab cluster with the following configuration parameters:

- The DRBD resource backing all GitLab data (database, configuration, repositories, uploads, and more) is named gitlab and corresponds to the virtual block device /dev/drbd0.

- The DRBD virtual block device (/dev/drbd0) holds an ext4 file system which is to be mounted at /mnt/gitlab.

- GitLab will use that file system, and the web server will listen on a dedicated cluster IP address, 192.168.222.200.

- GitLab’s Omnibus interface and other related processes are controlled by the gitlab-runsvdir service and will now be managed by Pacemaker. To prevent this service from automatically starting during boot, it must be disabled in systemd.

On ONE node only, save the following configuration as cib.txt. Make any necessary changes, such as to the node names or the virtual IP address:

node 1: node-a
node 2: node-b
primitive p_drbd_gitlab ocf:linbit:drbd \
    params drbd_resource=gitlab \
    op start interval=0s timeout=240s \
    op stop interval=0s timeout=100s \
    op monitor interval="29s" role="Promoted" \
    op monitor interval="31s" role="Unpromoted"
ms ms_drbd_gitlab p_drbd_gitlab \
    meta promoted-max="1" promoted-node-max="1" \
    clone-max="2" clone-node-max="1" \
    notify="true"
primitive p_fs_gitlab ocf:heartbeat:Filesystem \
    params device="/dev/drbd0" directory="/mnt/gitlab" fstype=ext4 \
    op start interval=0 timeout=60s \
    op stop interval=0 timeout=60s \
    op monitor interval=20 timeout=40s
primitive p_gitlab systemd:gitlab-runsvdir \
    op start interval=0s timeout=120s \
    op stop interval=0s timeout=120s \
    op monitor interval=20s timeout=100s
primitive p_ip_gitlab IPaddr2 \
    params ip=192.168.222.200 cidr_netmask=24 \
    op start interval=0s timeout=20s \
    op stop interval=0s timeout=20s \
    op monitor interval=20s timeout=20s
group g_gitlab p_fs_gitlab p_ip_gitlab p_gitlab
colocation c_gitlab_on_drbd inf: g_gitlab ms_drbd_gitlab:Promoted
order o_drbd_before_gitlab ms_drbd_gitlab:promote g_gitlab:start
property cib-bootstrap-options: \
    have-watchdog=false \
    cluster-infrastructure=corosync \
    cluster-name=gitlab \
    stonith-enabled=false \
    maintenance-mode=true
rsc_defaults rsc-options: \
    resource-stickiness=200

On ONE node only, load the new Pacemaker configuration:

sudo crm configure load replace cib.txt

You can ignore the following warning. For simplicity, this article does not show configuring fencing.

WARNING: (unpack_config) warning: Blind faith: not fencing unseen nodes

❗ IMPORTANT: Without fencing, a two-node cluster runs the risk of a split-brain occurring. While split-brains can be manually recovered from, they can lead to extra downtime, and in the worst case, potential data loss.

At this point, Pacemaker does not immediately start resources in the cluster because of the property maintenance-mode=true.

Restart Pacemaker on both nodes one last time:

sudo systemctl restart pacemaker

📝 NOTE: This works around the version string not showing when checking the cluster status (sudo crm status).

On one node only, take the cluster out of maintenance mode:

sudo crm configure property maintenance-mode=false

Once the cluster exits maintenance mode with its new configuration, Pacemaker will:

- Start DRBD on both nodes.

- Select one node for promoting DRBD from Secondary to Primary.

- Mount the file system, configure the cluster IP address, and start the GitLab server instance on the same node.

- Commence resource monitoring.

Checking cluster status after configuration

Running sudo crm status (from either node) reveals GitLab is running on node-b:

Cluster Summary:
  * Stack: corosync (Pacemaker is running)
  * Current DC: node-b (version 2.1.6-6fdc9deea29) - partition with quorum
  * Last updated: Thu Nov 21 08:14:11 2024 on node-b
  * Last change: Thu Nov 21 08:13:38 2024 by root via cibadmin on node-b
  * 2 nodes configured
  * 5 resource instances configured

Node List:
  * Online: [ node-a node-b ]

Full List of Resources:
  * Resource Group: g_gitlab:
    * p_fs_gitlab (ocf:heartbeat:Filesystem): Started node-b
    * p_ip_gitlab (ocf:heartbeat:IPaddr2): Started node-b
    * p_gitlab (systemd:gitlab-runsvdir): Started node-b
  * Clone Set: ms_drbd_gitlab [p_drbd_gitlab] (promotable):
    * Promoted: [ node-b ]
    * Unpromoted: [ node-a ]

Running drbdadm status on node-b shows the GitLab resource is currently Primary:

gitlab role:Primary
  disk:UpToDate open:yes
  node-a role:Secondary
    peer-disk:UpToDate

Checking file system mounts on node-b with mount | grep gitlab shows that the replicated file system is currently mounted:

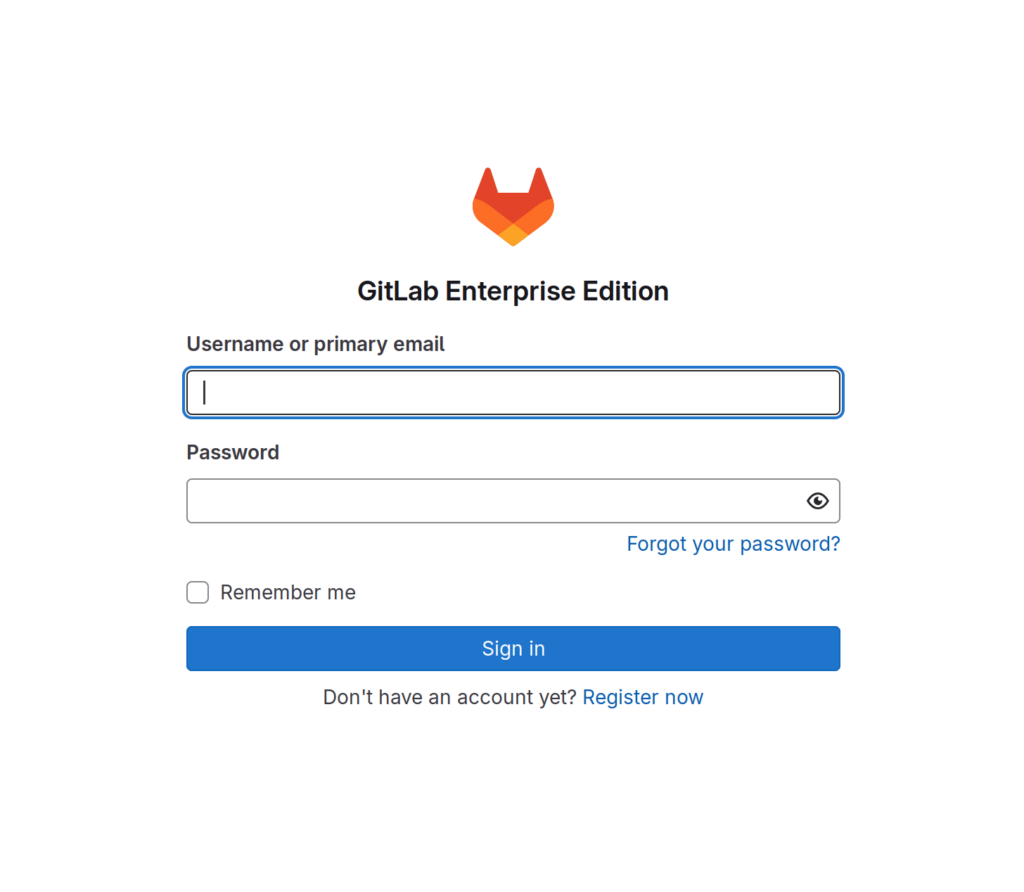

/dev/drbd0 on /mnt/gitlab type ext4 (rw,relatime)

Accessing GitLab

The highly available GitLab server instance is now ready for use. You can now access GitLab through the virtual IP, http://192.168.222.200/.

💡 TIP: The default external_url value of https://gitlab.example.com is defined in /etc/gitlab/gitlab.rb. Defining a DNS record or hosts entry for client machines to resolve to the virtual IP address of 192.168.222.200 is recommended. If the external_url needs to be updated, modify the value and run gitlab-ctl reconfigure on the node where GitLab is running.
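If you would rather script the external_url change than edit the file by hand, a sketch along these lines might work. The `set_external_url` function is a hypothetical helper. It assumes GNU sed, an uncommented external_url line at the start of a line in the file, and a URL that contains neither the | nor the & character.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the active (uncommented) external_url line in a
# gitlab.rb-style file. $1 is the path to the file, $2 is the new URL.
set_external_url() {
    sed -i "s|^external_url .*|external_url '$2'|" "$1"
}

# Intended usage, as root, on the node where GitLab is running (not run here):
#   set_external_url /etc/gitlab/gitlab.rb http://192.168.222.200
#   gitlab-ctl reconfigure
```

Because /etc/gitlab resides on the replicated file system, the change only needs to be made once, on the active node.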

Failure modes

This section highlights specific failure modes and the cluster’s corresponding reaction to the events.

Node failure

When one cluster node suffers an unexpected outage, the cluster migrates all resources to the other node.

In this scenario Pacemaker moves the failed node from the Online to the Offline state, and starts the affected resources on the surviving peer node.

📝 NOTE: Details of node failure are also explained in chapter 6 of the DRBD User’s Guide.

You should work to resolve the issue that caused the node failure and bring the node back to normal operation. This returns the cluster back to a healthy state.
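To confirm where GitLab ends up after a failover, you could extract the active node from the cluster status output. This is a minimal sketch that assumes the resource line format shown earlier in this post, for example "* p_gitlab (systemd:gitlab-runsvdir): Started node-b". The `resource_node` function is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: read `crm status` output on stdin and print the node on
# which the resource named in $1 is reported as Started. Prints nothing if the
# resource is not listed as Started.
resource_node() {
    awk -v rsc="$1" '
        # Strip the leading whitespace and bullet so the resource name is field 1.
        { sub(/^[ \t*]+/, "") }
        $1 == rsc && $(NF - 1) == "Started" { print $NF }
    '
}

# Intended usage on either cluster node (not run here):
#   sudo crm status | resource_node p_gitlab
```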

Storage failure

When the storage backing a DRBD resource fails on the Primary node, DRBD transparently detaches from its backing device, and continues to serve data over the DRBD replication link from its peer node.

📝 NOTE: Details of this functionality are explained in chapter 2 of the DRBD User’s Guide.

You should work to resolve the disk failure or replace the disk on the Primary node to return the cluster to a healthy state.

Service failure

If gitlab-runsvdir.service stops unexpectedly, for example because of an unexpected shutdown or a segmentation fault, the monitor operation for the p_gitlab resource detects the failure and Pacemaker restarts the systemd service.

📝 NOTE: The gitlab-runsvdir systemd service starts the Omnibus GitLab interface. You can interact with this through the gitlab-ctl front end. Omnibus is responsible for running essential sub-processes such as postgresql, nginx, grafana, among others. If a sub-process is killed, Omnibus will attempt to restart it. Running gitlab-ctl status will output all processes managed by Omnibus.
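To spot a sub-process that Omnibus has not managed to restart, you could filter the gitlab-ctl status output for services reported as down. The sketch below assumes runit-style status lines that begin with "run:" or "down:" followed by the service name, which is an assumption about the output format rather than something shown in this post. The `down_services` function is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: read `gitlab-ctl status` output on stdin and print the
# name of each service whose line starts with "down:". Assumes lines such as
# "run: nginx: (pid 1234) 56s; run: log: (pid 789) 56s".
down_services() {
    awk '$1 == "down:" { sub(/:$/, "", $2); print $2 }'
}

# Intended usage on the node where GitLab is running (not run here):
#   sudo gitlab-ctl status | down_services
```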

Network failure

If the DRBD replication link fails, DRBD continues to serve data from the Primary node, and re-synchronizes the DRBD resource automatically as soon as network connectivity is restored.

📝 NOTE: Details of this functionality are explained in chapter 2 of the DRBD User’s Guide.
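After connectivity returns, you might want to confirm that resynchronization has finished before treating the cluster as healthy. The following sketch checks drbdadm status output for the disk and peer-disk fields shown earlier in this post. The `drbd_all_uptodate` function is a hypothetical helper, not a DRBD command.

```shell
#!/bin/sh
# Hypothetical helper: read `drbdadm status` output on stdin and succeed only
# if at least one disk: or peer-disk: field is present and every one of them
# reports UpToDate.
drbd_all_uptodate() {
    awk '
        {
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^(peer-)?disk:/) {
                    seen++
                    if ($i !~ /:UpToDate$/) bad++
                }
            }
        }
        END { exit (seen > 0 && bad == 0) ? 0 : 1 }
    '
}

# Intended usage on either cluster node (not run here):
#   sudo drbdadm status gitlab | drbd_all_uptodate && echo "gitlab resource is in sync"
```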

GitLab HA alternatives

This blog post demonstrates just one way to achieve a highly available GitLab environment. Using DRBD and Pacemaker can be a cost-effective and fairly simple option for making any service highly available. For larger and more robust GitLab environments, there are other high availability configurations supported by GitLab. For example:

- GitLab with NFS – GitLab can support multiple instances for scaling and high availability when configured to use NFS for storage. See GitLab’s High Availability Reference Architecture. You can also create highly available NFS clusters by using Pacemaker and DRBD, or by using LINSTOR Gateway which configures LINSTOR, DRBD, and the Pacemaker alternative CRM, DRBD Reactor. See our NFS High Availability Clustering Guide for how-to instructions for using Pacemaker and DRBD to build an HA NFS solution. You can take inspiration from instructions in my blog article, “Highly Available NFS for Proxmox With LINSTOR Gateway”, for how you can use LINSTOR Gateway to achieve HA NFS.

- Omnibus GitLab with external services – GitLab’s Omnibus doesn’t need to manage every bundled service. For example, PostgreSQL, NGINX, and Redis can all be configured in their own highly available manner.

- GitLab Geo – Available only in the Premium and Ultimate tiers, not in Community Edition. GitLab Geo allows a replicated GitLab instance to function as a fully operational read-only instance that can be promoted during disaster recovery.

Conclusion

Building a highly available GitLab cluster with DRBD and Pacemaker is a simple and effective solution to ensure your GitLab environment is always up and running.

If you have any questions or need some help with the installation steps above, come stop by the LINBIT forums and join the community, or feel free to reach out to us.

Additional information and resources

- The GitLab project page and GitLab reference architectures.

- See our Pacemaker Clustering Command Cheatsheet for managing Pacemaker clusters with either crmsh or pcs.

- To learn more about DRBD, check out the DRBD User’s Guide.

- Fencing is always recommended for two-node clusters in production to avoid data divergence (so called “split-brain” situations).