The LINSTOR User Guide

Please Read This First

This guide is intended to serve users of the software-defined storage (SDS) solution LINSTOR® as a definitive reference guide and handbook.

| This guide assumes, throughout, that you are using the latest version of LINSTOR and related tools. |

This guide is organized as follows:

-

An Introduction to LINSTOR is a foundational overview of LINSTOR and provides explanations for LINSTOR concepts and terms.

-

Basic Administrative Tasks and System Setup deals with LINSTOR’s basic functionality and gives you insight into using common administrative tasks. You can also use this chapter as a step-by-step instruction guide to deploy LINSTOR with a basic, minimal setup.

-

Further LINSTOR Tasks shows a variety of advanced and important LINSTOR tasks and configurations, so that you can use LINSTOR in more complex ways.

-

Administering LINSTOR by GUI deals with the graphical client approach to managing LINSTOR clusters that is available to LINBIT® customers.

-

LINSTOR Integrations has chapters that deal with how to implement a LINSTOR based storage solution with various platforms and technologies, such as Kubernetes, Proxmox VE, OpenNebula, Docker, OpenStack, and others, by using the LINSTOR API.

Introduction to LINSTOR

1. An Introduction to LINSTOR

To use LINSTOR® effectively, this “Introduction to LINSTOR” chapter provides an overview of the software, explains how it works and deploys storage, and introduces and explains important concepts and terms to help your understanding.

1.1. An Overview of LINSTOR

LINSTOR is an open source configuration management system, developed by LINBIT® for storage on Linux systems. It manages LVM logical volumes, ZFS ZVOLs, or both, on a cluster of nodes. It uses DRBD® for replication between different nodes and to provide block storage devices to users and applications. Some of its features include snapshots, encryption, and caching of HDD backed data in SSDs.

1.1.1. Where LINSTOR is Used

LINSTOR was originally developed to manage DRBD resources. While you can still use LINSTOR to make managing DRBD more convenient, LINSTOR has evolved and it is often integrated with software stacks higher up to provide persistent storage more easily and more flexibly than would otherwise be possible within those stacks.

LINSTOR can be used on its own or you can integrate it with other platforms, such as Kubernetes, OpenShift, OpenNebula, OpenStack, Proxmox VE, and others. LINSTOR runs on bare-metal on-premise hardware, or you can use it within virtual machines (VMs), containers, clouds, or hybrid environments.

1.1.2. LINSTOR Supported Storage and Related Technologies

LINSTOR can work with the following storage provider and related technologies:

-

LVM and LVM thin volumes

-

ZFS and ZFS thin volumes

-

File and FileThin (loop devices)

-

Diskless

-

Exos [DEPRECATED]

-

SPDK (remote)

-

Microsoft Windows Storage Spaces and thin Storage Spaces

-

EBS (target and initiator)

-

Device mapper cache (

dm-cache) and writecache (dm-writecache) -

bcache

-

LUKS

-

DRBD

By using LINSTOR, you can work with these technologies on their own or else in various meaningful combinations.

1.2. How LINSTOR Works

A working LINSTOR setup requires one active controller node that runs the LINSTOR

controller software as a systemd service, linstor-controller.service. This is the LINSTOR

control plane, where the LINSTOR controller node communicates with LINSTOR satellite nodes.

The setup also requires one or more satellite nodes that run the LINSTOR satellite software as a

systemd service, linstor-satellite.service. The LINSTOR satellite service facilitates storage and related actions on the node, for example creating storage volumes to provide data storage to users and applications. However, satellite nodes do not have to provide

physical storage to the cluster. For example, you can have diskless satellite nodes that

participate in the LINSTOR cluster for DRBD quorum purposes.

| It is also possible for a node to run both the LINSTOR controller and satellite services and act in a Combined role. |

You can think of the storage technologies as implemented on LINSTOR satellite nodes, for example, DRBD replication, as the data plane. With LINSTOR, the control and data planes are separate and can function independently. This means, for example, that you can update the LINSTOR controller node or the LINSTOR controller software while your LINSTOR satellite nodes continue to provide (and replicate if using DRBD) storage to users and applications without interruption.

For convenience, a LINSTOR setup is often called a LINSTOR cluster in this guide, even though a valid LINSTOR setup can exist as an integration within another platform, such as Kubernetes.

Users can interact with LINSTOR by using either a CLI-based client or a graphical user interface (GUI). Both of these interfaces make use of the LINSTOR REST API. LINSTOR can integrate with other platforms and applications by using plugins or drivers that also make use of this API.

Communication between the LINSTOR controller and the REST API happens via TCP/IP and can be secured by using SSL/TLS.

The southbound drivers that LINSTOR uses to interface with physical storage are LVM, thinLVM and ZFS.

1.3. Installable Components

A LINSTOR setup has three installable components:

-

LINSTOR controller

-

LINSTOR satellite

-

LINSTOR user interfaces (LINSTOR client and LINBIT SDS GUI)

These installable components are either source code that you can compile, or else prebuilt packages, that you can use to install and run the software.

1.3.1. LINSTOR Controller

The linstor-controller service relies on a database that holds all the configuration

information for the whole cluster. A node or container running the LINSTOR controller software is responsible for resource placement,

resource configuration, and orchestration of any operational processes that require a view of

the whole cluster.

Multiple controllers can be used for LINSTOR, for example, when setting up a highly available LINSTOR cluster, but only one controller can be active.

As mentioned earlier, the LINSTOR controller operates on a separate plane from the data plane that it manages. You can stop the controller service, update or reboot the controller node, and still have access to your data hosted on the LINSTOR satellite nodes. While you can still access and serve the data on your LINSTOR satellite nodes, without a running controller node, you will not be able to perform any LINSTOR status or management tasks on the satellite nodes.

1.3.2. LINSTOR Satellite

The linstor-satellite service runs on each node where LINSTOR consumes local storage or

provides storage to services. It is stateless and receives all the information it needs from the

node or container running the LINSTOR controller service. The LINSTOR satellite service runs programs like lvcreate and drbdadm. It acts

like an agent on a node or in a container that carries out instructions that it receives from the LINSTOR controller node or container.

1.3.3. LINSTOR User Interfaces

When you need to interface with LINSTOR, you can send instructions to the active LINSTOR controller by using one of its user interfaces (UIs): the LINSTOR client, or the LINBIT SDS GUI.

Both of these UIs rely on the LINSTOR REST API.

LINSTOR Client

The LINSTOR client, linstor, is a command line utility that you can use to issue commands to

the active LINSTOR controller node. These commands can be action-oriented, such as commands that

create or modify storage resources in your cluster, or they can be status commands to glean

information about the current state of your LINSTOR cluster.

You can use the LINSTOR client either by entering linstor followed by valid commands and

arguments, or in the client’s interactive mode, by entering linstor on its own.

You can find more information about using the LINSTOR client in the Using the LINSTOR Client section in this user guide.

# linstor Use "help <command>" to get help for a specific command. Available commands: - advise (adv) - backup (b) - controller (c) - drbd-proxy (proxy) - encryption (e) - error-reports (err) - exos - file (f) - help - interactive - key-value-store (kv) - list-commands (commands, list) - node (n) - node-connection (nc) - physical-storage (ps) - remote - resource (r) - resource-connection (rc) - resource-definition (rd) - resource-group (rg) - schedule (sched) - snapshot (s) - sos-report (sos) - space-reporting (spr) - storage-pool (sp) - volume (v) - volume-definition (vd) - volume-group (vg) LINSTOR ==>

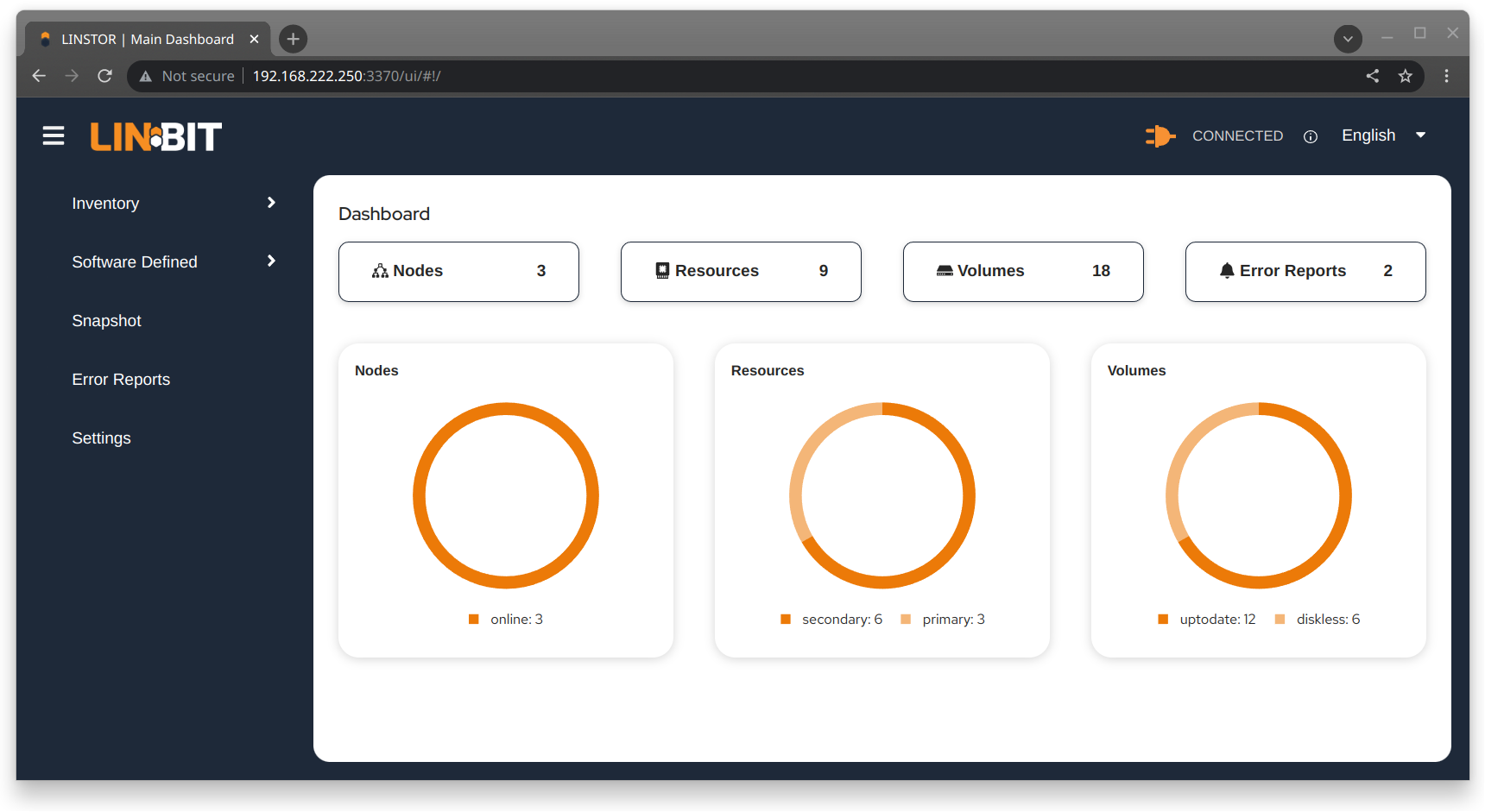

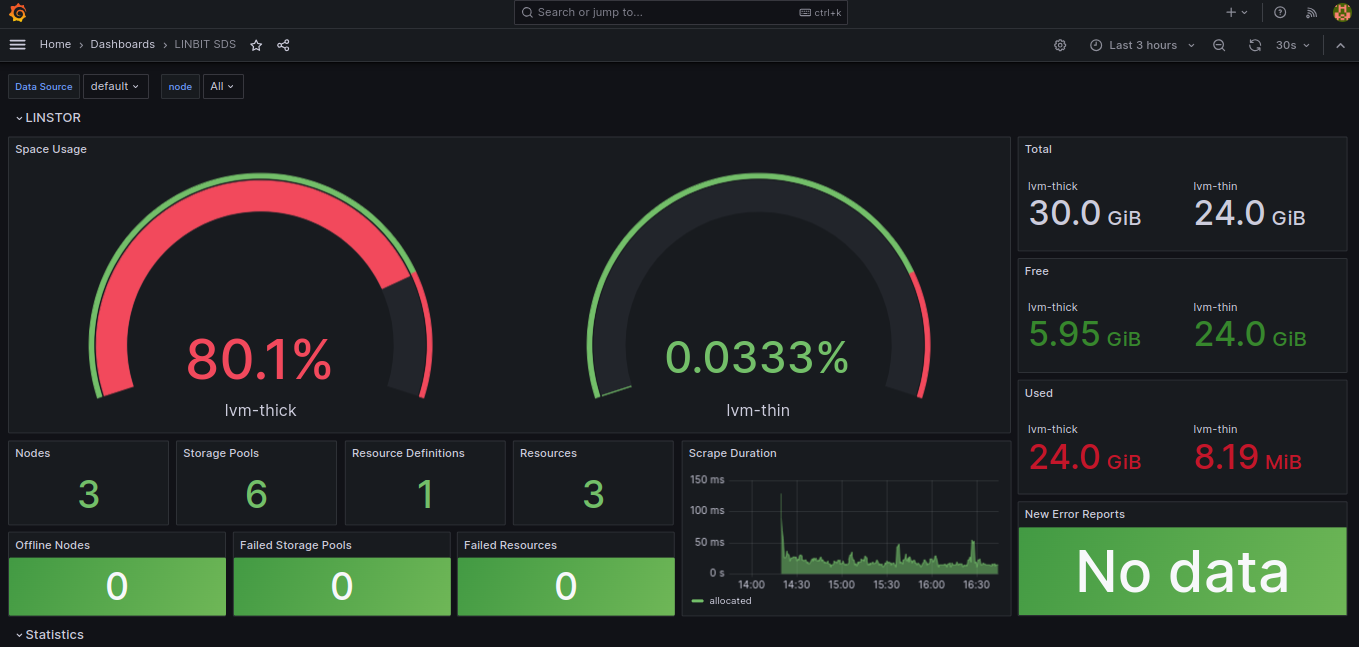

LINBIT SDS Graphical User Interface

The LINBIT SDS graphical user interface (GUI) is a web-based GUI that you can use to work with LINSTOR. It can be a convenient way to navigate and get overview information about a LINSTOR cluster, or add, modify, or delete LINSTOR objects within a cluster. For example, you can add nodes, add or delete resources, or do other tasks.

You can find more information about using the GUI interface in the LINBIT SDS GUI chapter in this user guide.

1.4. Internal Components

The internal components of LINSTOR are abstractions of the software code that are used to describe how LINSTOR works and how you use it. Examples of internal components would be LINSTOR objects, such as resources or storage pools. Although these are abstractions, you will interact with them in a very real way as you use either the LINSTOR client or GUI to deploy and manage storage.

Along the way, this section also introduces and explains core concepts and terms that you will need to familiarize yourself with to understand how LINSTOR works and how to use it.

1.4.1. LINSTOR Objects

LINSTOR takes an object-oriented approach to software-defined storage (SDS). LINSTOR objects are the end result that LINSTOR presents to the user or application to consume or build upon.

The most commonly used LINSTOR objects are explained below and a full list of objects follows.

Resource

A resource is the LINSTOR object that represents consumable storage that is presented to applications and end users. If LINSTOR is a factory, then a resource is the finished product that it produces. Often, a resource is a DRBD replicated block device but it does not have to be. For example, a resource could be diskless to satisfy DRBD quorum requirements, or it could be an NVMe-oF or EBS initiator.

A resource has the following attributes:

-

The name of the node that the resource exists on

-

The resource definition that the resource belongs to

-

Configuration properties of the resource

Volume

A volume is the closest LINSTOR internal component to physical storage and is a subset of a resource. A resource can have multiple volumes. For example, you might want to have a database stored on slower storage than its transaction log in a MySQL cluster. To accomplish this by using LINSTOR, you could have one volume for the faster transaction log storage media and another for the slower database storage media, and have both under a single “MySQL” resource. By keeping multiple volumes under a single resource you are essentially creating a consistency group.

An attribute that you specify for a volume takes precedence over the same attribute if it is also specified “higher up” in the LINSTOR object hierarchy. This is because, again, a volume is the closest internal LINSTOR object to physical storage.

Node

A Node is a server or container that participates in a LINSTOR cluster. The node object has the following attributes:

-

Name

-

IP address

-

TCP port

-

Node type (controller, satellite, combined, auxiliary)

-

Communication type (plain or SSL/TLS)

-

Network interface type

-

Network interface name

Storage Pool

A storage pool identifies storage that is assignable to other LINSTOR objects, such as LINSTOR resources, resource definitions, or resource groups, and can be consumed by LINSTOR volumes.

A storage pool defines:

-

The storage back-end driver to use for the storage pool on the cluster node, for example, LVM, thin-provisioned LVM, ZFS, and others

-

The node that the storage pool exists on

-

The storage pool name

-

Configuration properties of the storage pool

-

Additional parameters to pass to the storage pool’s back-end driver (LVM, ZFS, and others)

A List of LINSTOR Objects

LINSTOR has the following core objects:

EbsRemote |

ResourceConnection |

SnapshotVolumeDefinition |

ExternalFile |

ResourceDefinition |

StorPool |

KeyValueStore |

ResourceGroup |

StorPoolDefinition |

LinstorRemote |

S3Remote |

Volume |

NetInterface |

Schedule |

VolumeConnection |

Node |

Snapshot |

VolumeDefinition |

NodeConnection |

SnapshotDefinition |

VolumeGroup |

Resource |

SnapshotVolume |

1.4.2. Definition and Group Objects

While definitions and groups are also LINSTOR objects, they are a special kind. Definition and group objects can be thought of as profiles or templates. These template objects are used to create child objects that will inherit their parent object’s attributes. They might also have attributes that can affect child objects but are not attributes of the child objects themselves. These attributes could be things such as the TCP port to use for DRBD replication or the volume number that a DRBD resource should use.

Definitions

Definitions define the attributes of an object. Objects created from a definition will inherit the configuration attributes defined in the definition. A definition must be created before you can create an associated child object. For example, you must create a resource definition prior to creating the corresponding resource.

There are two LINSTOR definition objects that you can create directly, by using the LINSTOR client: resource definitions and volume definitions.

- Resource definition

-

Resource definitions can define the following attributes of a resource:

-

The resource group that the resource definition belongs to

-

The name of a resource (implicitly, by virtue of the resource definition’s name)

-

The TCP port to use for the resource’s connection, for example, when DRBD is replicating data

-

Other attributes such as a resource’s storage layers, peer slots, and external name.

-

- Volume definition

-

Volume definitions can define the following:

-

The size of the storage volume

-

The volume number of the storage volume (because a resource can have multiple volumes)

-

The metadata properties of the volume

-

The minor number to use for the DRBD device, if the volume is associated DRBD replicated storage

-

In addition to these definitions, LINSTOR has some indirect definitions: the storage pool definition, the snapshot definition, and the snapshot volume definition. LINSTOR creates these automatically when you create the respective object.

Groups

Groups are similar to definitions in that they are like profiles or templates. Where definitions apply to LINSTOR object instances, groups apply to object definitions. As the name implies, a group can apply to multiple object definitions, just as a definition can apply to multiple object instances. For example, you can have a resource group that defines resource attributes for a frequently needed storage use case. You can then use the resource group to easily spawn (create) multiple resources that need to have those attributes, without having to specify the attributes every time you create a resource.

- Resource group

-

A resource group is a parent object of a resource definition where all property changes made on a resource group will be inherited by its resource definition children[1]. The resource group also stores settings for automatic placement rules and can spawn a resource definition depending on the stored rules.

A resource group defines characteristics of its resource definition child objects. A resource spawned from the resource group, or created from a resource definition that belongs to the resource group, will be a “grandchild” object of a resource group and the “child” of a resource definition. Every resource definition that you create will be a member of the default LINSTOR resource group,

DfltRscGrp, unless you specify another resource group when creating the resource definition.Changes to a resource group will be applied to all resources or resource definitions that were created from the resource group, retroactively, unless the same characteristic has been set on a child object, for example, a resource definition or resource that was created from the resource group.

All of this makes using resource groups a powerful tool to efficiently manage a large number of storage resources. Rather than creating or modifying individual resources, you can simply configure a resource group parent, and all the child resource objects will receive the configuration.

- Volume group

-

Similarly, volume groups are like profiles or templates for volume definitions. A volume group must always reference a specific resource group. In addition, a volume group can define a volume number, and a “gross” volume size.

1.5. LINSTOR Object Hierarchy

As alluded to in previous subsections of this chapter, there is a concept of hierarchy among LINSTOR objects. Depending on the context, this can be described either as a parent-child relationship, or else as a higher-lower relationship where lower means closer to the physical storage layer[2].

A child object will inherit attributes that are defined on its parent objects, if the same attributes are not already defined on the child object. Similarly, a lower object will receive attributes that are set on higher objects, if the same attributes are not already defined on the lower object.

1.5.1. General Rules for Object Hierarchy in LINSTOR

The following are some general rules for object hierarchy in LINSTOR:

-

A LINSTOR object can only receive or inherit attributes that can be set on that object.

-

Lower objects receive attributes set on higher objects.

-

An attribute set on a lower object takes precedence over the same attribute set on higher objects.

-

Child objects inherit attributes set on parent objects.

-

An attribute set on a child object takes precedence over the same attribute set on parent objects.

1.5.2. Using Diagrams to Show Relationships Between LINSTOR Objects

This section uses diagrams to represent the hierarchical relationships between some of the most frequently used LINSTOR objects. Because of the number of LINSTOR objects and their interconnectedness, multiple diagrams are shown first rather than a single diagram, to simplify the conceptualization.

The next diagram shows the relationships between LINSTOR group objects on a single satellite node.

While the two preceding diagrams show higher-lower relationships between common LINSTOR objects, you can also think of some LINSTOR objects as having parent-child relationships. The next diagram introduces this kind of relationship between LINSTOR objects by using a storage pool definition (parent object) and a storage pool (child object) as an example. A parent object can have multiple child objects, as shown in the following diagram.

Having introduced the concept of parent-child relationships in a conceptual diagram, the next diagram is a modified version of the second diagram with some of those relationships added for groups and definitions. This modified diagram also incorporates some of the higher-lower relationships that were shown in the first diagram.

The next diagram synthesizes the relationship concepts of the preceding diagrams while also introducing new LINSTOR objects related to snapshots and connections. With the many objects and criss-crossing lines, the reason for building up to this diagram should be apparent.

Even with its seeming complexity, the preceding diagram is still a simplification and not an all-encompassing representation of the possible relationships between LINSTOR objects. As listed earlier, there are more LINSTOR objects than are shown in the diagram[3].

The good news is that you do not need to memorize the preceding diagram to work with LINSTOR. It could be useful to refer to though if you are trying to troubleshoot attributes that you have set on LINSTOR objects and their inheritance and effects on other LINSTOR objects in your cluster.

Administering LINSTOR

2. Basic Administrative Tasks and System Setup

This is a how-to style chapter that covers basic LINSTOR® administrative tasks, including installing LINSTOR and how to get started using LINSTOR.

2.1. Before Installing LINSTOR

Before you install LINSTOR, there are a few things that you should be aware of that might affect how you install LINSTOR.

2.1.1. Packages

LINSTOR is packaged in both the RPM and the DEB variants:

-

linstor-clientcontains the command line client program. It depends on Python which is usually already installed. In RHEL 8 systems you will need to symlinkpython. -

linstor-controllerandlinstor-satelliteBoth contain systemd unit files for the services. They depend on Java runtime environment (JRE) version 1.8 (headless) or higher.

For further details about these packages see the Installable Components section above.

| If you have a LINBIT® support subscription, you will have access to certified binaries through LINBIT customer-only repositories. |

2.1.2. FIPS Compliance

This standard shall be used in designing and implementing cryptographic modules…

You can configure LINSTOR to encrypt storage volumes, by using LUKS (dm-crypt), as detailed in

the Encrypted Volumes section of this user guide. Refer to

the LUKS and dm-crypt projects for FIPS compliance status.

You can also configure LINSTOR to encrypt communication traffic between a LINSTOR satellite and a LINSTOR controller, by using SSL/TLS, as detailed in the Secure Satellite Connections section of this user guide.

LINSTOR can also interface with Self-Encrypting Drives (SEDs) and you can use LINSTOR to initialize an SED drive. LINSTOR stores the drive’s password as a property that applies to the storage pool associated with the drive. LINSTOR encrypts the SED drive password by using the LINSTOR master passphrase that you must create first.

By default, LINSTOR uses the following cryptographic algorithms:

-

HMAC-SHA2-512

-

PBKDF2

-

AES-128

A FIPS compliant version of LINSTOR is available for the use cases mentioned in this section. If you or your organization require FIPS compliance at this level, contact [email protected] for details.

2.2. Installing LINSTOR

| If you want to use LINSTOR in containers, skip this section and use the Containers section below for the installation. |

2.2.1. Installing a Volume Manager

To use LINSTOR to create storage volumes, you will need to install a volume manager, either LVM or ZFS, if one is not already installed on your system.

2.2.2. Using a Script to Manage LINBIT Cluster Nodes

If you are a LINBIT® customer, you can download a LINBIT created helper script and run it on your nodes to:

-

Register a cluster node with LINBIT.

-

Join a node to an existing LINBIT cluster.

-

Enable LINBIT package repositories on your node.

Enabling LINBIT package repositories will give you access to LINBIT software packages, DRBD® kernel modules, and other related software such as cluster managers and OCF scripts. You can then use a package manager to fetch, install, and manage updating installed packages.

Downloading the LINBIT Manage Node Script

To register your cluster nodes with LINBIT, and configure LINBIT’s repositories, first download and then run the manage node helper script by entering the following commands on all cluster nodes:

# curl -O https://my.linbit.com/linbit-manage-node.py # chmod +x ./linbit-manage-node.py # ./linbit-manage-node.py

You must run the script as the root user.

|

The script will prompt you for your LINBIT customer portal username and password. After entering your credentials, the script will list cluster nodes associated with your account (none at first).

Enabling LINBIT Package Repositories

After you specify which cluster to register the node with, have the script write the

registration data to a JSON file when prompted. Next, the script will show you a list of LINBIT

repositories that you can enable or disable. You can find LINSTOR and other related packages

in the drbd-9 repository. In most cases, unless you have a need to be on a different DRBD

version branch, you should enable at least this repository.

Final Tasks Within Manage Nodes Script

After you have finished making your repositories selection, you can write the configuration to a file by following the script’s prompting. Next, be sure to answer yes to the question about installing LINBIT’s public signing key to your node’s keyring.

Before it closes, the script will show a message that suggests different packages that you can install for different use cases.

On DEB based systems you can install a precompiled DRBD kernel module package,

drbd-module-$(uname -r), or a source version of the kernel module, drbd-dkms. Install one

or the other package but not both.

|

2.2.3. Using a Package Manager to Install LINSTOR

After registering your node and enabling the drbd-9 LINBIT package repository, you can use a

DEB, RPM, or YaST2 based package manager to install LINSTOR and related components.

If you are using a DEB based package manager, refresh your package repositories list

by entering: apt update, before proceeding.

|

Installing DRBD Packages for Replicated LINSTOR Storage

| If you will be using LINSTOR without DRBD, you can skip installing these packages. |

If you want to be able to use LINSTOR to create DRBD replicated storage, you will need to install the required DRBD packages. Depending on the Linux distribution that you are running on your node, install the DRBD-related packages that the helper script suggested. If you need to review the script’s suggested packages and installation commands, you can enter:

# ./linbit-manage-node.py --hints

Installing LINSTOR Packages

To install LINSTOR on a controller node, use your package manager to install the

linbit-sds-controller package.

To install LINSTOR on a satellite node, use your package manager to install the

linbit-sds-satellite package.

Install both packages if your node will be both a satellite and controller (Combined role).

2.2.4. Installing LINSTOR from Source Code

The LINSTOR project’s GitHub page is here: https://github.com/LINBIT/linstor-server.

LINBIT also has downloadable archived files of source code for LINSTOR, DRBD, and more, available here: https://linbit.com/linbit-software-download-page-for-linstor-and-drbd-linux-driver/.

2.3. Upgrading LINSTOR

LINSTOR does not support rolling upgrades.

LINSTOR controller and satellite services running in your cluster must have the same version, otherwise the controller will discard the satellite with a VERSION_MISMATCH.

While this is a situation you should fix, it is not a risk for your data.

Satellite nodes will not do any actions so long as they are not connected to a controller node, and

because of LINSTOR data and control plane separation, this state does not disrupt DRBD data replication.

2.3.1. Backing up the controller database before upgrading

Before upgrading LINSTOR, you should back up the LINSTOR controller database.

If you are using the embedded default H2 database and you upgrade the linstor-controller package, an automatic backup file of the database will be created in the default /var/lib/linstor

directory. This file is a good restore point if a linstor-controller database

migration should fail. It is recommended to report the error to LINBIT and restore the old

database file and roll back to your previous controller version.

If you use any external database or etcd, make a manual backup of your current database, before upgrading LINSTOR. You can use this backup as a restore point if needed.

2.3.2. Upgrading LINSTOR on RPM-based Linux

On RPM-based Linux distributions, LINSTOR services are not automatically restarted when you upgrade LINSTOR packages. For this reason, the upgrading process does not entail as much downtime for your LINSTOR services (LINSTOR control plane) as it might on other systems.

On all nodes, update applicable LINSTOR packages. Modify the command below to only update packages applicable to each node’s role in your LINSTOR cluster:

dnf update -y linstor-client linstor-controller linstor-satellite

After updating LINSTOR packages on all nodes, as simultaneously as you can, restart the LINSTOR satellite service on all your satellite nodes, except for the actively running controller node if that node is in a combined role.

systemctl restart linstor-satellite

Next, restart the LINSTOR controller service on the node where the service is actively running. If that node is in a combined role, also update the LINSTOR satellite service, for example:

systemctl restart linstor-satellite linstor-controller

If you have made the LINSTOR controller service highly available in your cluster, you will need to update the linstor-controller package on all potential LINSTOR controller nodes.

However, you only need to restart the LINSTOR controller service on the node currently running the service.

|

2.3.3. Upgrading LINSTOR on DEB-based Linux

On DEB-based Linux systems, because LINSTOR services will restart automatically after installing an updated version, you will need to plan for more downtime of the LINSTOR control plane than on an RPM-based system.

First, upgrade the LINSTOR controller software on the active LINSTOR controller node.

apt install -y --only-upgrade linstor-controller

If you have made the LINSTOR controller service highly available in your cluster, you will need to update the linstor-controller package on all potential LINSTOR controller nodes.

|

Upgrading the software will automatically restart the controller service.

At this point, if you enter a linstor node list command, output will show that your satellite nodes are in an OFFLINE(VERSION MISMATCH) state.

Next, update the LINSTOR client and satellite packages on nodes where those packages are installed. Modify the command below to only update packages applicable to each node’s role in your LINSTOR cluster:

apt install -y --only-upgrade linstor-client linstor-satellite

Uprading the LINSTOR satellite software package on your satellite (and combined) nodes will automatically restart the LINSTOR satellite service on those nodes.

2.3.4. Verifying a LINSTOR upgrade

After upgrading all LINSTOR packages applicable to each node in your cluster, verify:

-

The output of a

linstor --versioncommand shows the expected LINSTOR client software version number on all nodes that you run the LINSTOR client on. -

The output of a

linstor controller versioncommand shows the expected LINSTOR controller software version number. If you have made the LINSTOR controller service highly available, you will need to use a package manager query (dnf info linstor-controllerorapt show linstor-controller) to verify the installed software version on the potential controller nodes. -

The output of a

linstor node listcommand shows all LINSTOR satellite nodes are in anOnlinestate. The LINSTOR controller service will log aversion mismatcherror message (journalctl -xeu linstor-controller) for any nodes where the installed LINSTOR satellite software version does not match the controller software version. The output of alinstor node listcommand will show such nodes as being in anOFFLINE(VERSION MISMATCH)state.

2.4. Containers

LINSTOR and related software are also available as containers. The base images are available

in LINBIT’s container registry, drbd.io.

| LINBIT’s container image repository (http://drbd.io) is only available to LINBIT customers or through LINBIT customer trial accounts. Contact LINBIT for information on pricing or to begin a trial. Alternatively, you can use LINSTOR SDS’ upstream project named Piraeus, without being a LINBIT customer. |

To access the images, you first have to login to the registry using your LINBIT Customer Portal credentials.

# docker login drbd.io

The containers available in this repository are:

-

drbd.io/drbd9-rhel8

-

drbd.io/drbd9-rhel7

-

drbd.io/drbd9-sles15sp1

-

drbd.io/drbd9-bionic

-

drbd.io/drbd9-focal

-

drbd.io/linstor-csi

-

drbd.io/linstor-controller

-

drbd.io/linstor-satellite

-

drbd.io/linstor-client

An up-to-date list of available images with versions can be retrieved by opening http://drbd.io

in your browser. Be sure to browse the image repository through HTTP, although the registry’s

images themselves are pulled through HTTPS, using the associated docker pull command.

To load the kernel module, needed only for LINSTOR satellites, you’ll need to run a

drbd9-$dist container in privileged mode. The kernel module containers either retrieve an

official LINBIT package from a customer repository, use shipped packages, or they try to build

the kernel modules from source. If you intend to build from source, you need to have the

according kernel headers (for example, kernel-devel) installed on the host. There are four

ways to execute such a module load container:

-

Building from shipped source

-

Using a shipped/pre-built kernel module

-

Specifying a LINBIT node hash and a distribution.

-

Bind-mounting an existing repository configuration.

Example building from shipped source (RHEL based):

# docker run -it --rm --privileged -v /lib/modules:/lib/modules \ -v /usr/src:/usr/src:ro \ drbd.io/drbd9-rhel7

Example using a module shipped with the container, which is enabled by not bind-mounting

/usr/src:

# docker run -it --rm --privileged -v /lib/modules:/lib/modules \ drbd.io/drbd9-rhel8

Example using a hash and a distribution (rarely used):

# docker run -it --rm --privileged -v /lib/modules:/lib/modules \ -e LB_DIST=rhel7.7 -e LB_HASH=ThisIsMyNodeHash \ drbd.io/drbd9-rhel7

Example using an existing repository configuration (rarely used):

# docker run -it --rm --privileged -v /lib/modules:/lib/modules \ -v /etc/yum.repos.d/linbit.repo:/etc/yum.repos.d/linbit.repo:ro \ drbd.io/drbd9-rhel7

| In both cases (hash + distribution, and bind-mounting a repository) the hash or repository configuration has to be from a node that has a special property set. Contact LINBIT customer support for help setting this property. |

For now (that is, pre DRBD 9 version “9.0.17”), you must use the containerized DRBD

kernel module, as opposed to loading a kernel module onto the host system. If you intend to use

the containers you should not install the DRBD kernel module on your host systems. For DRBD

version 9.0.17 or greater, you can install the kernel module as usual on the host system, but

you need to load the module with the usermode_helper=disabled parameter (for example,

modprobe drbd usermode_helper=disabled).

|

Then run the LINSTOR satellite container, also privileged, as a daemon:

# docker run -d --name=linstor-satellite --net=host -v /dev:/dev \ --privileged drbd.io/linstor-satellite

net=host is required for the containerized drbd-utils to be able to communicate with

the host-kernel through Netlink.

|

To run the LINSTOR controller container as a daemon, mapping TCP port 3370 on the host to the

container, enter the following command:

# docker run -d --name=linstor-controller -p 3370:3370 drbd.io/linstor-controller

To interact with the containerized LINSTOR cluster, you can either use a LINSTOR client installed on a system using repository packages, or using the containerized LINSTOR client. To use the LINSTOR client container:

# docker run -it --rm -e LS_CONTROLLERS=<controller-host-IP-address> \ drbd.io/linstor-client node list

From this point you would use the LINSTOR client to initialize your cluster and begin creating resources using the typical LINSTOR patterns.

To stop and remove a daemonized container and image:

# docker stop linstor-controller # docker rm linstor-controller

2.5. Initializing Your Cluster

Before initializing your LINSTOR cluster, you must meet the following prerequisites on all cluster nodes:

-

The DRBD 9 kernel module is installed and loaded.

-

The

drbd-utilspackage is installed. -

LVMtools are installed. -

linstor-controllerorlinstor-satellitepackages and their dependencies are installed on appropriate nodes. -

The

linstor-clientis installed on thelinstor-controllernode.

Enable and also start the linstor-controller service on the host where it has been installed:

# systemctl enable --now linstor-controller

2.6. Using the LINSTOR Client

Whenever you run the LINSTOR command line client, it needs to know on which cluster node the

linstor-controller service is running. If you do not specify this, the client will try to

reach a locally running linstor-controller service listening on IP address 127.0.0.1 port

3370. Therefore use the linstor-client on the same host as the linstor-controller.

The linstor-satellite service requires TCP ports 3366 and 3367. The

linstor-controller service requires TCP port 3370. Verify that you have this port allowed on

your firewall.

|

# linstor node list

Output from this command should show you an empty list and not an error message.

You can use the linstor command on any other machine, but then you need to tell the client how

to find the LINSTOR controller. As shown, this can be specified as a command line option, or by

using an environment variable:

# linstor --controllers=alice node list # LS_CONTROLLERS=alice linstor node list

If you have configured HTTPS access to the LINSTOR controller REST API and you want the LINSTOR client to access the controller over HTTPS, then you need to use the following syntax:

# linstor --controllers linstor+ssl://<controller-node-name-or-ip-address> # LS_CONTROLLERS=linstor+ssl://<controller-node-name-or-ip-address> linstor node list

2.6.1. Specifying Controllers in the LINSTOR Configuration File

Alternatively, you can create the /etc/linstor/linstor-client.conf file and add a

controllers= line in the global section.

[global] controllers=alice

If you have multiple LINSTOR controllers configured you can simply specify them all in a comma-separated list. The LINSTOR client will try them in the order listed.

2.6.2. Using LINSTOR Client Abbreviated Notation

You can use LINSTOR client commands in a much faster and convenient way by only entering the

starting letters of the commands, subcommands, or parameters. For example, rather than entering

linstor node list you can enter the LINSTOR short notation command linstor n l.

Entering the command linstor commands will show a list of possible LINSTOR client commands

along with the abbreviated notation for each command. You can use the --help flag with any of

these LINSTOR client commands to get the abbreviated notation for the command’s subcommands.

2.7. Adding Nodes to Your Cluster

After initializing your LINSTOR cluster, the next step is to add nodes to the cluster.

# linstor node create bravo 10.43.70.3

If you omit the IP address, the LINSTOR client will try to resolve the specified node name,

bravo in the preceding example, as a hostname. If the hostname does not resolve to a host on

the network from the system where the LINSTOR controller service is running, then LINSTOR will

show an error message when you try to create the node:

Unable to resolve ip address for 'bravo': [Errno -3] Temporary failure in name resolution

2.8. Listing Nodes in Your Cluster

You can list the nodes that you have added to your LINSTOR cluster by entering the following command:

# linstor node list

Output from the command will show a table listing the nodes in your LINSTOR cluster, along with information such as the node type assigned to the node, the node’s IP address and port used for LINSTOR communication, and the node’s state.

╭────────────────────────────────────────────────────────────╮ ┊ Node ┊ NodeType ┊ Addresses ┊ State ┊ ╞════════════════════════════════════════════════════════════╡ ┊ bravo ┊ SATELLITE ┊ 10.43.70.3:3366 (PLAIN) ┊ Offline ┊ ╰────────────────────────────────────────────────────────────╯

2.8.1. Naming LINSTOR Nodes

If you specify an IP address when you create a LINSTOR node, you can give your node an arbitrary name. The LINSTOR client will show an INFO message about this when you create the node:

[...] 'arbitrary-name' and hostname 'node-1' doesn't match.

LINSTOR will automatically detect the created node’s local uname --nodename which will be

later used for DRBD resource configurations, rather than the arbitrary node name. To avoid

confusing yourself and possibly others, in most cases, it would make sense to just use a node’s

hostname when creating a LINSTOR node.

2.8.2. Starting and Enabling a LINSTOR Satellite Node

When you use linstor node list LINSTOR will show that the new node is marked as offline. Now

start and enable the LINSTOR satellite service on the new node so that the service comes up on

reboot as well:

# systemctl enable --now linstor-satellite

About 10 seconds later you will see the status in linstor node list as online. Of course

the satellite process might be started before the controller knows about the existence of the

satellite node.

In case the node which hosts your controller should also contribute storage to the LINSTOR

cluster, you have to add it as a node and also start the linstor-satellite service.

|

If you want to have other services wait until the LINSTOR satellite service had a chance to

create the necessary devices (that is, after a boot), you can update the corresponding

.service file and change Type=simple to Type=notify.

This will cause the satellite to delay sending the READY=1 message to systemd until the

controller connects, sends all required data to the satellite and the satellite at least tried

once to get the devices up and running.

2.8.3. Specifying LINSTOR Node Types

When you create a LINSTOR node, you can also specify a node type. Node type is a label that

indicates the role that the node serves within your LINSTOR cluster. Node type can be one of

controller, auxiliary, combined, or satellite. For example to create a LINSTOR node and

label it as a controller and a satellite node, enter the following command:

# linstor node create bravo 10.43.70.3 --node-type combined

The --node-type argument is optional. If you do not specify a node type when you create a

node, LINSTOR will use a default type of satellite.

If you want to change a LINSTOR node’s assigned type after creating the node, you can enter a

linstor node modify --node-type command.

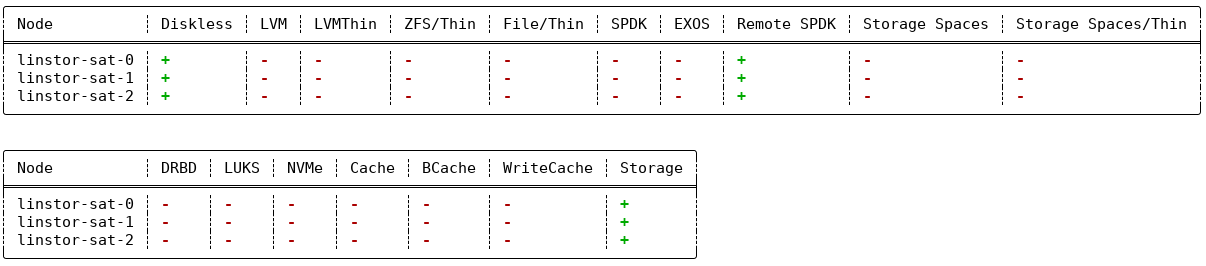

2.8.4. Listing Supported Storage Providers and Storage Layers

Before you use LINSTOR to create storage objects in your cluster, or in case you are in a

troubleshooting situation, you can list the storage providers and storage layers that are

supported on the satellite nodes in your cluster. To do this, you can use the node info

command.

# linstor node info

Output from the command will show two tables. The first table will show the available

LINSTOR storage provider back ends. The second table will show the

available LINSTOR storage layers. Both tables will indicate whether a

given node supports the storage provider or storage layer. A plus sign, +, indicate “is

supported”. A minus sign, -, indicates “is not supported”.

Example output for a cluster might be similar to the following:

linstor node info command2.9. Storage Pools

StoragePools identify storage in the context of LINSTOR. To group storage pools from multiple nodes, simply use the same name on each node. For example, one valid approach is to give all SSDs one name and all HDDs another.

2.9.1. Creating Storage Pools

On each host contributing storage, you need to create either an LVM volume group (VG) or a ZFS zPool. The VGs and zPools identified with one LINSTOR storage pool name might have different VG or zPool names on the hosts, but do yourself a favor, for coherency, use the same VG or zPool name on all nodes.

|

When using LINSTOR together with LVM and DRBD, set the global_filter = [ "r|^/dev/drbd|" ] This setting tells LVM to reject DRBD devices from operations such as scanning or opening attempts. In some cases, not setting this filter might lead to increased CPU load or stuck LVM operations. |

# vgcreate vg_ssd /dev/nvme0n1 /dev/nvme1n1 [...]

After creating a volume group on each of your nodes, you can create a storage pool that is backed by the volume group on each of your nodes, by entering the following commands:

# linstor storage-pool create lvm alpha pool_ssd vg_ssd # linstor storage-pool create lvm bravo pool_ssd vg_ssd

To list your storage pools you can enter:

# linstor storage-pool list

or using LINSTOR abbreviated notation:

# linstor sp l

2.9.2. Using Storage Pools To Confine Failure Domains to a Single Back-end Device

In clusters where you have only one kind of storage and the capability to hot swap storage devices, you might choose a model where you create one storage pool per physical backing device. The advantage of this model is to confine failure domains to a single storage device.

2.9.3. Sharing Storage Pools with Multiple Nodes

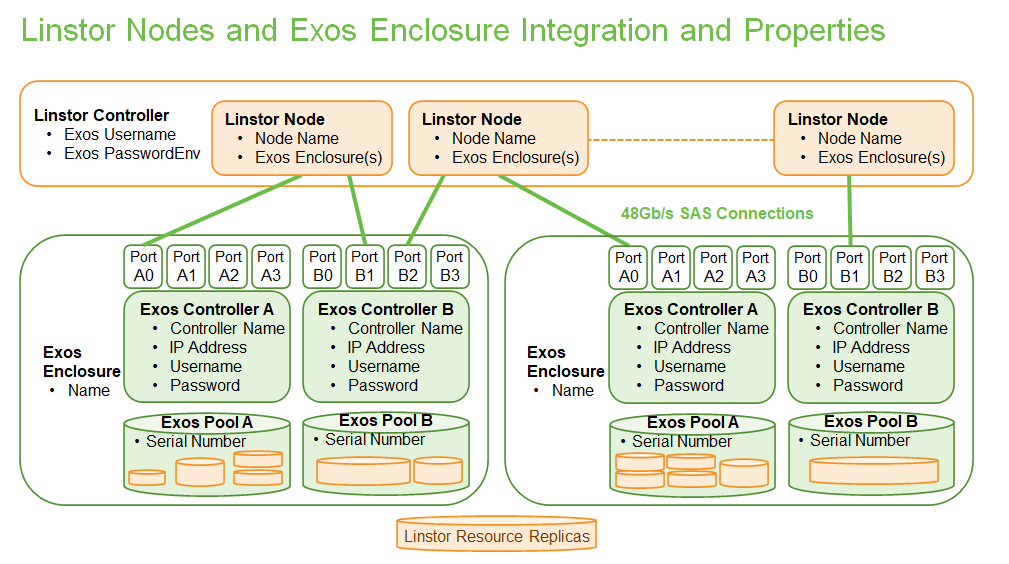

Both the Exos and LVM2 storage providers offer the option of multiple server nodes directly

connected to the storage array and drives. You can use the

LINSTOR storage-pool create command option --shared-space when configuring a LINSTOR pool to

use the same LVM2 volume group accessible by two or more nodes.

| Just as you should not modify or create any logical volume in a volume group that is used as the back end for a LINSTOR storage pool, it is even more important not to do these actions with shared volume groups. Calling any LVM command, for example, from an interactive shell, on a shared volume group, where the coordination of LVM commands is done by LINSTOR, can output inconsistent information or even destroy LVM metadata. Automatic activation of LVM logical volumes, for example, at boot time, also falls under this warning. Do not do these things. Use LINSTOR to manage LVM logical volumes through abstracted LINSTOR objects in these cases. |

The following example shows

using the LVM2 volume group UUID as the shared space identifier for a storage pool accessible by

nodes alpha and bravo:

# linstor storage-pool create lvm --external-locking \ --shared-space O1btSy-UO1n-lOAo-4umW-ETZM-sxQD-qT4V87 \ alpha pool_ssd shared_vg_ssd # linstor storage-pool create lvm --external-locking \ --shared-space O1btSy-UO1n-lOAo-4umW-ETZM-sxQD-qT4V87 \ bravo pool_ssd shared_vg_ssd

This example also uses the --external-locking option. By using this option, you tell LINSTOR

not to use its internal locking mechanism for shared storage pools but rather use LVM’s

mechanism in this example.

With LVM2, the external locking service (lvmlockd) manages volume groups created

with the vgcreate command’s --shared option. To use the LINSTOR --external-locking option, you

need to have specified the LVM --shared option when entering a vgcreate command to create the

LVM volume group that backs the LINSTOR storage pool.

|

You can omit both the --shared option for vgcreate and the --external-locking option for

linstor storage-pool create. If you do this, LINSTOR will use its own mechanism to take care

that only one node in the same --shared-space grouping will use LVM. Using LINSTOR’s internal

mechanism to lock LVM use will not however limit the access to single volumes. Neither LINSTOR’s

nor LVM’s locking mechanism, for example, will limit DRBD I/O.

Exos pools will use the Exos pool serial number by default for the --shared-space identifier.

As of the release of linstor-server v1.26.0, the Exos integration for LINSTOR is

deprecated.

|

2.9.4. Creating Storage Pools by Using the Physical Storage Command

Since linstor-server 1.5.2 and a recent linstor-client, LINSTOR can create LVM/ZFS pools on

a satellite for you. The LINSTOR client has the following commands to list possible disks and

create storage pools, but such LVM/ZFS pools are not managed by LINSTOR and there is no delete

command, so such action must be done manually on the nodes.

# linstor physical-storage list

Will give you a list of available disks grouped by size and rotational(SSD/Magnetic Disk).

It will only show disks that pass the following filters:

-

The device size must be greater than 1GiB.

-

The device is a root device (not having children), for example,

/dev/vda,/dev/sda. -

The device does not have any file system or other

blkidmarker (wipefs -amight be needed). -

The device is not a DRBD device.

With the create-device-pool command you can create a LVM pool on a disk and also directly

add it as a storage pool in LINSTOR.

# linstor physical-storage create-device-pool --pool-name lv_my_pool \ LVMTHIN node_alpha /dev/vdc --storage-pool newpool

If the --storage-pool option was provided, LINSTOR will create a storage pool with the given

name.

For more options and exact command usage refer to the LINSTOR client --help text.

2.9.5. Mixing Storage Pools

With some setup and configuration, you can use storage pools of different storage provider types to back a LINSTOR resource. This is called storage pool mixing. For example, you might have a storage pool on one node that uses an LVM thick-provisioned volume while on another node you have a storage pool that uses a thin-provisioned ZFS zpool.

Because most LINSTOR deployments will use homogenous storage pools to back resources, storage pool mixing is only mentioned here so that you know that the feature exists. It might be a useful feature when migrating storage resources, for example. You can find further details about this, including prerequisites, in Mixing Storage Pools of Different Storage Providers.

2.10. Using Resource Groups to Deploy LINSTOR Provisioned Volumes

Using resource groups to define how you want your resources provisioned should be considered the de facto method for deploying volumes provisioned by LINSTOR. Chapters that follow which describe creating each resource from a resource definition and volume definition should only be used in special scenarios.

| Even if you choose not to create and use resource groups in your LINSTOR cluster, all resources created from resource definitions and volume definitions will exist in the ‘DfltRscGrp’ resource group. |

A simple pattern for deploying resources using resource groups would look like this:

# linstor resource-group create my_ssd_group --storage-pool pool_ssd --place-count 2 # linstor volume-group create my_ssd_group # linstor resource-group spawn-resources my_ssd_group my_ssd_res 20G

The commands above would result in a resource named ‘my_ssd_res’ with a 20GB volume replicated twice being automatically provisioned from nodes who participate in the storage pool named ‘pool_ssd’.

A more useful pattern could be to create a resource group with settings you’ve determined are optimal for your use case. Perhaps you have to run nightly online verifications of your volumes’ consistency, in that case, you could create a resource group with the ‘verify-alg’ of your choice already set so that resources spawned from the group are pre-configured with ‘verify-alg’ set:

# linstor resource-group create my_verify_group --storage-pool pool_ssd --place-count 2

# linstor resource-group drbd-options --verify-alg crc32c my_verify_group

# linstor volume-group create my_verify_group

# for i in {00..19}; do

linstor resource-group spawn-resources my_verify_group res$i 10G

done

The commands above result in twenty 10GiB resources being created each with the crc32c

verify-alg pre-configured.

You can tune the settings of individual resources or volumes spawned from resource groups by

setting options on the respective resource definition or volume definition LINSTOR objects. For

example, if res11 from the preceding example is used by a very active database receiving many

small random writes, you might want to increase the al-extents for that specific resource:

# linstor resource-definition drbd-options --al-extents 6007 res11

If you configure a setting in a resource definition that is already configured on the

resource group it was spawned from, the value set in the resource definition will override

the value set on the parent resource group. For example, if the same res11 was required to

use the slower but more secure sha256 hash algorithm in its verifications, setting the

verify-alg on the resource definition for res11 would override the value set on the

resource group:

# linstor resource-definition drbd-options --verify-alg sha256 res11

| A guiding rule for the hierarchy in which settings are inherited is that the value “closer” to the resource or volume wins. Volume definition settings take precedence over volume group settings, and resource definition settings take precedence over resource group settings. |

2.12. Creating and Deploying Resources and Volumes

You can use the LINSTOR create command to create various LINSTOR objects, such as resource

definitions, volume definitions, and resources. Some of these commands are shown below.

In the following example scenario, assume that you have a goal of creating a resource named

backups with a size of 500GiB that is replicated among three cluster nodes.

First, create a new resource definition:

# linstor resource-definition create backups

Second, create a new volume definition within that resource definition:

# linstor volume-definition create backups 500G

If you want to resize (grow or shrink) the volume definition you can do that by specifying a new

size with the set-size command:

# linstor volume-definition set-size backups 0 100G

| Since version 1.8.0, LINSTOR supports shrinking volume definition size, even for deployed resources, if the resource’s storage layers support it. Use caution when shrinking volume definition sizes for resources with data. Data loss can occur if you do not take cautionary measures such as making backups and shrinking the file system on top of the volume first. |

The parameter 0 is the number of the volume in the resource backups. You have to provide

this parameter because resources can have multiple volumes that are identified by a so-called

volume number. You can find this number by listing the volume definitions (linstor vd l). The

list table will show volume numbers for the listed resources.

So far you have only created definition objects in LINSTOR’s database. However, not a single logical volume (LV) has been created on the satellite nodes. Now you have the choice of delegating the task of deploying resources to LINSTOR or else doing it yourself.

2.12.1. Manually Placing Resources

With the resource create command you can assign a resource definition to named nodes

explicitly.

# linstor resource create alpha backups --storage-pool pool_hdd # linstor resource create bravo backups --storage-pool pool_hdd # linstor resource create charlie backups --storage-pool pool_hdd

2.12.2. Automatically Placing Resources

When you create (spawn) a resource from a resource group, it is possible to have LINSTOR automatically select nodes and storage pools to deploy the resource to. You can use the arguments mentioned in this section to specify constraints when you create or modify a resource group. These constraints will affect how LINSTOR automatically places resources that are deployed from the resource group.

Automatically Maintaining Resource Group Placement Count

Starting with LINSTOR version 1.26.0, there is a reoccurring LINSTOR task that tries to maintain the placement count set on a resource group for all deployed LINSTOR resources that belong to that resource group. This includes the default LINSTOR resource group and its placement count.

If you want to disable this behavior, set the BalanceResourcesEnabled property to false on the

the LINSTOR controller, LINSTOR resource groups that your resources belong to, or the resource

definitions themselves. Due to LINSTOR object hierarchy, if you set a property on a resource

group, it will override the property value on the LINSTOR controller. Likewise, if you set a

property on a resource definition, it will override the property value on the resource group

that the resource definition belongs to.

There are other additional properties related to this feature that you can set on the LINSTOR controller:

BalanceResourcesInterval-

The interval in seconds, at the LINSTOR controller level, that the balance resource placement task is triggered. By default, the interval is 3600 seconds (one hour).

BalanceResourcesGracePeriod-

The period in seconds for how long new resources (after being created or spawned) are ignored for balancing. By default, the grace period is 3600 seconds (one hour).

Placement Count

By using the --place-count <replica_count> argument when you create or modify a resource

group, you can specify on how many nodes in your cluster LINSTOR should place diskful resources

created from the resource group.

|

Creating a resource group with impossible placement constraints

You can create or modify a resource group and specify a placement count or other constraint that

would be impossible for LINSTOR to fulfill. For example, you could specify a placement count of

ERROR:

Description:

Not enough available nodes

[...]

|

Storage Pool Placement

In the following example, the value after the --place-count option tells LINSTOR how many

replicas you want to have. The --storage-pool option should be obvious.

# linstor resource-group create backups --place-count 3 --storage-pool pool_hdd

What might not be obvious is that you can omit the --storage-pool option. If you do this, then

LINSTOR can select a storage pool on its own when you create (spawn) resources from the resource

group. The selection follows these rules:

-

Ignore all nodes and storage pools the current user has no access to

-

Ignore all diskless storage pools

-

Ignore all storage pools not having enough free space

The remaining storage pools will be rated by different strategies.

MaxFreeSpace-

This strategy maps the rating 1:1 to the remaining free space of the storage pool. However, this strategy only considers the actually allocated space (in case of thin-provisioned storage pool this might grow with time without creating new resources)

MinReservedSpace-

Unlike the “MaxFreeSpace”, this strategy considers the reserved space. That is the space that a thin volume can grow to before reaching its limit. The sum of reserved spaces might exceed the storage pool’s capacity, which is as overprovisioning.

MinRscCount-

Simply the count of resources already deployed in a given storage pool

MaxThroughput-

For this strategy, the storage pool’s

Autoplacer/MaxThroughputproperty is the base of the score, or 0 if the property is not present. Every Volume deployed in the given storage pool will subtract its definedsys/fs/blkio_throttle_readandsys/fs/blkio_throttle_writeproperty- value from the storage pool’s max throughput. The resulting score might be negative.

The scores of the strategies will be normalized, weighted and summed up, where the scores of minimizing strategies will be converted first to allow an overall maximization of the resulting score.

You can configure the weights of the strategies to affect how LINSTOR selects a storage pool for resource placement when creating (spawning) resources for which you did not specify a storage pool. You do this by setting the following properties on the LINSTOR controller object. The weight can be an arbitrary decimal value.

linstor controller set-property Autoplacer/Weights/MaxFreeSpace <weight> linstor controller set-property Autoplacer/Weights/MinReservedSpace <weight> linstor controller set-property Autoplacer/Weights/MinRscCount <weight> linstor controller set-property Autoplacer/Weights/MaxThroughput <weight>

To keep the behavior of the Autoplacer compatible with previous LINSTOR versions, all

strategies have a default-weight of 0, except the MaxFreeSpace which has a weight of 1.

|

| Neither 0 nor a negative score will prevent a storage pool from getting selected. A storage pool with these scores will just be considered later. |

Finally, LINSTOR tries to find the best matching group of storage pools meeting all

requirements. This step also considers other auto-placement restrictions such as

--replicas-on-same, --replicas-on-different, --x-replicas-on-different, --do-not-place-with,

--do-not-place-with-regex, --layer-list, and --providers.

Avoiding Colocating Resources When Automatically Placing a Resource

The --do-not-place-with <resource_name_to_avoid> argument specifies that LINSTOR should try to

avoid placing a resource on nodes that already have the specified, resource_name_to_avoid

resource deployed.

By using the --do-not-place-with-regex <regular_expression> argument, you can specify that

LINSTOR should try to avoid placing a resource on nodes that already have a resource deployed

whose name matches the regular expression that you provide with the argument. In this way, you

can specify multiple resources to try to avoid placing your resource with.

Constraining Automatic Resource Placement by Using Auxiliary Node Properties

You can constrain automatic resource placement to place (or avoid placing) a resource with nodes having a specified auxiliary node property.

This ability can be useful in a few different scenarios. One scenario is if you are trying to constrain resource placement within Kubernetes environments that use LINSTOR managed storage. For example, you might set an auxiliary node property that corresponds to a Kubernetes label. Refer to the “replicasOnSame” section within the “LINSTOR Volumes in Kubernetes” LINSTOR User Guide chapter for more details about this use case.

You can also use the --x-replicas-on-different argument to constrain automatic resource placement in a way that can be useful when using LINSTOR to manage storage resources across data centers, for example, in a stretched cluster architecture.

Because this argument takes a different form than the --replicas-on-same and --replicas-on-different arguments, it is disussed later in its own section, Ensuring automatic resource placement on different nodes for disaster recovery.

The arguments, --replicas-on-same and --replicas-on-different expect the

name of a property within the Aux/ namespace.

The --x-replicas-on-different command option also considers a LINSTOR object “different”, where the auxiliary property is not set.

For example, in a 5-satellite node cluster where nodes 1-2 have an auxiliary property site value of dc1, nodes 3-4 have a site value of dc2, and node 5 has no auxiliary property set, there would be three “different” node groups.

|

The following example shows setting an auxiliary node property, testProperty, on three LINSTOR

satellite nodes. Next, you create a resource group, testRscGrp, with a placement count of two

and a constraint to place spawned resources on nodes that have a testProperty value of 1.

After creating a volume group, you can spawn a resource from the resource group. For simplicity,

output from the following commands is not shown.

# for i in {0,2}; do linstor node set-property --aux node-$i testProperty 1; done

# linstor node set-property --aux node-1 testProperty 0

# linstor resource-group create testRscGrp --place-count 2 --replicas-on-same testProperty=1

# linstor volume-group create testRscGrp

# linstor resource-group spawn-resources testRscGrp testResource 100M

You can verify the placement of the spawned resource by using the following command:

# linstor resource list

Output from the command will show a list of resources and on which nodes resources LINSTOR has placed the resources.

+-------------------------------------------------------------------------------------+ | ResourceName | Node | Port | Usage | Conns | State | CreatedOn | |=====================================================================================| | testResource | node-0 | 7000 | Unused | Ok | UpToDate | 2022-07-27 16:14:16 | | testResource | node-2 | 7000 | Unused | Ok | UpToDate | 2022-07-27 16:14:16 | +-------------------------------------------------------------------------------------+

Because of the --replicas-on-same constraint, LINSTOR did not place the spawned resource on

satellite node node-1, because the value of its auxiliary node property, testProperty was

0 and not 1.

You can verify the node properties of node-1, by using the list-properties command:

# linstor node list-properties node-1 +----------------------------+ | Key | Value | |============================| | Aux/testProperty | 0 | | CurStltConnName | default | | NodeUname | node-1 | +----------------------------+

Unsetting Autoplacement Properties

To unset an autoplacement property that you set on a resource group, you can use the following command syntax:

# linstor resource-group modify <resource-group-name> --<autoplacement-property>

Alternatively, you can follow the --<autoplacement-property> argument with an empty string, as in:

# linstor resource-group modify <resource-group-name> --<autoplacement-property> ''

For example, to unset the --replicas-on-same autoplacement property on the testRscGrp that was set in an earlier example, you could enter the following command:

# linstor resource-group modify testRscGrp --replicas-on-same

Ensuring automatic resource placement on different nodes for disaster recovery

By using the --x-replicas-on-different argument when creating or modifying various LINSTOR objects, you can ensure LINSTOR automatically places resources on different LINSTOR node groups, where different values for an auxiliary property represent different groups.

The argument takes the following form:

--x-replicas-on-different <auxiliary-property-name> <positive-integer>

The positive integer value is the maximum number of resource replicas that LINSTOR can place on nodes that have the same value for the specified auxiliary property.

The most common use case for this feature is ensuring that there is always at least two local resource replicas, for high availability, and one off-site resource replica, for disaster recovery.

For example, to label each node by its physical location, assume that you set an auxiliary property, site, as follows for a LINSTOR cluster in a stretched cluster architecture:

+------------------------------------------------------------------+ | Node | NodeType | Addresses | AuxProps | State | |==================================================================| | linstor-ctrl-0 | CONTROLLER | [...] | | Online | | linstor-sat-0 | SATELLITE | [...] | Aux/site=dc1 | Online | | linstor-sat-1 | SATELLITE | [...] | Aux/site=dc1 | Online | | linstor-sat-2 | SATELLITE | [...] | Aux/site=dc2 | Online | | linstor-sat-3 | SATELLITE | [...] | Aux/site=dc2 | Online | | linstor-sat-4 | SATELLITE | [...] | Aux/site=dc2 | Online | +------------------------------------------------------------------+

Further assume that you created a resource group myrg associated with the same LINSTOR storage pool on all five satellite nodes.

You can enter the following command to create a new resource, myres, and ensure that LINSTOR places two replicas on one site and a third replica at a different site:

linstor resource-group spawn \ --x-replicas-on-different site 2 \ --place-count 3 \ myrg myres 200G

After LINSTOR creates the resource, a linstor resource list --resource myres command might show output similar to this:

+-------------------------------------------------------------------------------------+ | ResourceName | Node | Layers | Usage | Conns | State | CreatedOn | |=====================================================================================| | myres | linstor-sat-0 | DRBD,STORAGE | Unused | Ok | UpToDate | [...] | | myres | linstor-sat-1 | DRBD,STORAGE | Unused | Ok | UpToDate | [...] | | myres | linstor-sat-2 | DRBD,STORAGE | Unused | Ok | UpToDate | [...] | +-------------------------------------------------------------------------------------+

When creating the myres resource, by specifying 2 for the maximum number of resource replicas LINSTOR can place on either dc1 or dc2 nodes, and also specifying a place count of 3, you ensure that LINSTOR will place three resource replicas: two resources together on a site, for local high availability, and a third replica off-site, for disaster recovery purposes.

Other combinations of --x-replicas-on-different and --place-count number values are possible.

However, you should make sensible choices.

If you specified, for example, --x-replicas-on-different site 1 and --place-count 3, when creating the myres resource given the example LINSTOR cluster shown earlier, LINSTOR would refuse to create the resource and would show a “Not enough available nodes” error message.

Specifying --x-replicas-on-different $X 1 has exactly the same placement outcome as --replicas-on-different $X.

|

Here, the node labeling (by using an auxiliary property), together with the constraint that LINSTOR should place at most one resource replica on nodes with different values for the auxiliary property, while also asking LINSTOR to place three resource replicas, means that you are asking for LINSTOR to do something impossible.

You also need to know that, as implemented, the --x-replicas-on-different command considers a LINSTOR object “different”, where the auxiliary property is not set.

This is also true for the behavior of the --replicas-on-different option.

For example, consider the following LINSTOR cluster:

+------------------------------------------------------------------+ | Node | NodeType | Addresses | AuxProps | State | |==================================================================| | linstor-ctrl-0 | CONTROLLER | [...] | | Online | | linstor-sat-0 | SATELLITE | [...] | Aux/site=dc1 | Online | | linstor-sat-1 | SATELLITE | [...] | Aux/site=dc1 | Online | | linstor-sat-2 | SATELLITE | [...] | Aux/site=dc2 | Online | | linstor-sat-3 | SATELLITE | [...] | Aux/site=dc2 | Online | | linstor-sat-4 | SATELLITE | [...] | Aux/site=dc2 | Online | | linstor-sat-5 | SATELLITE | [...] | | Online | | linstor-sat-6 | SATELLITE | [...] | | Online | +------------------------------------------------------------------+

If you were to create a resource, myres, by using the “impossible” command mentioned earlier, the command would this time succeed.

linstor resource-group spawn \ --x-replicas-on-different site 1 \ --place-count 3 \ myrg myres 200G

A successful resource placement that fulfills the specified constraints might be as follows:

+-------------------------------------------------------------------------------------+ | ResourceName | Node | Layers | Usage | Conns | State | CreatedOn | |=====================================================================================| | myres | linstor-sat-0 | DRBD,STORAGE | Unused | Ok | UpToDate | [...] | | myres | linstor-sat-2 | DRBD,STORAGE | Unused | Ok | UpToDate | [...] | | myres | linstor-sat-5 | DRBD,STORAGE | Unused | Ok | UpToDate | [...] | +-------------------------------------------------------------------------------------+

Because the site auxiliary property was not set on the linstor-sat-5 node, the node counted as belonging to a different group, for purposes of the --x-replicas-on-different constraint.

In this example cluster, there are three different node groups for the site auxiliary property: dc1, dc2, and a group where the auxiliary property is not set on some nodes.

For this reason, LINSTOR was able to place three resources, fulfilling the --place-count constraint, while also fulfilling the --x-replicas-on-different constraint.

Constraining Automatic Resource Placement by LINSTOR Layers or Storage Pool Providers

You can specify the --layer-list or --providers arguments, followed by a comma-separated

values (CSV) list of LINSTOR layers or storage pool providers, to influence where LINSTOR places

resources. The possible layers and storage pool providers that you can specify in your CSV

list can be shown by using the --help option with the --auto-place option. A CSV list of

layers would constrain automatic resource placement for a specified resource group to nodes that

have storage that conformed with your list. Consider the following command:

# linstor resource-group create my_luks_rg --place-count 3 --layer-list drbd,luks

Resources that you might later create (spawn) from this resource group would be deployed across three nodes having storage pools backed by a DRBD layer backed by a LUKS layer (and implicitly backed by a “storage” layer). The order of layers that you specify in your CSV list is “top-down”, where a layer on the left in the list is above a layer on its right.

The --providers argument can be used to constrain automatic resource placement to only storage

pools that match those in a specified CSV list. You can use this argument to have explicit

control over which storage pools will back your deployed resource. If for example, you had a

mixed environment of ZFS, LVM, and LVM_THIN storage pools in your cluster, by using the

--providers LVM,LVM_THIN argument, you can specify that a resource only gets backed by either

an LVM or LVM_THIN storage pool, when using the --place-count option.

The --providers argument’s CSV list does not specify an order of priority for the list

elements. Instead, LINSTOR will use factors like additional placement constraints,

available free space, and LINSTOR’s storage pool selection strategies that were previously

described, when placing a resource.

|

Automatically Placing Resources When Creating Them

While using resource groups to create templates from which you can create (spawn) resources from

is the standard way to create resources, you can also create resources directly by using the

resource create command. When you use this command, it is also possible to specify arguments

that affect how LINSTOR will place the resource in your storage cluster.

With the exception of the placement count argument, the arguments that you can specify when you

use the resource create command that affect where LINSTOR places the resource are the same as

those for the resource-group create command. Specifying an --auto-place <replica_count>

argument with a resource create command is the same as specifying a --place-count

<replica_count> argument with a resource-group create command.

Using Auto-place to Extend Existing Resource Deployments

Besides the argument name, there is another difference between the placement count argument for

the resource group and resource create commands. With the resource create command, you can

also specify a value of +1 with the --auto-place argument, if you want to extend existing

resource deployments.

By using this value, LINSTOR will create an additional replica, no matter

what the --place-count is configured for on the resource group that the resource

was created from.

For example, you can use an --auto-place +1 argument to deploy an additional replica of the

testResource resource used in a previous example. You will first need to set the auxiliary

node property, testProperty to 1 on node-1. Otherwise, LINSTOR will not be able to deploy

the replica because of the previously configured --replicas-on-same constraint. For

simplicity, not all output from the commands below is shown.