Introduction

This blog post examines the performance of DRBD® in diskless mode. DRBD 9.0 introduced a feature that is now used in nearly all LINSTOR®-integrated clusters: DRBD diskless attachment, also known as DRBD clients. With this feature, a DRBD node can take part in a resource without holding a local replica of its own. The client node simply reads from and writes to the storage of its peers over its DRBD network connections.
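On a client node, DRBD 9 reports the resource's local disk state as `Diskless`, while the peers that hold the data report `peer-disk:UpToDate`. In a hand-written DRBD 9 resource file, such a node is declared with `disk none;` in its `on` section. LINSTOR generates this configuration itself. The sketch below assumes the default human-readable `drbdadm status` layout and extracts the local `disk:` values from that output. The function names `local_disk_state` and `is_diskless` are hypothetical helpers and are not part of the DRBD tooling.

```shell
#!/usr/bin/env bash
# Sketch: check whether this node attaches a DRBD 9 resource disklessly.
# Assumes the default (non-JSON) output layout of `drbdadm status`.

# local_disk_state: read `drbdadm status` output on stdin and print each
# local "disk:" value, one per volume. Fields such as "peer-disk:" do not
# start with "disk:" and are therefore skipped.
local_disk_state() {
    awk '{ for (i = 1; i <= NF; i++) if ($i ~ /^disk:/) print substr($i, 6) }'
}

# is_diskless RES: succeed only if RES has at least one local volume and
# every local volume reports Diskless.
is_diskless() {
    local states
    states=$(drbdadm status "$1" | local_disk_state)
    [ -n "$states" ] && ! grep -qv '^Diskless$' <<< "$states"
}
```

For example, `is_diskless r0 && echo "diskless client"` would confirm the attachment mode on the client. Here `r0` is a placeholder resource name.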

Because DRBD communication is designed to be lightweight and efficient, the team thought it would be interesting to compare it with another popular solution for network-attached block storage: iSCSI. In both setups, the back-end storage is a single Intel Optane NVMe drive (375G model SSDPE21K375GA), and the network devices are 100Gb/s ConnectX-5 cards (MT27800).

For this test, I used systems with 16-core Intel® Xeon® Silver processors at 2.60GHz. The backing devices were 200G thick LVM volumes, which I tested locally at 456k IOPS and 1819MiB/s. That local baseline measured only sequential write throughput with a 4k block size.
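Every test in this post uses a fixed 4k block size, so IOPS and throughput are two views of the same measurement. Throughput in MiB/s equals IOPS multiplied by the block size in KiB, divided by 1024. By default, fio interprets `4k` as 4096 bytes. The two functions below are hypothetical conveniences for converting between IOPS and MiB/s figures. They use `awk` for the floating-point arithmetic because Bash arithmetic is integer-only.

```shell
#!/usr/bin/env bash
# Convert between IOPS and throughput at a fixed block size.
#   MiB/s = IOPS * block_size_KiB / 1024

# iops_to_mibs IOPS BLOCK_KIB: print throughput in MiB/s, one decimal place
iops_to_mibs() {
    awk -v iops="$1" -v bs="$2" 'BEGIN { printf "%.1f\n", iops * bs / 1024 }'
}

# mibs_to_iops MIBS BLOCK_KIB: print the equivalent IOPS, rounded to an integer
mibs_to_iops() {
    awk -v mibs="$1" -v bs="$2" 'BEGIN { printf "%.0f\n", mibs * 1024 / bs }'
}
```

For example, `iops_to_mibs 256000 4` prints `1000.0`, and `mibs_to_iops 1000 4` prints `256000`.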

Note that I did no additional tuning of the network or storage stack, and none of iSCSI or DRBD either. For this test, everything remained at the AlmaLinux 9.2 defaults on the “diskful” DRBD and iSCSI target node, and at the AlmaLinux 8.8 defaults on the DRBD client and iSCSI initiator node. I used DRBD 9.2.12 and targetcli 2.1.53.

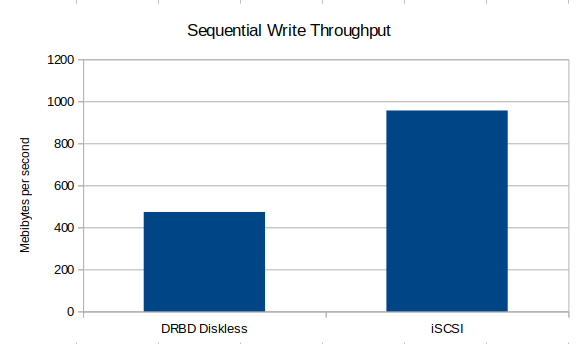

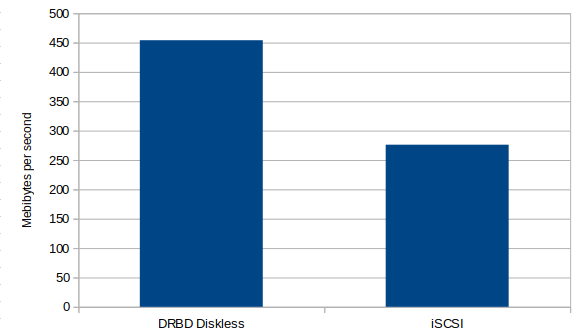

Sequential write performance

All testing consisted of three 30-second runs with the Flexible I/O tester (fio), and the reported results are the average of those three runs. For the sequential writes, I used the following command:

```shell
fio --readwrite=write --runtime=30s --blocksize=4k --ioengine=libaio \
    --numjobs=16 --iodepth=16 --direct=1 --filename=/dev/drbd10 \
    --name=test0 --group_reporting
```
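The repeat-and-average procedure can be scripted. This is a minimal sketch, not the exact tooling behind these results. It assumes that `fio` and `jq` are installed and requests JSON output from fio with `--output-format=json`. It then reads the aggregate IOPS that `--group_reporting` places in the first entry of the `jobs` array and averages the three runs. The function names `run_fio`, `bench`, and `average` are hypothetical. The device path is a parameter, so the same sketch can target `/dev/drbd10` on the DRBD client or the iSCSI disk on the initiator.

```shell
#!/usr/bin/env bash
# Sketch: repeat the fio job three times and average the reported IOPS.
# Assumes fio and jq are installed; PATTERN and DEVICE are supplied by the caller.

# average: read one number per line on stdin and print the mean, rounded
average() {
    awk '{ sum += $1; n++ } END { if (n > 0) printf "%.0f\n", sum / n }'
}

# run_fio PATTERN DEVICE: one 30 s run; print the aggregate IOPS
run_fio() {
    local pattern="$1" device="$2" key
    # fio's JSON output keeps read and write statistics under separate keys
    case "$pattern" in
        *read) key=read ;;
        *)     key=write ;;
    esac
    fio --readwrite="$pattern" --runtime=30s --blocksize=4k --ioengine=libaio \
        --numjobs=16 --iodepth=16 --direct=1 --filename="$device" \
        --name=test0 --group_reporting --output-format=json |
        jq ".jobs[0].${key}.iops"
}

# bench PATTERN DEVICE: three runs, then print the mean IOPS
bench() {
    for _ in 1 2 3; do
        run_fio "$1" "$2"
    done | average
}
```

For example, `bench write /dev/drbd10` would run the sequential write job three times and print the mean IOPS. Passing `randwrite` or `randread` covers the other two workloads.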

Note that iSCSI generally outperformed DRBD in these sequential write tests. The local system treats an iSCSI target as a SCSI device, so I/O to it passes through the SCSI disk's I/O scheduler, which can merge adjacent sequential writes into larger requests. This kind of merging is simply not available within DRBD.
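One way to check the merging explanation is to read the block layer's own counters. In `/sys/block/<dev>/stat`, the sixth field counts write requests that were merged with already-queued I/O. `/sys/block/<dev>/queue/scheduler` shows the active I/O scheduler, marked with brackets when several are available. The sketch below uses hypothetical function names and prints both values for a device. Sample it before and after a sequential write run on both paths: on the initiator's iSCSI disk (for example `sdb`, a placeholder name) and on `drbd10`. Comparing the counts shows whether merges actually occurred on each path.

```shell
#!/usr/bin/env bash
# Sketch: inspect the scheduler and the write-merge counter of a block device.

# write_merges: read one line in /sys/block/<dev>/stat format on stdin and
# print field 6, the number of write requests merged with queued I/O
write_merges() {
    awk '{ print $6 }'
}

# show_merge_info DEV: print the active scheduler and the write-merge count
# for DEV (a kernel device name such as drbd10, not a /dev path)
show_merge_info() {
    local dev="$1"
    echo "scheduler:    $(cat "/sys/block/$dev/queue/scheduler")"
    echo "write merges: $(write_merges < "/sys/block/$dev/stat")"
}
```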

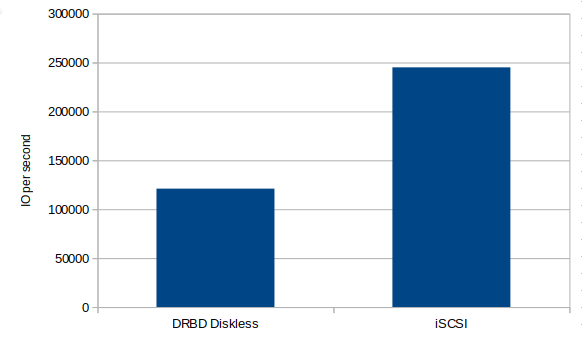

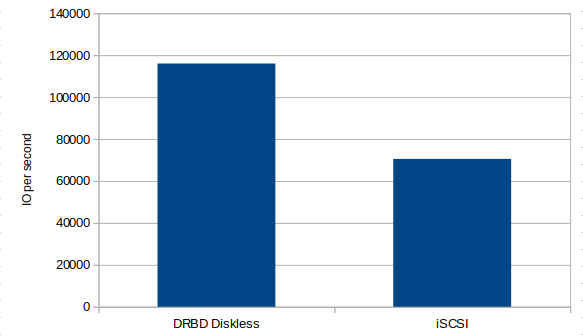

Random write performance

As with the sequential tests, I ran three 30-second tests with fio and averaged the individual results to get the numbers reported here. For the random write test, I used this command:

```shell
fio --readwrite=randwrite --runtime=30s --blocksize=4k --ioengine=libaio \
    --numjobs=16 --iodepth=16 --direct=1 --filename=/dev/drbd10 \
    --name=test0 --group_reporting
```
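Little's law gives a rough way to reason about the random-I/O results. Every fio invocation here keeps up to numjobs × iodepth I/Os in flight. If fio keeps that queue full, sustained IOPS is approximately the number of outstanding I/Os divided by the mean completion latency. At a fixed queue depth, any per-I/O overhead on the network path therefore shows up directly as lost IOPS. Random 4k I/O offers few adjacent requests to merge, so this cost is harder to hide than in the sequential test. The 1 ms latency below is an illustrative number, not a measurement from these tests.

```latex
\begin{aligned}
N &= \text{numjobs} \times \text{iodepth} = 16 \times 16 = 256 \\
\text{IOPS} &\approx \frac{N}{\bar{t}_{\mathrm{lat}}}
  \quad\text{e.g. } \bar{t}_{\mathrm{lat}} = 1\,\text{ms}
  \;\Rightarrow\; \text{IOPS} \approx \frac{256}{0.001\,\text{s}} = 256{,}000
\end{aligned}
```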

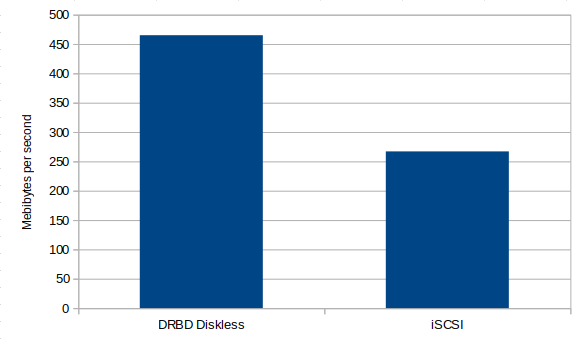

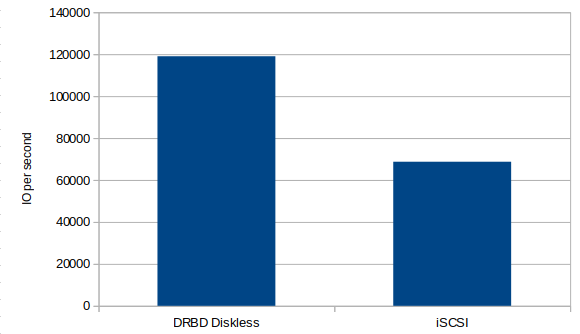

Random read performance

Testing random read performance was nearly identical to the other tests: I swapped randwrite for randread, ran three 30-second tests, and averaged the results.

```shell
fio --readwrite=randread --runtime=30s --blocksize=4k --ioengine=libaio \
    --numjobs=16 --iodepth=16 --direct=1 --filename=/dev/drbd10 \
    --name=test0 --group_reporting
```

The results largely speak for themselves. iSCSI dominates in sequential write performance, which I suspect is due to the SCSI I/O schedulers and write merges. DRBD's diskless mode, however, excels at random workloads. If you need block storage that performs well for applications with random I/O patterns, such as databases and message queues, the lightweight nature of DRBD's diskless mode might be exactly what you are looking for.