This article itemizes and explores LINSTOR® notable features for software-defined storage (SDS), high availability (HA), and disaster recovery (DR). The intent behind writing this article is to help orient someone who might be unfamiliar with LINSTOR. However, even someone familiar with LINSTOR might find it helpful for either review, promoting LINSTOR to others, or perhaps learning about a less explored or overlooked feature.

LINSTOR background

The LINBIT® team released the open source software-defined storage (SDS) management tool, LINSTOR, in 2016. The original intent was for LINSTOR to replace an existing tool for creating and managing DRBD® resource configuration files in Linux clusters. LINSTOR development steadily grew. LINBIT developers added features and drivers in both directions: south-bound features such as snapshots, LUKS, dm-cache, dm-writecache, or NVMe storage layers, and north-bound drivers for integrating directly with other platforms such as CloudStack, Kubernetes, OpenNebula, OpenShift, OpenStack, Oracle Linux Virtualization Manager, Proxmox VE, and others. You can use LINSTOR on bare metal, in a cloud or hybrid cloud, virtualized, or in containers.

Creating and managing DRBD-replicated block storage resources is not a strict requirement for using LINSTOR but it is still the most frequent use case. By using DRBD to replicate data in a cluster of nodes, you open doors for creating highly available storage. This is the basic building block for unlocking many of the technical benefits discussed in this article.

Some of the more notable LINSTOR features are listed below. Because LINSTOR has many features and benefits, the list items are linked to the sections that discuss them. You can jump around in the article and explore the features in the order that interests you.

LINSTOR notable features

- Performance: low CPU and memory consumption

- Separate control and data planes

- Your data remains yours

- Monitoring and alerting

- Snapshots and backup shipping for disaster recovery

- Diskless connections to storage resources

- Data locality

- Automatic storage provisioning within integrated platforms

- Flexible autoplacement options for storage resources

- Resource templating

- Support for stretched clustering

- API and libraries for customized deployments

Performance

Performance is a LINSTOR feature that has a few aspects. There is DRBD replication performance, there is performance related to the LINSTOR software itself, and there is the impact of running LINSTOR and DRBD software on the performance of other applications and services in your clusters.

LINSTOR CPU and memory consumption

LINSTOR and DRBD themselves consume very little CPU resources. One consideration though is that TCP/IP data replication over the network (via DRBD) does involve the CPU. Depending on the I/O related to DRBD replicated storage, and your network bandwidth, this can create CPU bottlenecks if not configured for properly. A less CPU intensive, although costlier in terms of equipment, alternative would be to use the DRBD RDMA transport protocol.

However, all that said, LINSTOR and DRBD have been shown to consume fewer CPU resources in independent testing, compared to other data replication technologies.

- “Comparing LINSTOR, Ceph, Mayastor, & Vitastor Storage Performance in Kubernetes”

- “Independent Performance Testing of DRBD by E4”

- “DRBD/LINSTOR vs Ceph vs StarWind VSAN: Proxmox HCI Performance Comparison” 1

Similar to its demands on CPU resources, LINSTOR memory consumption is also modest. You should plan to reserve a minimum of around 700MiB of memory for each running instance of the LINSTOR controller or satellite services. DRBD needs about 32MiB of memory per 1TiB of replicated storage. When considering operating system, user space, and buffer cache memory demands, a practical minimum amount of memory for production systems or systems with larger volumes is 64GB of RAM per storage node. If you are using LINSTOR and DRBD in a hyperconverged environment, consider significantly more (256-1024GB of memory) to comfortably accommodate application and platform workloads and DRBD memory requirements.

You can learn more about LINSTOR and DRBD hardware requirements and recommendations in a LINBIT knowledge base article on the topic, “LINSTOR and DRBD Hardware Considerations”.

Separate control and data planes

LINBIT developers designed LINSTOR with separate control and data planes. LINSTOR storage resources and their storage layers, such as DRBD devices and LVM or ZFS logical volumes, operate independently of LINSTOR services. After creating them by using LINSTOR, you could stop and disable LINSTOR running services, for example to do an upgrade, and your storage resources, and the applications that rely on them, would continue to work.

Your data remains yours

In plain speak, LINSTOR does not hijack your data when you use it to manage storage in your deployments. At all times, you can access and recover your data if you need to by using common tools familiar to Linux system administrators, independently of DRBD or LINSTOR working or running on your system.

DRBD replicates storage blocks that are most often on top of LVM or ZFS logical volumes. To do this, DRBD creates a virtual block device that stores some metadata at the end of the device and your data from the start of the device. If your underlying physical storage is healthy enough to read from the “your data” blocks, you always have access to your data in an unaltered form, just as it was written to the device and underlying physical storage. This is unlike some replication solutions that might use an algorithm to transform your data before storing it.

Monitoring and alerting

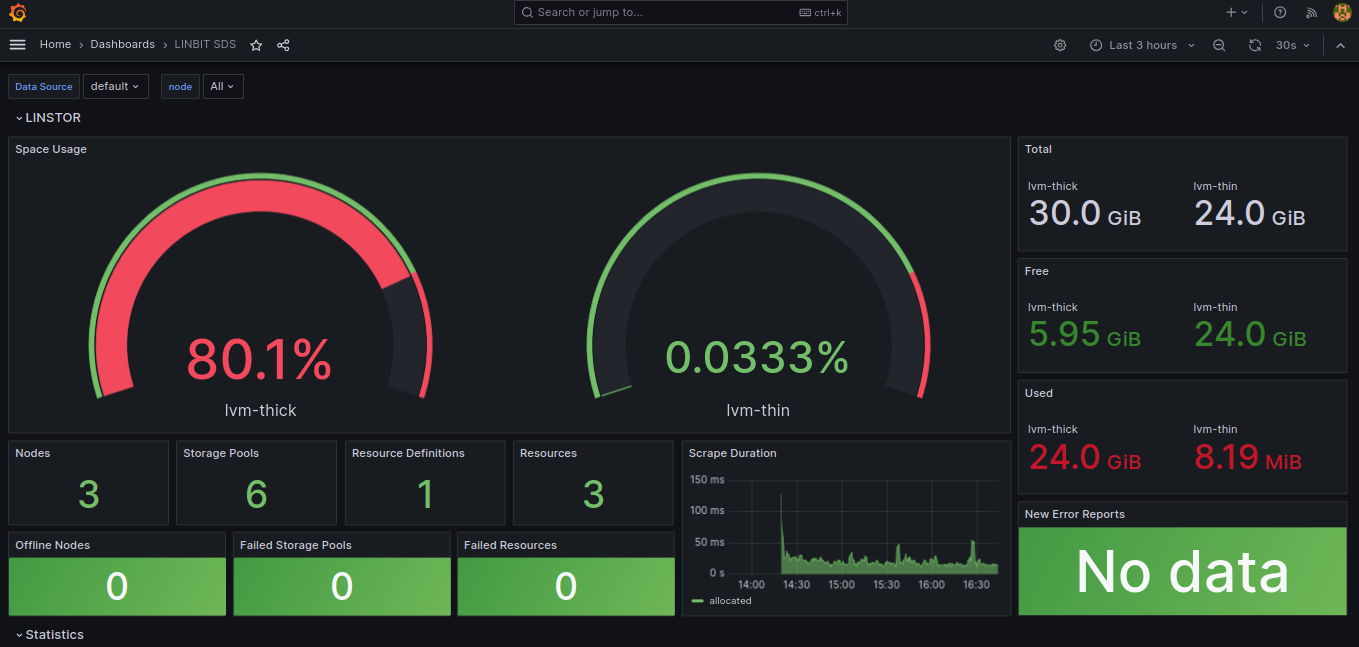

LINSTOR has Prometheus endpoints and alerts for LINSTOR and DRBD states. You can use these to create alerting rules or Grafana dashboards to help monitor your deployment and resources so that you and your team can react when needed.

To make DRBD monitoring endpoints more easily accessible, you can use another LINBIT open source software, DRBD Reactor, to expose them through its Prometheus plugin. If you are following LINSTOR best practices and have made the LINSTOR controller service highly available in your cluster, DRBD Reactor will already be there for you to use for exporting Prometheus metrics, at least on nodes that can potentially run the LINSTOR controller service. For monitoring by using Prometheus metrics, you would also need to install and configure DRBD Reactor on any LINSTOR satellite nodes, if these are not also potential LINSTOR controller nodes. A LINBIT blog article, “Monitoring Clusters Using Prometheus & DRBD Reactor”, can tell you more on the topic of monitoring with LINBIT software.

Snapshots and backup shipping for disaster recovery

If you use LINSTOR to manage storage volumes created on top of thin-provisioned LVM or ZFS logical volumes, then you can take advantage of LINSTOR snapshotting features. Snapshots are point-in-time copies of the data in storage volumes. Snapshots can either be a full copy of data or else just the data differences since the last snapshot.

By default, snapshots are stored locally and consume storage pool space. You can use local snapshots as backups, in case you need to restore your data to a known good point-in-time. By using LINSTOR, you can also ship these snapshots to another LINSTOR cluster or to S3 compatible storage such as Amazon S3, MinIO, Storj, or others. Snapshotting and snapshot shipping can be done either manually or automatically, on a schedule.

Diskless connections to storage resources

LINSTOR supports diskless connections to storage resources. Diskless connections are a means by which applications and services can connect to storage over a network connection to a cluster node, without the need for the data to exist physically on that node. Diskless connections have many uses.

LINSTOR can use a diskless node to act as a tiebreaker for DRBD quorum purposes. DRBD quorum is a feature that helps prevent data divergence, so called split-brains, in clusters. By using the diskless connections feature, a node acting solely in a tiebreaker role only needs a network connection to the other nodes and a minimum amount of CPU and storage to run an operating system and the LINSTOR satellite service. This can help reduce your deployment costs.

You might also benefit from LINSTOR diskless connections when compared to other I/O over the network technologies, such as iSCSI, for certain I/O patterns. An article on the LINBIT blog, “DRBD Client (Diskless Mode) Performance” explores this topic in more detail.

Data locality

By using LINSTOR, an administrator can provision storage volumes on local disks, assign diskless connections, or create iSCSI, NFS, or NVMe-oF targets by using LINSTOR Gateway for even more ways to provision and attach storage in a cluster. LINSTOR can help system administrators with data locality by combining these provisioning options with other LINSTOR features, such as resource templating, and flexible autoplacement options.

LINSTOR conceptual objects such as nodes, storage pools, resources groups, along with object labeling, help an administrator create logical groupings such as racks, clusters, data centers, fast storage, slow storage, and others. By using these features and options, an administrator can ensure that data is as close to the applications and services that might rely on it as possible.

Flexible autoplacement options for storage resources

When you use LINSTOR to create and manage data storage resources in a cluster of nodes, you can use the LINSTOR autoplacement feature to help distribute these resources in a sensible way. LINSTOR has an autoplacement feature that by default, will first try to place new resources on nodes that have less consumed storage than other nodes. You also have the flexibility to change or define autoplacement settings, such as affinities and anti-affinities. You can label LINSTOR nodes and then constrain LINSTOR autoplacement by setting placement affinities, such as “prefer to place new resources on nodes with label x”, or anti-affinities, such as “prefer not to place new resources on nodes with label y”. Besides using node labels, you can also constrain where LINSTOR places storage resources based on other already placed resources. Similar to setting constraints based on node labeling, you can configure LINSTOR to prefer not to place new resources on nodes with existing resources that you specify, either by name or by a matching regular expression.

Thanks to the LINSTOR object-oriented design, you can also take advantage of LINSTOR templating and set autoplacement options and constraints on either a per resource basis or by using resource groups. Any resource created from a resource group will inherit properties set on the group. You can learn more about LINSTOR autoplacement topics in the LINSTOR User Guide.

Resource templating

As mentioned in the section describing LINSTOR autoplacement options, you can use LINSTOR resource groups to define templates from which you can then create many resources of the same type, either manually, or automatically through a platform integration, for example. Some LINSTOR users use LINSTOR to deploy thousands of storage resources across hundreds of nodes. Doing this is easier by using LINSTOR resource groups and defining autoplacement strategies.

Automatic storage provisioning within integrated platforms

There are native LINSTOR integrations for many cloud, virtualization, and container management platforms. Platforms that you can integrate LINSTOR with include: CloudStack, Incus, Kubernetes, OpenNebula, OpenShift, OpenStack, Proxmox VE, Xen Orchestrator, and others. By using platform integrations and configuring LINSTOR storage pools, LINSTOR automatically provisions storage for you within these platforms, as you need it, using workflows native to each platform.

Abstracting storage behind the scenes with LINSTOR gives you the flexibility to change or scale your physical storage back ends to adapt to business changes, customer demands, or environmental factors, without having to disrupt or change the applications that consume the storage, or how your users interface with different storage back ends. You can find more details about LINSTOR integrations in the LINSTOR Integrations chapter in the LINSTOR User Guide. A blog article, “Creating OpenShift Persistent Storage with HA & DR Capability by Using LINSTOR” explores how LINSTOR can bring HA and DR benefits to OpenShift, as an example.

Support for stretched clustering

If you might be considering a stretched storage cluster architecture for redundancy and disaster recovery, LINSTOR can help. While this design architecture works best across campus or metro networks with low-latency, near LAN speed connections, you have the flexibility through LINSTOR to use other less-than-in-real-time replication strategies if you need to. This might help in cases where data replication across the stretched cluster connections might become a bottleneck.

LINSTOR can also help you balance storage resource allocation across a stretched cluster. This way, you can move beyond an active/passive design and take advantage of your investment in equipment, and not let storage resources go unused until a disaster event.

You can find more details about the stretched cluster design and LINSTOR in the blog article, “The Stretched Cluster Disaster Recovery Strategy”.

API and libraries for customized deployments

LINBIT developers created and maintain a REST API and three API libraries:

These open source API libraries make it possible to build customized LINSTOR-based applications or platform integrations. The Vates-built XOSTOR hyperconvergence solution for Xen Orchestra and the LINSTOR storage driver for Incus are two examples of third parties who have built their own solutions from these open source libraries.

Conclusion

Hopefully this article about LINSTOR features has helped you to learn more about the benefits that LINSTOR can offer. You can flexibly deploy LINSTOR on bare metal, in containers, or virtual machines, on its own or integrated with a platform, or in a disaggregated or hyperconverged environment. If provisioning and managing block storage for high availability or disaster recovery use cases is calling you, or if you need a persistent block storage solution for traditionally ephemeral things such as containers running in Kubernetes, or for I/O demanding applications such as databases or messaging queues, LINSTOR can likely help you.

- When evaluating StarWind’s performance testing results, keep in mind that StarWind used DRBD’s TCP kernel model, rather than its RDMA kernel module. It is also not recommended to use RAID 5 for DRBD’s metadata, as was done in Starwind’s testing. Using non-parity RAID (such as RAID 1) for DRBD’s metadata will allow for better performance. Finally, consider that DRBD used the least amount of CPU among the evaluated solutions through all of the tests that StarWind ran.↩︎